Gilad Golani

Explore Gilad’s insights, drawn from his extensive experience in R&D, software engineering, and product management. With a strategic mindset and hands-on expertise, he shares valuable perspectives on bridging development and product management to deliver quality-driven solutions.

Gilad's Data Security Posts

False Positives Are Killing Your DSPM Program: How to Measure Classification Accuracy

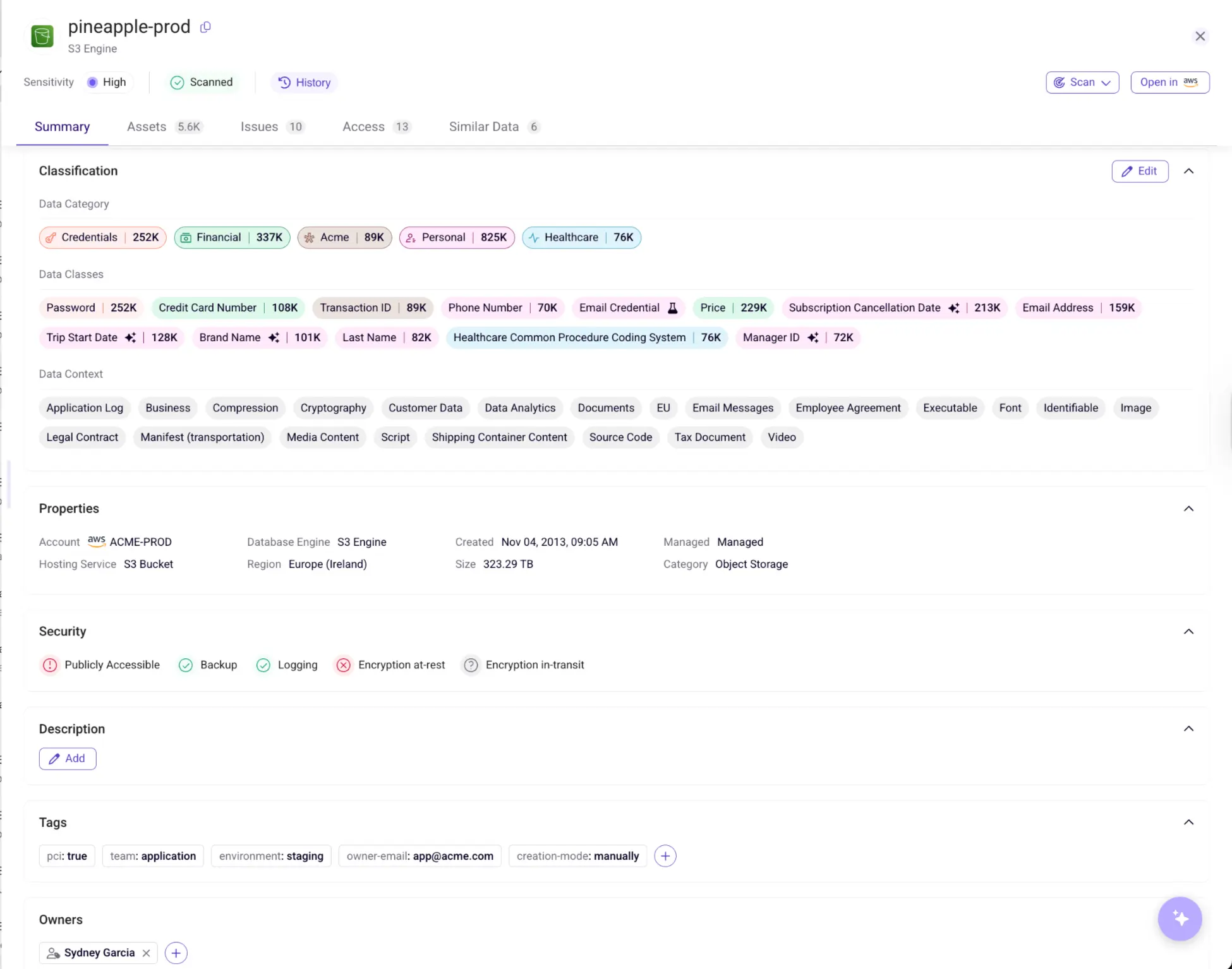

As more organizations move sensitive data to the cloud, Data Security Posture Management (DSPM) has become a critical security investment. But as DSPM adoption grows, a big problem is emerging: security teams are overwhelmed by false positives that create too much noise and not enough useful insight. If your security program is flooded with unnecessary alerts, you end up with more risk, not less.

Most enterprises say their existing data discovery and classification solutions fall short, primarily because they misclassify data. False positives waste valuable analyst time and deteriorate trust in your security operation. Security leaders need to understand what high-quality data classification accuracy really is, why relying only on regex fails, and how to use objective metrics like precision and recall to assess potential tools. Here’s a look at what matters most for accuracy in DSPM.

What Does Good Data Classification Accuracy Look Like?

To make real progress with data classification accuracy, you first need to know how to measure it. Two key metrics - precision and recall - are at the core of reliable classification. Precision tells you the share of correct positive results among everything identified as positive, while recall shows the percentage of actual sensitive items that get caught. You want both metrics to be high. Your DSPM solution should identify sensitive data, such as PII or PCI, without generating excessive false or misclassified results.

The F1-score adds another perspective, blending precision and recall for a single number that reflects both discovery and accuracy. On the ground, these metrics mean fewer false alerts, quicker responses, and teams that spend their time fixing problems rather than chasing noise. "Good" data classification produces consistent, actionable results, even as your cloud data grows and changes.
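These definitions can be written compactly. Writing TP, FP, and FN for the counts of true positives, false positives, and false negatives:

$$
\text{Precision} = \frac{TP}{TP + FP}, \qquad
\text{Recall} = \frac{TP}{TP + FN}, \qquad
F_1 = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}} = \frac{2\,TP}{2\,TP + FP + FN}
$$

As a purely illustrative example (the numbers are invented, not measurements from any tool): a scan that flags 100 items, 80 of them truly sensitive, while missing 10 sensitive items, has TP = 80, FP = 20, FN = 10. That gives a precision of 80/100 = 0.80, a recall of 80/90 ≈ 0.89, and an F1-score of 160/190 ≈ 0.84.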

The Hidden Cost of Regex-Only Data Discovery

A lot of older DSPM tools still depend on regular expressions (regex) to classify data in both structured and unstructured systems. Regex works for certain fixed patterns, but it struggles with the diverse, changing data types common in today’s cloud and SaaS environments. Regex can't always recognize if a string that “looks” like a personal identifier is actually just a random bit of data. This results in security teams buried by alerts they don’t need, leading to alert fatigue.
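To make the regex problem concrete, here is a minimal Python sketch. The pattern, the sample text, and the function names are all illustrative assumptions, not taken from any DSPM product. A naive "16 digits" pattern flags both a well-known test card number and an unrelated tracking ID; adding a Luhn checksum (a standard validity check for card numbers) is one small piece of extra context that filters the second match out.

```python
import re

# Naive pattern: 16 digits, optionally grouped in fours by spaces or hyphens.
CARD_PATTERN = re.compile(r"\b(?:\d{4}[ -]?){3}\d{4}\b")


def luhn_valid(number: str) -> bool:
    """Return True if the digits in `number` pass the Luhn checksum."""
    digits = [int(ch) for ch in number if ch.isdigit()]
    total = 0
    # Double every second digit, counting from the rightmost digit.
    for position, digit in enumerate(reversed(digits)):
        if position % 2 == 1:
            digit *= 2
            if digit > 9:
                digit -= 9
        total += digit
    return total % 10 == 0


def regex_only(text: str) -> list[str]:
    """Everything the bare pattern flags, with no further validation."""
    return CARD_PATTERN.findall(text)


def regex_plus_checksum(text: str) -> list[str]:
    """Pattern matches that also pass the Luhn checksum."""
    return [match for match in CARD_PATTERN.findall(text) if luhn_valid(match)]


# Hypothetical sample text: one test card number and one unrelated 16-digit ID.
SAMPLE = (
    "Test card 4111 1111 1111 1111 was charged. "
    "Shipment tracking id 1234567890123456 left the warehouse."
)

print("regex only:      ", regex_only(SAMPLE))
print("regex + checksum:", regex_plus_checksum(SAMPLE))
```

A checksum is still a narrow form of context: random 16-digit strings pass Luhn about one time in ten, so pattern-plus-checksum reduces false positives without eliminating them.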

Far from helping, regex-heavy approaches waste resources and make it easier for serious risks to slip through. With privacy regulations becoming more demanding and the average cost of a breach reaching $4.4 million, according to the annual "Cost of a Data Breach Report" by IBM and the Ponemon Institute, ignoring precision and recall is becoming increasingly costly.

How to Objectively Test DSPM Accuracy in Your POC

If your current DSPM produces more noise than value, a better method starts with clear testing. A meaningful proof-of-value (POV) process uses labeled data and a confusion matrix to calculate true positives, false positives, and false negatives. Don’t rely on vendor promises. Always test their claims with data from your real environment. Ask hard questions: How does the platform classify unstructured data? How much alert noise can you expect? Can it keep accuracy high even when scanning huge volumes across SaaS, multi-cloud, and on-prem systems? The best DSPM tool cuts through the clutter, surfacing only what matters.
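A labeled-data test of this kind can be scored in a few lines. The sketch below is a minimal illustration under assumed inputs: the file paths, the ground-truth labels, and the set of flagged files are hypothetical stand-ins for a sample you would label by hand and the findings a tool under evaluation would export.

```python
from dataclasses import dataclass


@dataclass
class Scores:
    tp: int  # sensitive and flagged
    fp: int  # not sensitive but flagged
    fn: int  # sensitive but missed
    tn: int  # not sensitive and not flagged

    @property
    def precision(self) -> float:
        flagged = self.tp + self.fp
        return self.tp / flagged if flagged else 0.0

    @property
    def recall(self) -> float:
        sensitive = self.tp + self.fn
        return self.tp / sensitive if sensitive else 0.0

    @property
    def f1(self) -> float:
        denominator = 2 * self.tp + self.fp + self.fn
        return 2 * self.tp / denominator if denominator else 0.0


def evaluate(ground_truth: dict[str, bool], flagged: set[str]) -> Scores:
    """Build the confusion-matrix counts from labels and tool output."""
    scores = Scores(tp=0, fp=0, fn=0, tn=0)
    for path, is_sensitive in ground_truth.items():
        was_flagged = path in flagged
        if is_sensitive and was_flagged:
            scores.tp += 1
        elif was_flagged:
            scores.fp += 1
        elif is_sensitive:
            scores.fn += 1
        else:
            scores.tn += 1
    return scores


# Hypothetical hand-labeled sample: True means the file really holds sensitive data.
GROUND_TRUTH = {
    "hr/payroll.csv": True,
    "hr/offer_letter.pdf": True,
    "sales/leads.xlsx": True,
    "support/ticket_export.json": True,
    "eng/readme.md": False,
    "eng/build.log": False,
    "sales/deck.pptx": False,
    "ops/tracking_ids.txt": False,
}

# Hypothetical output of the tool under test.
TOOL_FLAGGED = {
    "hr/payroll.csv",
    "hr/offer_letter.pdf",
    "sales/leads.xlsx",
    "eng/build.log",
    "ops/tracking_ids.txt",
}

result = evaluate(GROUND_TRUTH, TOOL_FLAGGED)
print(f"TP={result.tp} FP={result.fp} FN={result.fn} TN={result.tn}")
print(f"precision={result.precision:.2f} recall={result.recall:.2f} f1={result.f1:.2f}")
```

Running the same labeled sample through each candidate tool gives directly comparable numbers, which is hard to get from vendor-reported accuracy figures alone.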

Sentra Delivers Highest Accuracy with Small Language Models and Context

Sentra’s DSPM platform raises the bar by going beyond regex, using purpose-built small language models (SLMs) and advanced natural language processing (NLP) for context-driven data classification at scale. Customers and analysts consistently report that Sentra achieves the highest classification accuracy for PII and PCI, with very few false positives.

How does Sentra get these results without data ever leaving your environment? The platform combines multi-cloud discovery, agentless install, and deep contextual awareness - scanning extensive environments and accurately discerning real risks from background noise. Whether working with unstructured cloud data, ever-changing SaaS content, or traditional databases, Sentra keeps analysts focused on real issues and helps you stay compliant. Instead of fighting unnecessary alerts, your team sees clear results and can move faster with confidence.

Want to see Sentra DSPM in action? Schedule a Demo.

Reducing False Positives Produces Real Outcomes

Classification accuracy has a direct impact on whether your security is efficient or overwhelmed. With compliance rules tightening and threats growing, security teams cannot afford DSPM solutions that bury them in false positives. Regex-only tools no longer cut it - precision, recall, and truly reliable results should be standard.

Sentra’s SLM-powered, context-aware classification delivers the trustworthy performance businesses need, changing DSPM from just another alert engine to a real tool for reducing risk. Want to see the difference yourself? Put Sentra’s accuracy to the test in your own environment and finally move past false positive fatigue.

Zero Data Movement: The New Data Security Standard that Eliminates Egress Risk

Cloud adoption and the explosion of data have boosted business agility, but they’ve also created new headaches for security teams. As companies move sensitive information into multi-cloud and hybrid environments, old security models start to break down. Shuffling data for scanning and classification adds risk, piles on regulatory complexity, and drives up operational costs.

Zero Data Movement (ZDM) offers a new architectural approach, reshaping how advanced Data Security Posture Management (DSPM) platforms provide visibility, protection, and compliance. This post breaks down what makes ZDM unique, why it matters for security-focused enterprises, and how Sentra provides an innovative agentless and scalable design that is genuinely a zero data movement DSPM.

Defining Zero Data Movement Architecture

Zero Data Movement (ZDM) sets a new standard in data security. The premise is straightforward: sensitive data should stay in its original environment for security analysis, monitoring, and enforcement. Older models require copying, exporting, or centralizing data to scan it, while ZDM ensures that all security actions happen directly where data resides.

ZDM removes egress risk - shrinking the attack surface and reducing regulatory issues. For organizations juggling large cloud deployments and tight data residency rules, ZDM isn’t just an improvement - it's essential. Groups like the Cloud Security Alliance and new privacy regulations are moving the industry toward designs that build in privacy and non-stop protection.

Risks of Data Movement: Compliance, Cost, and Egress Exposure

Every time data is copied, exported, or streamed out of its native environment, new risks arise. Data movement creates challenges such as:

- Egress risk: Data at rest or in transit outside its original environment increases risk of breach, especially as those environments may be less secure.

- Compliance and regulatory exposure: Moving data across borders or different clouds can break geo-fencing and privacy controls, leading to potential violations and steep fines.

- Loss of context and control: Scattered data makes it harder to monitor everything, leaving gaps in visibility.

- Rising total cost of ownership (TCO): Scanning and classification can incur heavy cloud compute costs - so efficiency matters. Exporting or storing data, especially shadow data, drives up storage, egress, and compliance costs as well.

As more businesses rely on data, moving it unnecessarily only increases the risk - especially with fast-changing cloud regulations.

Legacy and Competitor Gaps: Why Data Movement Still Happens

Not every security vendor practices true zero data movement, and the differences are notable. Products from Cyera, Securiti, or older platforms still require temporary data exporting or duplication for analysis. This might offer a quick setup, but it exposes users to egress risks, insider threats, and compliance gaps - problems that are worse in regulated fields.

Competitors like Cyera often rely on shortcuts that fall short of ZDM’s requirements. Securiti and similar providers depend on connectors, API snapshots, or central data lakes, each adding potential risks and spreading data further than necessary. With ZDM, security operations like monitoring and classification happen entirely locally, removing the need to trust external storage or aggregation.

The Business Value of Zero Data Movement DSPM

Zero data movement DSPM changes the equation for businesses:

- Designed for compliance: Data remains within controlled environments, shrinking audit requirements and reducing breach likelihood.

- Lower TCO and better efficiency: Eliminates hidden expenses from extra storage, duplicate assets, and exporting to external platforms.

- Regulatory clarity and privacy: Supports data sovereignty, cross-border rules, and new zero trust frameworks with an egress-free approach.

Sentra’s agentless, cloud-native DSPM provides these benefits by ensuring sensitive data is never moved or copied. And Sentra delivers these benefits at scale - across multi-petabyte enterprise environments - without the performance and cost tradeoffs others suffer from. Real scenarios show the results: financial firms keep audit trails without data ever leaving allowed regions. Healthcare providers safeguard PHI at its source. Global SaaS companies secure customer data at scale, cost-effectively while meeting regional rules.

Future-Proofing Data Security: ZDM as the New Standard

With data volumes expected to hit 181 zettabytes in 2025, older protection methods that rely on moving data can’t keep up. Zero data movement architecture meets today's security demands and supports zero trust, metadata-driven access, and privacy-first strategies for the future.

Companies wanting to avoid dead ends should pick solutions that offer unified discovery, classification and policy enforcement without egress risk. Sentra’s ZDM architecture makes this possible, allowing organizations to analyze and protect information where it lives, at cloud speed and scale.

Conclusion

Zero Data Movement is more than a technical detail - it's a new architectural standard for any organization serious about risk control, compliance, and efficiency. As data grows and regulations become stricter, the old habits of moving, copying, or centralizing sensitive data will no longer suffice.

Sentra stands out by delivering a zero data movement DSPM platform that's agentless, real-time, and truly multicloud. For security leaders determined to cut egress risk, lower compliance spending, and get ahead in privacy, ZDM is the clear path forward.

Unstructured Data Is 80% of Your Risk: Why DSPM 1.0 Vendors, Like Varonis and Cyera, Fail to Protect It at Petabyte Scale

Unstructured data is the fastest-growing, least-governed, and most dangerous class of enterprise data. Emails, Slack messages, PDFs, screenshots, presentations, code repositories, logs, and the endless stream of GenAI-generated content — this is where the real risk lives.

The unstructured data dilemma is this: 80% of your organization’s data is essentially invisible to your current security tools, and the volume is climbing by up to 65% each year. This isn’t just a hypothetical - it’s the reality for enterprises as unstructured data spreads across cloud and SaaS platforms. Yet most Data Security Posture Management (DSPM) solutions - often called DSPM 1.0, and including legacy vendors and first-generation players like Cyera - were never built to handle this explosion at petabyte scale. Their architectures, classification engines, and scanning models break under real enterprise load.

Looking ahead to 2026, unstructured data security risk stands out as the single largest blind spot in enterprise security. If overlooked, it won’t just cause compliance headaches and soaring breach costs - it could put your organization in the headlines for all the wrong reasons.

The 80% Problem: Unstructured Data Dominates Your Risk

The Scale You Can’t Ignore

- Over 80% of enterprise data is unstructured.

- Unstructured data is growing 55-65% per year; by 2025, the world will store more than 180 zettabytes of it.

- 95% of organizations say unstructured data management is a critical challenge but less than 40% of data security budgets address this high-risk area.

Unstructured data is everywhere: cloud object stores, SaaS apps, collaboration tools, and legacy file shares. Unlike structured data in databases, it often lacks consistent metadata, access controls, or even basic visibility. This “dark data” is behind countless breaches, from accidental file exposures and overshared documents to sensitive AI training datasets left unmonitored.

The Business Impact

- The average breach now costs $4-4.9M, with unstructured data often at the center.

- Poor data quality, mostly from unstructured sources, costs the U.S. economy $3.1 trillion each year.

- More than half of organizations report at least one non-compliance incident annually, with average costs topping $1M.

The takeaway: Unstructured data isn’t just a storage problem.

Why DSPM 1.0 Fails: The Blind Spots of Legacy Approaches

Traditional Tools Fall Short in Cloud-First, Petabyte-Scale Environments

Legacy DSPM and DCAP solutions, such as Varonis or Netwrix, were built for an era when data lived on-premises, followed predictable structures, and grew at a manageable pace.

In today’s cloud-first reality, their limitations have become impossible to ignore:

- Discovery Gaps: Agent-based scanning can’t keep up with sprawling, constantly changing cloud and SaaS environments. Shadow and dark data across platforms like Google Drive, Dropbox, Slack, and AWS S3 often go unseen.

- Performance Limits: Once environments exceed 100 TB, and especially as they reach petabyte scale, these tools slow dramatically or miss data entirely.

- Manual Classification: Most legacy tools rely on static pattern matching and keyword rules, causing them to miss sensitive information hidden in natural language, code, images, or unconventional file formats.

- Limited Automation: They generate alerts but offer little or no automated remediation, leaving security teams overwhelmed and forcing manual cleanup.

- Siloed Coverage: Solutions designed for on-premises or single-cloud deployments create dangerous blind spots as organizations shift to multi-cloud and hybrid architectures.

Example: Collaboration App Exposure

A global enterprise recently discovered thousands of highly sensitive files—contracts, intellectual property, and PII—were unintentionally shared with “anyone with the link” inside a cloud collaboration platform. Their legacy DSPM tool failed to identify the exposure because it couldn’t scan within the app or detect real-time sharing changes.

Further, even emerging DSPM tools often rely on pattern matching or LLM-based scanning. These approaches also fail, for three reasons:

- Inaccuracy at scale: LLMs hallucinate, mislabel, and require enormous compute.

- Cost blow-ups: Vendors pass massive cloud bills back to customers or incur inordinate compute cost.

- Architectural limitations: Without clustering and elastic scaling, large datasets overwhelm the system.

This is exactly where Cyera and legacy tools struggle - and where Sentra’s SLM-powered classifier thrives with >99% accuracy at a fraction of the cost.

The New Mandate: Securing Unstructured Data in 2026 and Beyond

GenAI and stricter privacy laws (GDPR, CCPA, HIPAA) have raised the stakes for unstructured data security. Gartner now recommends Data Access Governance (DAG) and AI-driven classification to reduce oversharing and prepare for AI-centric workloads.

What Modern Security Leaders Need

- Agentless, Real-Time Discovery: No deployment hassles, continuous visibility, and coverage for unstructured data stores no matter where they live.

- Petabyte-Scale Performance: Scan, classify, and risk-score all data, everywhere it lives.

- AI-Driven Deep Classification: Use of natural language processing (NLP), Domain-specific Small Language Models (SLMs), and context analysis for every unstructured format.

- Automated Remediation: Playbooks that fix exposures, govern permissions, and ensure compliance without manual work.

- Multi-Cloud & SaaS Coverage: Security that follows your data, wherever it goes.

Sentra: Turning the 80% Blind Spot into a Competitive Advantage

Sentra was built specifically to address the risks of unstructured data in 2026 and beyond. There are nuances involved in solving this. Selecting an appropriate solution is key to a sustainable approach. Here’s what sets Sentra apart:

- Agentless Discovery Across All Environments: Instantly scans and classifies unstructured data across AWS, Azure, Google, M365, Dropbox, legacy file shares, and more - no agents required, no blind spots left behind.

- Petabyte-Tested Performance: Designed for Fortune 500 scale, Sentra keeps speed and accuracy high across petabytes, not just terabytes.

- AI-Powered Deep Classification: Our platform uses advanced NLP, SLMs, and context-aware algorithms to classify, label, and risk-score every file - including code, images, and AI training data, not just structured fields.

- Continuous, Context-Rich Visibility: Real-time risk scoring, identity and access mapping, and automated data lineage show not just where data lives, but who can access it and how it’s used.

- Automated Remediation and Orchestration: Sentra goes beyond alerts. Built-in playbooks fix permissions, restrict sharing, and enforce policies within seconds.

- Compliance-First, Audit-Ready: Quickly spot compliance gaps, generate audit trails, and reduce regulatory risk and reporting costs.

During a recent deployment with a global financial services company, Sentra uncovered 40% more exposed sensitive files than their previous DSPM tool. Automated remediation covered over 10 million documents across three clouds, cutting manual investigation time by 80%.

Actionable Takeaways for Security Leaders

1. Put Unstructured Data at the Center of Your 2026 Security Plan: Make sure your DSPM strategy covers all data, especially “dark” and shadow data in SaaS, object stores, and collaboration platforms.

2. Choose Agentless, AI-Driven Discovery: Legacy, agent-based tools can’t keep up. And underperforming emerging tools may not adequately scale. Look for continuous, automated scanning and classification that scales with your data.

3. Automate Remediation Workflows: Visibility is just the start; your platform should fix exposures and enforce policies in real time.

4. Adopt Multi-Cloud, SaaS-Agnostic Solutions: Your data is everywhere, and your security should be too. Ensure your solution supports all of your unstructured data repositories.

5. Make Compliance Proactive: Use real-time risk scoring and automated reporting to stay ahead of auditors and regulators.

Conclusion: Ready for the 80% Challenge?

With petabyte-scale, cloud-first data, ignoring unstructured data risk is no longer an option. Traditional DSPM tools can’t keep up, leaving most of your data - and your business - vulnerable. Sentra’s agentless, AI-powered platform closes this gap, delivering the discovery, classification, and automated response you need to turn your biggest blind spot into your strongest defense. See how Sentra uncovers your hidden risk - book an instant demo today.

Don’t let unstructured data be your organization’s Achilles’ heel. With Sentra, enterprises finally have a way to secure the data that matters most.

How SLMs (Small Language Models) Make Sentra’s AI Faster and More Accurate

The LLM Hype, and What’s Missing

Over the past few years, large language models (LLMs) have dominated the AI conversation. From writing essays to generating code, LLMs like GPT-4 and Claude have proven that massive models can produce human-like language and reasoning at scale.

But here's the catch: not every task needs a 70-billion-parameter model. Parameters are computationally expensive - they require both memory and processing time.

At Sentra, we discovered early on that the work our customers rely on - accurate, scalable classification of massive data flows - isn’t about writing essays or generating text. It’s about making decisions fast, reliably, and cost-effectively across dynamic, real-world data environments. While large language models (LLMs) are excellent at solving general problems, they create a lot of unnecessary computational overhead for this kind of work.

That’s why we’ve shifted our focus toward Small Language Models (SLMs) - compact, specialized models purpose-built for a single task: understanding and classifying data efficiently. By running hundreds of SLMs in parallel on regular CPUs, Sentra can deliver faster insights, stronger data privacy, and a dramatically lower total cost of AI-based classification that scales with our customers’ business, not their cloud bill.

What Is an SLM?

An SLM is a smaller, domain-specific version of a language model. Instead of trying to understand and generate any kind of text, an SLM is trained to excel at a particular task, such as identifying the topic of a document (what the document is about or what type of document it is), or detecting sensitive entities within documents, such as passwords, social security numbers, or other forms of PII.

In other words: If an LLM is a generalist, an SLM is a specialist. At Sentra, we use SLMs that are tuned and optimized for security data classification, allowing them to process high volumes of content with remarkable speed, consistency, and precision. These SLMs are based on standard open source models, but trained with data that was curated by Sentra, to achieve the level of accuracy that only Sentra can guarantee.

From LLMs to SLMs: A Strategic Evolution

Like many in the industry, we started by testing LLMs to see how well they could classify and label data. They were powerful, but also slow, expensive, and difficult to scale. Over time, it became clear: LLMs are too big and too expensive to run on customer data for Sentra to be a viable, cost-effective solution for data classification.

Each SLM handles a focused part of the process: initial categorization, text extraction from documents and images, and sensitive entity classification. The SLMs are not only accurate (even more accurate than LLMs classifying using prompts) - they can run on standard CPUs efficiently, and they run inside the customer’s environment, as part of Sentra’s scanners.

The Benefits of SLMs for Customers

a. Speed and Efficiency

SLMs process data faster because they’re lean by design. They don’t waste cycles generating full sentences or reasoning across irrelevant contexts. This means real-time or near-real-time classification, even across millions of data points.

b. Accuracy and Adaptability

SLMs are pre-trained “zero-shot” language models that can categorize and classify generically, without first being trained on each specific task. “Zero-shot” means that, regardless of the data it was trained on, the model can classify an arbitrary set of entities and document labels without training on each one specifically. This is possible because language models capture deep natural language understanding at the training stage.

Even so, Sentra fine-tunes these models to further increase classification accuracy, by curating a very large set of tagged data that resembles the type of data our customers usually run into.

Our feedback loops ensure that model performance only gets better over time - a direct reflection of our customers’ evolving environments.

c. Cost and Sustainability

Because SLMs are compact, they require less compute power, which means lower operational costs and a smaller carbon footprint. This efficiency allows us to deliver powerful AI capabilities to customers without passing on the heavy infrastructure costs of running massive models.

d. Security and Control

Unlike LLMs hosted on external APIs, SLMs run inside the customer’s environment as part of Sentra’s scanners, preserving data privacy and regulatory compliance. Customers maintain full control over their sensitive information - a critical requirement in enterprise data security.

A Quick Comparison: SLMs vs. LLMs

The difference between SLMs and LLMs becomes clear when you look at their performance across key dimensions:

| Factor | SLMs | LLMs |
|---|---|---|
| Speed | Fast, optimized for classification throughput | Slower and more compute-intensive for large-scale inference |
| Cost | Cost-efficient | Expensive to run at scale |
| Accuracy (for simple tasks) | Optimized for classification | Comparable but unnecessary overhead |
| Deployment | Lightweight, easy to integrate | Complex and resource-heavy |
| Adaptability (with feedback) | Continuously fine-tuned, ability to fine tune per customer | Harder to customize, fine-tuning costly |
| Best Use Case | Classification, tagging, filtering | Reasoning and analysis, generation, synthesis |

Continuous Learning: How Sentra’s SLMs Grow

One of the most powerful aspects of our SLM approach is continuous learning. Each Sentra customer project contributes valuable insights, from new data patterns to evolving classification needs. These learnings feed back into our training workflows, helping us refine and expand our models over time.

While not every model retrains automatically, the system is built to support iterative optimization: as our team analyzes feedback and performance, models can be fine-tuned or extended to handle new categories and contexts.

The result is an adaptive ecosystem of SLMs that becomes more effective as our customer base and data diversity grow, ensuring Sentra’s AI remains aligned with real-world use cases.

Sentra’s Multi-SLM Architecture

Sentra’s scanning technology doesn’t rely on a single model. We run many SLMs in parallel, each specializing in a distinct layer of classification:

- Embedding models that convert data into meaningful vector representations

- Entity Classification models that label sensitive entities

- Document Classification models that label documents by type

- Image-to-text and speech-to-text models that are able to process non-textual data into textual data

This layered approach allows us to operate at scale - quickly, cheaply, and with great results. In practice, that means faster insights, fewer errors, and a more responsive platform for every customer.
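The fan-out pattern described above can be sketched in a few lines of Python. This is only an illustration of running several specialists side by side and merging their outputs, not Sentra's implementation: the two "specialists" are trivial keyword and regex placeholders standing in for real models, and every name in the block is hypothetical.

```python
import re
from concurrent.futures import ThreadPoolExecutor


def classify_document_type(text: str) -> str:
    # Placeholder for a document-classification model.
    return "invoice" if "invoice" in text.lower() else "unknown"


def find_sensitive_entities(text: str) -> list[str]:
    # Placeholder for an entity-classification model: finds email-like strings.
    return re.findall(r"[\w.+-]+@[\w-]+\.[\w.]+", text)


# Each specialist handles one layer of classification.
SPECIALISTS = {
    "document_type": classify_document_type,
    "entities": find_sensitive_entities,
}


def classify(text: str) -> dict[str, object]:
    """Run every specialist on the same text concurrently and merge the results."""
    with ThreadPoolExecutor(max_workers=len(SPECIALISTS)) as pool:
        futures = {name: pool.submit(fn, text) for name, fn in SPECIALISTS.items()}
        return {name: future.result() for name, future in futures.items()}


labels = classify("Invoice 1042 for jane.doe@example.com")
print(labels)
```

Threads keep the sketch short. CPU-bound model inference would more likely use separate processes or a batching inference runtime, since Python threads do not run pure-Python CPU work in parallel.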

The Future of AI Is Specialized

We believe the next frontier of AI isn’t about who can build the biggest model, it’s about who can build the most efficient, adaptive, and secure ones.

By embracing SLMs, Sentra is pioneering a future where AI systems are purpose-built, transparent, and sustainable. Our approach aligns with a broader industry shift toward task-optimized intelligence - models that do one thing extremely well and can learn continuously over time.

Conclusion: The Power of Small

At Sentra, we’ve learned that in AI, bigger isn’t always better. Our commitment to SLMs reflects our belief that efficiency, adaptability, and precision matter most for customers. By running thousands of small, smart models rather than a single massive one, we’re able to classify data faster, cheaper, and with greater accuracy - all while ensuring customer privacy and control.

In short: Sentra’s SLMs represent the power of small, and the future of intelligent classification.

How Automated Remediation Enables Proactive Data Protection at Scale

Scaling Automated Data Security in Cloud and AI Environments

Modern cloud and AI environments move faster than human response. By the time a manual workflow catches up, sensitive data may already be at risk. Organizations need automated remediation to reduce response time, enforce policy at scale, and safeguard sensitive data the moment it becomes exposed. Comprehensive data discovery and accurate data classification are foundational to this effort. Without knowing what data exists and how it's handled, automation can't succeed.

Sentra’s cloud-native Data Security Platform (DSP) delivers precisely that. With built-in, context-aware automation, data discovery, and classification, Sentra empowers security teams to shift from reactive alerting to proactive defense. From discovery to remediation, every step is designed for precision, speed, and seamless integration into your existing security stack.

Automated Remediation: Turning Data Risk Into Action

Sentra doesn't just detect risk, it acts. At the core of its value is its ability to execute automated remediation through native integrations and a powerful API-first architecture. This lets organizations immediately address data risks without waiting for manual intervention.

Key Use Cases for Automated Data Remediation

Sensitive Data Tagging & Classification Automation

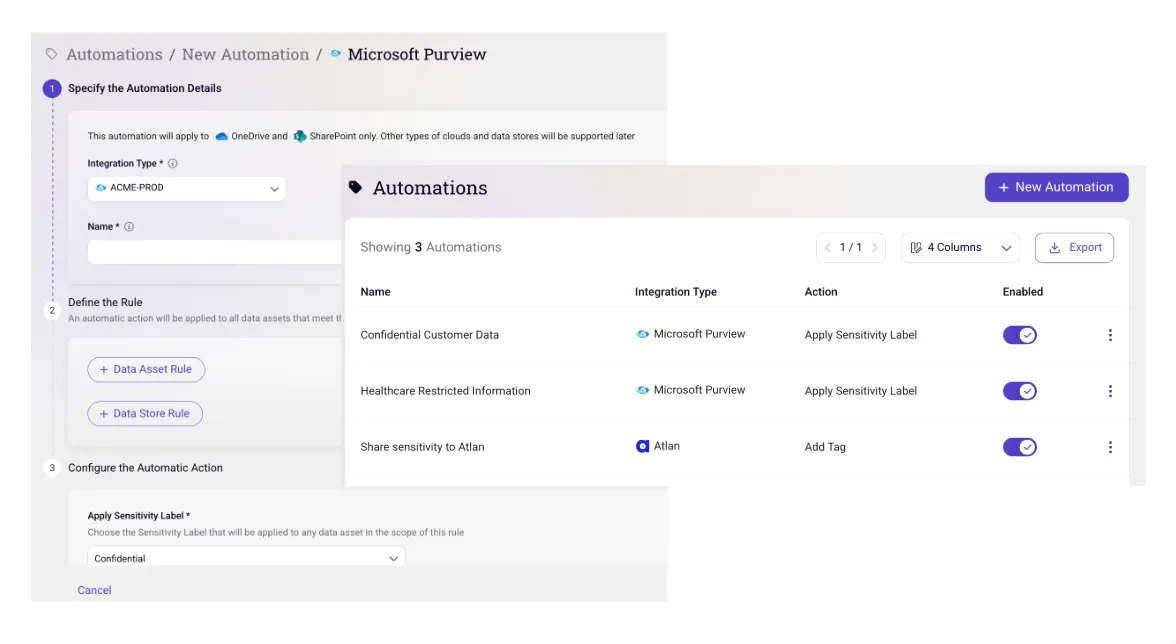

Sentra accurately classifies and tags sensitive data across environments like Microsoft 365, Amazon S3, Azure, and Google Cloud Platform. Its Automation Rules Page enables dynamic labels based on data type and context, empowering downstream tools to apply precise protections.

Automated Access Revocation & Insider Risk Mitigation

Sentra identifies excessive or inappropriate access and revokes it in real time. With integrations into IAM and CNAPP tools, it enforces least-privilege access. Advanced use cases include Just-In-Time (JIT) access via SOAR tools like Tines or Torq.

Enforced Data Encryption & Masking Automation

Sentra ensures sensitive data is encrypted and masked through integrations with Microsoft Purview, Snowflake DDM, and others. It can remediate misclassified or exposed data and apply the appropriate controls, reducing exposure and improving compliance.

Integrated Remediation Workflow Automation

Sentra streamlines incident response by triggering alerts and tickets in ServiceNow, Jira, and Splunk. Context-rich events accelerate triage and support policy-driven automated remediation workflows.
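A policy-driven remediation workflow of the kind these use cases describe boils down to mapping the context of a finding to a set of actions. The sketch below shows that idea in Python under loud assumptions: the `Finding` fields, the action names, and the rules are all hypothetical, and nothing here reflects Sentra's actual API or schema.

```python
from dataclasses import dataclass

# Hypothetical data classes treated as sensitive by this example policy.
SENSITIVE_CLASSES = {"PII", "PCI", "PHI"}


@dataclass(frozen=True)
class Finding:
    resource: str          # where the data lives
    data_class: str        # label produced by classification, e.g. "PII"
    publicly_shared: bool  # exposed through a public link or similar
    encrypted: bool        # encrypted at rest


def plan_actions(finding: Finding) -> list[str]:
    """Translate one finding into an ordered list of remediation actions."""
    actions: list[str] = []
    if finding.publicly_shared:
        actions.append("revoke_public_link")
    if finding.data_class in SENSITIVE_CLASSES and not finding.encrypted:
        actions.append("enforce_encryption")
    if actions:
        # Record anything that was changed so analysts can review it.
        actions.append("open_ticket")
    return actions


exposed = Finding("s3://example-bucket/export.csv", "PII", publicly_shared=True, encrypted=False)
benign = Finding("s3://example-bucket/logo.png", "public", publicly_shared=False, encrypted=True)

print(plan_actions(exposed))
print(plan_actions(benign))
```

Keeping the rules as plain data-to-action mappings makes the policy easy to audit. Accurate classification matters here because every false positive would trigger an unnecessary action, not just an unnecessary alert.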

Architecture Built for Scalable Security Automation

Cloud & AI Data Visibility with Actionable Remediation

Sentra provides visibility across AWS, Azure, GCP, and M365 while minimizing data movement. It surfaces actionable guidance, such as missing logging or improper configurations, for immediate remediation.

Dynamic Policy Enforcement via Tagging

Sentra’s tagging flows directly into cloud-native services and DLP platforms, powering dynamic, context-aware policy enforcement.

API-First Architecture for Security Automation

With a REST API-first design, Sentra integrates seamlessly with security stacks and enables full customization of workflows, dashboards, and automation pipelines.

Why Sentra for Automated Remediation?

Sentra offers a unified platform for security teams that need visibility, precision, and automation at scale. Its advantages include:

- No agents or connectors required

- High-accuracy data classification for confident automation

- Deep integration with leading security and IT platforms

- Context-rich tagging to drive intelligent enforcement

- Built-in data discovery that powers proactive policy decisions

- OpenAPI interface for tailored remediation workflows

These capabilities are particularly valuable for CISOs, Heads of Data Security, and AI Security teams tasked with securing sensitive data in complex, distributed environments.

Automate Data Remediation and Strengthen Cloud Security

Today’s cloud and AI environments demand more than visibility; they require decisive, automated action. Security leaders can no longer afford to rely on manual processes when sensitive data is constantly in motion.

Sentra delivers the speed, precision, and context required to protect what matters most. By embedding automated remediation into core security workflows, organizations can eliminate blind spots, respond instantly to risk, and ensure compliance at scale.

New Healthcare Cyber Regulations: What Security Teams Need to Know

Why New Healthcare Cybersecurity Regulations Are Critical

In today’s healthcare landscape, cyberattacks on hospitals and health services have become increasingly common and devastating. For organizations that handle vast amounts of sensitive patient information, a single breach can mean exposing millions of records, causing not only financial repercussions but also risking patient privacy, trust, and care continuity.

Top Data Breaches in Hospitals in 2024: A Year of Costly Cyber Incidents

2024 saw a series of high-profile data breaches in the healthcare sector, exposing critical vulnerabilities and emphasizing the urgent need for stronger cybersecurity measures in 2025 and beyond. Among the most significant incidents was the breach at Change Healthcare, Inc., which resulted in the exposure of 100 million records. As one of the largest healthcare data breaches in history, this event showed how difficult it is to secure patient data at scale and how severe the risks posed by hacking incidents are. Similarly, HealthEquity, Inc. suffered a breach impacting 4.3 million individuals, underscoring the vulnerabilities associated with healthcare business associates who manage data for multiple organizations. Finally, Concentra Health Services, Inc. experienced a breach that compromised nearly 4 million patient records, raising critical concerns about the adequacy of cybersecurity defenses in healthcare facilities. These incidents significantly impacted patients and providers alike and strengthened the case for robust cybersecurity measures and stricter regulations to protect sensitive data.

New York’s New Cybersecurity Reporting Requirements for Hospitals

In response to the growing threat of cyberattacks, many healthcare organizations and communities are implementing stronger cybersecurity protections. In October, New York State took a significant step by introducing new cybersecurity regulations for general hospitals aimed at safeguarding patient data and reinforcing security measures across healthcare systems. Under these regulations, hospitals in New York must report any “material cybersecurity incident” to the New York State Department of Health (NYSDOH) within 72 hours of discovery.

This 72-hour reporting window matches the breach notification deadline under the European Union’s GDPR and is comparable to the SEC’s four-business-day disclosure requirement for public companies. However, its application in healthcare represents a critical shift, ensuring incidents are addressed and reported promptly.

The rapid reporting requirement aims to:

- Enable the NYSDOH to assess and respond to cyber incidents across the state’s healthcare network.

- Help mitigate potential fallout by ensuring hospitals promptly address vulnerabilities.

- Protect patients by fostering transparency around data breaches and associated risks.

For hospitals, meeting this requirement means refining incident response protocols to act swiftly upon detecting a breach. Compliance with these regulations not only safeguards patient data but also strengthens trust in healthcare services.
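As a minimal sketch of how an incident response runbook might track the 72-hour window described above, the snippet below computes the reporting deadline from the time of discovery. The function names and the example timestamp are hypothetical, and the sketch assumes the clock starts at discovery, as the paragraph above states.

```python
from datetime import datetime, timedelta, timezone

# 72-hour window, as described in the text above.
REPORTING_WINDOW = timedelta(hours=72)


def reporting_deadline(discovered_at: datetime) -> datetime:
    """Return the latest time a report can be filed for this incident."""
    if discovered_at.tzinfo is None:
        # Naive timestamps are ambiguous across time zones, so reject them.
        raise ValueError("discovered_at must be timezone-aware")
    return discovered_at + REPORTING_WINDOW


def hours_remaining(discovered_at: datetime, now: datetime) -> float:
    """Hours left before the deadline; negative once the window has passed."""
    return (reporting_deadline(discovered_at) - now).total_seconds() / 3600


# Hypothetical incident discovered on 6 January 2025 at 09:30 UTC.
discovered = datetime(2025, 1, 6, 9, 30, tzinfo=timezone.utc)
deadline = reporting_deadline(discovered)
print(deadline.isoformat())
print(hours_remaining(discovered, discovered + timedelta(hours=24)))
```

Feeding a value like `hours_remaining` into an alerting or ticketing system is one way to keep the deadline visible while triage is still in progress.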

With these regulations, New York is setting a precedent that could reshape healthcare cybersecurity standards nationwide. By emphasizing proactive cybersecurity and quick incident response, the state is establishing a higher bar for protecting sensitive data in healthcare organizations, one that other states may follow.

HIPAA Updates and the Role of HHS

While New York leads with immediate, state-level action, the Department of Health and Human Services (HHS) is also working to update the HIPAA Security Rule with new cybersecurity standards. These updates, expected to be proposed later this year, will follow a lengthy regulatory process, including a notice of proposed rulemaking, a public comment period, and the eventual issuance of a final rule. Once finalized, healthcare organizations will have time to comply.

In the interim, the HHS has outlined voluntary cybersecurity goals, announced in January 2024. While these recommendations are a step forward, they lack the urgency and enforceability of New York’s state-level regulations. The contrast between the swift action in New York and the slower federal process highlights the critical role state initiatives play in bridging gaps in patient data protection.

Together, these developments—New York’s rapid reporting requirements and the ongoing HIPAA updates—show a growing recognition of the need for stronger cybersecurity measures in healthcare. They emphasize the importance of immediate action at the state level while federal efforts progress toward long-term improvements in data security standards.

Penalties for Healthcare Cybersecurity Non-Compliance in NY

Non-compliance with any health law or regulation in New York State, including cybersecurity requirements, may result in penalties. However, the primary goal of these regulations is not to impose financial penalties but to ensure that healthcare facilities are equipped with the necessary resources and guidance to defend against cyberattacks. Under Section 12 of health law regulations in New York State, violations can result in civil penalties of up to $2,000 per offense, with increased fines for more severe or repeated infractions. If a violation is repeated within 12 months and poses a serious health threat, the fine can rise to $5,000. For violations directly causing serious physical harm to a patient, penalties may reach $10,000. A portion of fines exceeding $2,000 is allocated to the Patient Safety Center to support its initiatives. These penalties aim to ensure compliance, with enforcement actions carried out by the Commissioner or the Attorney General. Additionally, penalties may be negotiated or settled under certain circumstances, providing flexibility while maintaining accountability.
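The penalty tiers summarized above can be expressed as a small lookup, which may help when estimating worst-case exposure. This is a simplified sketch of the tiers exactly as the paragraph describes them; the function names and flags are hypothetical, and it ignores negotiated settlements and any other factors the statute may consider.

```python
def max_civil_penalty(repeat_serious_threat: bool = False,
                      caused_serious_harm: bool = False) -> int:
    """Upper bound in USD for one violation, per the tiers summarized above."""
    if caused_serious_harm:
        # Violation directly causing serious physical harm to a patient.
        return 10_000
    if repeat_serious_threat:
        # Repeated within 12 months and posing a serious health threat.
        return 5_000
    # Baseline ceiling per offense.
    return 2_000


def max_exposure(violations: list[dict]) -> int:
    """Sum the per-violation ceilings for a list of hypothetical violations."""
    return sum(max_civil_penalty(**v) for v in violations)


# Three hypothetical violations, one in each tier.
example = [
    {},
    {"repeat_serious_threat": True},
    {"caused_serious_harm": True},
]
print(max_exposure(example))
```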

Importance of Prioritizing Breach Reporting

With the rapid digitization of healthcare services, regulations are expected to tighten significantly in the coming years. HIPAA, in particular, is anticipated to evolve with stronger privacy protections and expanded rules to address emerging challenges. Healthcare providers must make cybersecurity a top priority to protect patients from cyber threats. This involves adopting proactive risk assessments, implementing strong data protection strategies, and optimizing breach detection, response, and reporting capabilities to meet regulatory requirements effectively.

Data Security Platforms (DSPs) are essential for safeguarding sensitive healthcare data. These platforms enable organizations to locate and classify patient information, such as lab results, prescriptions, personally identifiable information, or medical images, across multiple formats and environments, ensuring comprehensive protection and regulatory compliance.

Breach Reporting With Sentra

A proper classification solution is essential for understanding the nature and sensitivity of your data at all times. With Sentra, you gain a clear, real-time view of your data's classification, making it easier to determine if sensitive data was involved in a breach, identify the types of data affected, and track who had access to it. This ensures that your breach reports are accurate, comprehensive, and aligned with regulatory requirements.

Sentra can help you adhere to many compliance frameworks, including PCI, GDPR, SOC2 and more, that may be applicable to your sensitive data as it travels around the organization. It will automatically alert you to violations, provide insight into the impact of any compromise, help you prioritize associated risks, and integrate with common IR tools to streamline remediation. Sentra automates these processes so you can focus your energy on eliminating risks.

If you want to learn more about Sentra's Data Security Platform, and how you can get started with adhering to the different compliance frameworks, please visit Sentra's demo page.

Supercharging DLP with Automatic Data Discovery & Classification of Sensitive Data

Data Loss Prevention (DLP) is a keystone of enterprise security, yet traditional DLP solutions continue to suffer from high rates of both false positives and false negatives, primarily because they struggle to accurately identify and classify sensitive data in cloud-first environments.

New advanced data discovery and contextual classification technology directly addresses this gap, transforming DLP from an imprecise, reactive tool into a proactive, highly effective solution for preventing data loss.

Why DLP Solutions Can’t Work Alone

DLP solutions are designed to prevent sensitive or confidential data from leaving your organization, support regulatory compliance, and protect intellectual property and reputation. A noble goal indeed. Yet DLP projects are notoriously anxiety-inducing for CISOs. They often generate a high number of false positives that disrupt legitimate business activities and exacerbate alert fatigue for security teams.

What’s worse than false positives? False negatives. Today, traditional DLP solutions too often fail to prevent data loss because they cannot efficiently discover and classify sensitive data in dynamic, distributed, and ephemeral cloud environments.

Traditional DLP faces a twofold challenge:

- High False Positives: DLP tools often flag benign or irrelevant data as sensitive, overwhelming security teams with unnecessary alerts and leading to alert fatigue.

- High False Negatives: Sensitive data is frequently missed due to poor or outdated classification, leaving organizations exposed to regulatory, reputational, and operational risks.

These issues stem from DLP’s reliance on basic pattern-matching, static rules, and limited context. As a result, DLP cannot keep pace with the ways organizations use, store, and share data, which produces both high false positives and high false negatives. Furthermore, the explosion of unstructured data types and shadow IT creates blind spots that traditional DLP solutions cannot detect. It isn’t that DLP solutions don’t work; rather, they lack the underlying discovery and classification of sensitive data needed to work correctly.

AI-Powered Data Discovery & Classification Layer

Continuous, accurate data classification is the foundation for data security. An AI-powered data discovery and classification platform can act as the intelligence layer that makes DLP work as intended. Here’s how Sentra complements the core limitations of DLP solutions:

1. Continuous, Automated Data Discovery

- Comprehensive Coverage: Discovers sensitive data across all data types and locations - structured and unstructured sources, databases, file shares, code repositories, cloud storage, SaaS platforms, and more.

- Cloud-Native & Agentless: Scans your entire cloud estate (AWS, Azure, GCP, Snowflake, etc.) without agents or data leaving your environment, ensuring privacy and scalability.

- Shadow Data Detection: Uncovers hidden or forgotten (“shadow”) data sets that legacy tools inevitably miss, providing a truly complete data inventory.

2. Contextual, Accurate Classification

- AI-Driven Precision: Sentra’s proprietary LLMs and hybrid models achieve over 95% classification accuracy, drastically reducing both false positives and false negatives.

- Contextual Awareness: Sentra goes beyond simple pattern-matching to truly understand business context, data lineage, sensitivity, and usage, ensuring only truly sensitive data is flagged for DLP action.

- Custom Classifiers: Enables organizations to tailor classification to their unique business needs, including proprietary identifiers and nuanced data types, for maximum relevance.

3. Real-Time, Actionable Insights

- Sensitivity Tagging: Automatically tags and labels files with rich metadata, which can be fed directly into your DLP for more granular, context-aware policy enforcement.

- API Integrations: Seamlessly integrates with existing DLP, IR, ITSM, IAM, and compliance tools, enhancing their effectiveness without disrupting existing workflows.

- Continuous Monitoring: Provides ongoing visibility and risk assessment, so your DLP is always working with the latest, most accurate data map.

How Sentra Supercharges DLP Solutions

Better Classification Means Less Noise, More Protection

- Reduce Alert Fatigue: Security teams focus on real threats, not chasing false alarms, which results in better resource allocation and faster response times.

- Accelerate Remediation: Context-rich alerts enable faster, more effective incident response, minimizing the window of exposure.

- Regulatory Compliance: Accurate classification supports GDPR, PCI DSS, CCPA, HIPAA, and more, reducing audit risk and ensuring ongoing compliance.

- Protect IP and Reputation: Discover and secure proprietary data, customer information, and business-critical assets, safeguarding your organization’s most valuable resources.

Why Sentra Outperforms Legacy Approaches

Sentra’s hybrid classification framework combines rule-based systems for structured data with advanced LLMs and zero-shot learning for unstructured and novel data types.

This versatility ensures:

- Scalability: Handles petabytes of data across hybrid and multi-cloud environments, adapting as your data landscape evolves.

- Adaptability: Learns and evolves with your business, automatically updating classifications as data and usage patterns change.

- Privacy: All scanning occurs within your environment - no data ever leaves your control, ensuring compliance with even the strictest data residency requirements.

Use Case: Where DLP Alone Fails, Sentra Prevails

A financial services company uses a leading DLP solution to monitor and prevent the unauthorized sharing of sensitive client information, such as account numbers and tax IDs, across cloud storage and email. The DLP is configured with pattern-matching rules and regular expressions for identifying sensitive data.

What Goes Wrong:

An employee uploads a spreadsheet to a shared cloud folder. The spreadsheet contains a mix of client names, account numbers, and internal project notes. However, the account numbers are stored in a non-standard format (e.g., with dashes, spaces, or embedded within other text), and the file is labeled with a generic name like “Q2_Projects.xlsx.” The DLP solution, relying on static patterns and file names, fails to recognize the sensitive data and allows the file to be shared externally. The incident goes undetected until a client reports a data breach.
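The failure mode in this scenario can be illustrated with a short sketch. It assumes, purely for illustration, that account numbers are 10 digits long and that the DLP rule is a strict regular expression for a standalone 10-digit token; neither detail comes from a real product. The second function shows a simple normalization step that catches the reformatted values.

```python
import re

# Hypothetical strict DLP rule: exactly 10 consecutive digits as a standalone token.
STRICT_ACCOUNT = re.compile(r"\b\d{10}\b")


def strict_match(cell: str) -> bool:
    """Mimic a static pattern rule applied to one spreadsheet cell."""
    return STRICT_ACCOUNT.search(cell) is not None


def normalized_match(cell: str) -> bool:
    """Remove dashes and spaces between digits, then look for a 10-digit run."""
    collapsed = re.sub(r"(?<=\d)[\s-]+(?=\d)", "", cell)
    return re.search(r"(?<!\d)\d{10}(?!\d)", collapsed) is not None


cells = [
    "1234567890",          # standard format
    "1234-567-890",        # dashes
    "12 3456 7890",        # spaces
    "ref:ACCT1234567890x", # embedded within other text
    "Q2 budget review",    # no account number
]

for cell in cells:
    print(f"{cell!r}: strict={strict_match(cell)}, normalized={normalized_match(cell)}")
```

The strict rule matches only the first cell, while the normalized check also matches the next three. Normalization alone is not a complete answer, since any 10-digit value (a phone number, for example) would now match as well, which is why the surrounding context of the data matters for keeping false positives down.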

How Sentra Solves the Problem:

To address this, the security team looks for a solution capable of discovering and classifying unstructured data without creating more overhead. They select Sentra for its ability to autonomously and continuously discover and classify all types of data across their hybrid cloud environment. Once deployed, Sentra immediately recognizes the context and content of files like the spreadsheet that enabled the data leak. It accurately identifies the embedded account numbers, even in non-standard formats, and tags the file as highly sensitive.

This sensitivity tag is automatically fed into the DLP, which then enforces strict sharing controls and alerts the security team before any external sharing can occur. As a result, sensitive data is correctly classified and protected, the rate of false negatives is dramatically reduced, and the organization avoids further compliance violations and reputational harm.

Getting Started with Sentra is Easy

- Deploy Agentlessly: No complex installation. Sentra integrates quickly and securely into your environment, minimizing disruption.

- Automate Discovery & Classification: Build a living, accurate inventory of your sensitive data assets, continuously updated as your data landscape changes.

- Enhance DLP Policies: Feed precise, context-rich sensitivity tags into your DLP for smarter, more effective enforcement across all channels.

- Monitor Continuously: Stay ahead of new risks with ongoing discovery, classification, and risk assessment, ensuring your data is always protected.

“Sentra’s contextual classification engine turns DLP from a reactive compliance checkbox into a proactive, business-enabling security platform.”

Fuel DLP with Automatic Discovery & Classification

DLP is an essential data protection tool, but without accurate, context-aware data discovery and classification, it’s incomplete and often ineffective. Sentra supercharges your DLP with continuous data discovery and accurate classification, ensuring you find and protect what matters most—while eliminating noise, inefficiency, and risk.

Ready to see how Sentra can supercharge your DLP? Contact us for a demo today.

Best Practices: Automatically Tag and Label Sensitive Data

The Importance of Data Labeling and Tagging

In today's fast-paced business environment, data rarely stays in one place. It moves across devices, applications, and services as individuals collaborate with internal teams and external partners. This mobility is essential for productivity but poses a challenge: how can you ensure your data remains secure and compliant with business and regulatory requirements when it's constantly on the move?

Why Labeling and Tagging Data Matters

Data labeling and tagging provide a critical solution to this challenge. By assigning sensitivity labels to your data, you can define its importance and security level within your organization. These labels act as identifiers that abstract the content itself, enabling you to manage and track the data type without directly exposing sensitive information. With the right labeling, organizations can also control access in real-time.

For example, labeling a document containing social security numbers or credit card information as Highly Confidential allows your organization to acknowledge the data's sensitivity and enforce appropriate protections, all without needing to access or expose the actual contents.

Why Sentra’s AI-Based Classification Is a Game-Changer

Sentra’s AI-based classification technology strengthens data security by ensuring that sensitivity labels are applied with exceptional accuracy. Leveraging advanced LLMs, Sentra adds context-aware classification capabilities, such as:

- Detecting the geographic residency of data subjects.

- Differentiating between Customer Data and Employee Data.

- Identifying and treating Synthetic or Mock Data differently from real sensitive data.

This context-based approach eliminates the inefficiencies of manual processes and seamlessly scales to meet the demands of modern, complex data environments. By integrating AI into the classification process, Sentra empowers teams to confidently and consistently protect their data—ensuring sensitive information remains secure, no matter where it resides or how it is accessed.

Benefits of Labeling and Tagging in Sentra

Sentra enhances your ability to classify and secure data by automatically applying sensitivity labels to data assets. By automating this process, Sentra removes the manual effort required from each team member—achieving accuracy that’s only possible through a deep understanding of what data is sensitive and its broader context.

Here are some key benefits of labeling and tagging in Sentra:

- Enhanced Security and Loss Prevention: Sentra’s integration with Data Loss Prevention (DLP) solutions prevents the loss of sensitive and critical data by applying the right sensitivity labels. Sentra’s granular, contextual tags help to provide the detail necessary to action remediation automatically so that operations can scale.

- Easily Build Your Tagging Rules: Sentra’s intuitive Rule Builder allows you to automatically apply sensitivity labels to assets based on your pre-existing tagging rules and/or define new ones via the builder UI (see screen below). Sentra imports discovered Microsoft Purview Information Protection (MPIP) labels to speed this process.

- Labels Move with the Data: Sensitivity labels created in Sentra can be mapped to Microsoft Purview Information Protection (MPIP) labels and applied to various applications like SharePoint, OneDrive, Teams, Amazon S3, and Azure Blob Containers. Once applied, labels are stored as metadata and travel with the file or data wherever it goes, ensuring consistent protection across platforms and services.

- Automatic Labeling: Sentra allows for the automatic application of sensitivity labels based on the data's content. Auto-tagging rules, configured for each sensitivity label, determine which label should be applied during scans for sensitive information.

- Support for Structured and Unstructured Data: Sentra enables labeling for files stored in cloud environments such as Amazon S3 or EBS volumes and for database columns in structured data environments like Amazon RDS.

By implementing these labeling practices, your organization can track, manage, and protect data with ease while maintaining compliance and safeguarding sensitive information. Whether collaborating across services or storing data in diverse cloud environments, Sentra ensures your labels and protection follow the data wherever it goes.
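To make the idea of auto-tagging rules concrete, here is a minimal sketch of how a rule set might pick one sensitivity label from the data classes detected during a scan. It is not Sentra's implementation: the `TaggingRule` structure, the label names, the data class names, and the "most sensitive match wins" policy are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaggingRule:
    label: str               # sensitivity label to apply
    rank: int                # higher means more sensitive
    data_classes: frozenset  # any of these detected classes triggers the label


# Hypothetical rules; a real deployment would define its own.
RULES = [
    TaggingRule("Highly Confidential", 3, frozenset({"SSN", "Credit Card Number"})),
    TaggingRule("Confidential", 2, frozenset({"Email Address", "Phone Number"})),
    TaggingRule("Internal", 1, frozenset({"Employee ID"})),
]


def pick_label(detected: set[str], rules=RULES, default: str = "Public") -> str:
    """Return the most sensitive label whose rule matches any detected class."""
    matches = [r for r in rules if r.data_classes & detected]
    if not matches:
        return default
    return max(matches, key=lambda r: r.rank).label


print(pick_label({"Email Address", "SSN"}))
print(pick_label({"Employee ID"}))
print(pick_label(set()))
```

Choosing the highest-ranked match means a file that mixes low and high sensitivity data is always protected at the stricter level.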

Applying Sensitivity Labels to Data Assets in Sentra

In today’s rapidly evolving data security landscape, ensuring that your data is properly classified and protected is crucial. One effective way to achieve this is by applying sensitivity labels to your data assets. Sensitivity labels help ensure that data is handled according to its level of sensitivity, reducing the risk of accidental exposure and enabling compliance with data protection regulations.

Below, we’ll walk you through the necessary steps to automatically apply sensitivity labels to your data assets in Sentra. By following these steps, you can enhance your data governance, improve data security, and maintain clear visibility over your organization's sensitive information.

The process involves three key actions:

- Create Sensitivity Labels: The first step in applying sensitivity labels is creating them within Sentra. These labels allow you to categorize data assets according to various rules and classifications. Once set up, these labels will automatically apply to data assets based on predefined criteria, such as the types of classifications detected within the data. Sensitivity labels help ensure that sensitive information is properly identified and protected.

- Connect Accounts with Data Assets: The next step is to connect your accounts with the relevant data assets. This integration allows Sentra to automatically discover and continuously scan all your data assets, ensuring that no data goes unnoticed. As new data is created or modified, Sentra will promptly detect and categorize it, keeping your data classification up to date and reducing manual efforts.

- Apply Classification Tags: Whenever a data asset is scanned, Sentra will automatically apply classification tags to it, such as data classes, data contexts, and sensitivity labels. These tags are visible in Sentra’s data catalog, giving you a comprehensive overview of your data’s classification status. By applying these tags consistently across all your data assets, you’ll have a clear, automated way to manage sensitive data, ensuring compliance and security.

By following these steps, you can streamline your data classification process, making it easier to protect your sensitive information, improve your data governance practices, and reduce the risk of data breaches.

Applying MPIP Labels

In order to apply Microsoft Purview Information Protection (MPIP) labels based on Sentra sensitivity labels, you are required to follow a few additional steps:

- Set up the Microsoft Purview integration - which will allow Sentra to import and sync MPIP sensitivity labels.

- Create tagging rules - which will allow you to map Sentra sensitivity labels to MPIP sensitivity labels (for example “Very Confidential” in Sentra would be mapped to “ACME - Highly Confidential” in MPIP), and choose to which services this rule would apply (for example, Microsoft 365 and Amazon S3).
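The mapping described in these steps can be pictured as a small rule table. The sketch below uses the example from the text ("Very Confidential" mapped to "ACME - Highly Confidential" for Microsoft 365 and Amazon S3); the dictionary layout and the `mpip_label_for` helper are hypothetical and do not reflect Sentra's or Microsoft Purview's actual configuration format.

```python
from typing import Optional

# Hypothetical in-memory representation of tagging rules.
MAPPING_RULES = [
    {
        "sentra_label": "Very Confidential",
        "mpip_label": "ACME - Highly Confidential",
        "services": {"Microsoft 365", "Amazon S3"},
    },
]


def mpip_label_for(sentra_label: str, service: str,
                   rules=MAPPING_RULES) -> Optional[str]:
    """Return the MPIP label to apply, or None if no rule covers this service."""
    for rule in rules:
        if rule["sentra_label"] == sentra_label and service in rule["services"]:
            return rule["mpip_label"]
    return None


print(mpip_label_for("Very Confidential", "Amazon S3"))
print(mpip_label_for("Very Confidential", "Azure Blob Containers"))
```

Scoping each rule to a set of services keeps a label from being written to platforms where the mapping has not been reviewed.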

Using Sensitivity Labels in Microsoft DLP

Microsoft Purview DLP (as well as all other industry-leading DLP solutions) supports MPIP labels in its policies so admins can easily control and prevent data loss of sensitive data across multiple services and applications. For instance, an MPIP ‘highly confidential’ label may instruct Microsoft Purview DLP to restrict transfer of sensitive data outside a certain geography. Likewise, another label could specify that confidential intellectual property (IP) may not be shared within Teams collaborative workspaces. Labels can also help control access to sensitive data. Organizations can set a rule that grants read permission only for specific tags; for example, only production IAM roles can access production files. Further, for use cases where data is stored in a single store, organizations can estimate the storage cost for each specific tag.

Build a Stronger Foundation with Accurate Data Classification

Effectively tagging sensitive data unlocks significant benefits for organizations, driving improvements across accuracy, efficiency, scalability, and risk management. With precise classification exceeding 95% accuracy and minimal false positives, organizations can confidently label both structured and unstructured data. Automated tagging rules reduce the reliance on manual effort, saving valuable time and resources. Granular, contextual tags enable confident and automated remediation, ensuring operations can scale seamlessly. Additionally, robust data tagging strengthens DLP and compliance strategies by fully leveraging Microsoft Purview’s capabilities. By streamlining these processes, organizations can consistently label and secure data across their entire estate, freeing resources to focus on strategic priorities and innovation.
