Cloud Data Security Learning Center

Data Protection and Classification in Microsoft 365

Imagine the fallout of a single misstep—a phishing scam tricking an employee into sharing sensitive data. The breach doesn’t just compromise information; it shakes trust, tarnishes reputations, and invites compliance penalties. With data breaches on the rise, safeguarding your organization’s Microsoft 365 environment has never been more critical.

Data classification helps prevent such disasters. This article provides a clear roadmap for protecting and classifying Microsoft 365 data. It explores how data is saved and classified, discusses built-in tools for protection, and covers best practices for maintaining Microsoft 365 data protection.

How Is Data Saved and Classified in Microsoft 365?

Microsoft 365 stores data across tools and services. For example, emails are stored in Exchange Online, documents and data for collaboration are found in SharePoint and Teams, and documents or files for individual users are stored in OneDrive.

All of this data is largely stored in an unstructured format typically used for documents and images. This format not only allows organizations to store large volumes of data efficiently; it also enables seamless collaboration across teams and departments. However, as unstructured data cannot be neatly categorized into tables or columns, it becomes cumbersome to discern what data is sensitive and where it is stored.

To address this, Microsoft 365 offers a data classification dashboard that helps classify data of varying levels of sensitivity and data governed by different regulatory compliance frameworks. But how does Microsoft identify sensitive information in unstructured data?

Microsoft employs advanced technologies such as RegEx scans, trainable classifiers, Bloom filters, and data classification graphs to identify and classify data as public, internal, or confidential. Once classified, data protection and governance policies are applied based on sensitivity and retention labels.
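To make the RegEx-scan idea concrete, here is a minimal Java sketch. It is not how Purview works internally. The class name, the three patterns, and the mapping of matches to "confidential", "internal", or "public" are all assumptions made for illustration.

```java
import java.util.regex.Pattern;

// Hypothetical sketch of a regex-based sensitivity scan.
// Patterns and label mapping are illustrative assumptions, not Microsoft's rules.
class RegexSensitivityScanner {
    // Text shaped like a US Social Security number, e.g. 123-45-6789.
    private static final Pattern SSN = Pattern.compile("\\b\\d{3}-\\d{2}-\\d{4}\\b");
    // Sixteen digits in groups of four, optionally separated by spaces or hyphens.
    private static final Pattern CARD = Pattern.compile("\\b(?:\\d{4}[ -]?){3}\\d{4}\\b");
    // An explicit internal-use marker, matched case-insensitively.
    private static final Pattern INTERNAL = Pattern.compile("(?i)\\binternal use only\\b");

    // Returns the most restrictive label whose pattern appears in the text.
    static String classify(String text) {
        if (SSN.matcher(text).find() || CARD.matcher(text).find()) {
            return "confidential";
        }
        if (INTERNAL.matcher(text).find()) {
            return "internal";
        }
        return "public";
    }
}
```

Pattern matching alone tends to produce false positives (any 16-digit number looks like a card number), which is one reason it is typically combined with other techniques such as trainable classifiers.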

Data classification is vital for understanding, protecting, and governing data. With your Microsoft 365 data classified appropriately, you can ensure seamless collaboration without risking data exposure.

Microsoft 365 Data Protection and Classification Tools

Microsoft 365 includes several key tools and frameworks for classifying and securing data. Here are a few.

Microsoft Purview

Microsoft Purview is a cornerstone of data classification and protection within Microsoft 365.

Key Features:

- More than 200 prebuilt classifiers and the ability to create custom classifiers tailored to specific business needs.

- Purview auto-classifies data across Microsoft 365 and other supported apps, such as Adobe Photoshop and Adobe PDF, while users work on them.

- Sensitivity labels that apply encryption, watermarks, and access restrictions to secure sensitive data.

- Double Key Encryption, which protects highly sensitive content with two keys: one held by your organization and one held by Microsoft.

Purview autonomously applies sensitivity labels like "confidential" or "highly confidential" based on preconfigured policies, ensuring optimal access control. These labels persist even when files are shared or converted to other formats, such as from Word to PDF.

Additionally, Purview’s data loss prevention (DLP) policies prevent unauthorized sharing or deletion of sensitive data by flagging and reporting violations in real time. For example, if a sensitive file is shared externally, Purview can immediately block the transfer and alert your security team.

Microsoft Defender

Microsoft Defender for Cloud Apps strengthens security by providing a cloud app discovery window to identify applications accessing data. Once identified, it classifies files within these applications based on sensitivity, applying appropriate protections as per preconfigured policies.

Key Features:

- Data Sensitivity Classification: Defender identifies sensitive files and assigns protection based on sensitivity levels, ensuring compliance and reducing risk. For example, it labels files containing credit card numbers, personal identifiers, or confidential business information with sensitivity classifications like "Highly Confidential."

- Threat Detection and Response: Defender detects known threats targeted at sensitive data in emails, collaboration tools (like SharePoint and Teams), URLs, file attachments, and OneDrive. If an admin account is compromised, Microsoft Defender immediately spots the threat, disables the account, and notifies your IT team to prevent significant damage.

- Automation: Defender automates incident response, ensuring that malicious activities are flagged and remediated promptly.

Intune

Microsoft Intune provides comprehensive device management and data protection, enabling organizations to enforce policies that safeguard sensitive information on both managed and unmanaged smartphones, computers, and other devices.

Key Features:

- Customizable Compliance Policies: Intune allows organizations to enforce device compliance policies that align with internal and regulatory standards. For example, it can block non-compliant devices from accessing sensitive data until issues are resolved.

- Data Access Control: Intune disallows employees from accessing corporate data on compromised devices or through insecure apps, such as those not using encryption for emails.

- Endpoint Security Management: By integrating with Microsoft Defender, Intune provides endpoint protection and automated responses to detected threats, ensuring only secure devices can access your organization’s network.

Intune supports organizations by enabling the creation and enforcement of device compliance policies tailored to both internal and regulatory standards. These policies detect non-compliant devices, issue alerts, and restrict access to sensitive data until compliance is restored. Conditional access ensures that only secure and compliant devices connect to your network.

Intune also supports app protection policies for Microsoft 365-managed apps like Outlook, Word, and Excel. These policies define which apps can access specific data, such as emails, and regulate permissible actions, including copying, pasting, forwarding, and taking screenshots. This layered security approach safeguards critical information while maintaining seamless app functionality.

Does Microsoft have a DLP Solution?

Microsoft 365’s data loss prevention (DLP) policies represent the implementation of the zero-trust framework. These policies aim to prevent oversharing, accidental deletion, and data leaks across Microsoft 365 services, including Exchange Online, SharePoint, Teams, and OneDrive, as well as Windows and macOS devices.

Retention policies, deployed via retention labels, help organizations manage the data lifecycle effectively. These labels ensure that data is retained only as long as necessary to meet compliance requirements, reducing the risks associated with prolonged data storage.

What is the Microsoft 365 Compliance Center?

The Microsoft 365 compliance center offers tools to manage policies and monitor data access, ensuring adherence to regulations. For example, DLP policies allow organizations to define specific automated responses when certain regulatory requirements—like GDPR or HIPAA—are violated.

Microsoft Purview Compliance Portal: This portal ensures sensitive data is classified, stored, retained, and used in adherence to relevant compliance regulations. Meanwhile, Microsoft Purview Information Protection (MPIP) ensures that only authorized users can access sensitive information, whether collaborating on Teams or sharing files in SharePoint. Together, these tools enable secure collaboration while keeping regulatory compliance at the forefront.

12 Best Practices for Microsoft 365 Data Protection and Classification

To achieve effective Microsoft 365 data protection and classification, organizations should follow these steps:

- Create precise labels, tags, and classification policies; don’t rely solely on prebuilt labels and policies, as definitions of sensitive data may vary by context.

- Automate labeling to minimize errors and quickly capture new datasets.

- Establish and enforce data use policies and guardrails automatically to reduce risks of data breaches, compliance failures, and insider threat risks.

- Regularly review and update data classification and usage policies to reflect evolving threats, new data storage, and changing compliance laws. Policies must stay up to date to remain effective.

- Define context-appropriate DLP policies based on your business needs, factoring in remote work, ease of collaboration, regional compliance standards, etc.

- Apply encryption to safeguard data inside and outside your organization.

- Enforce role-based access controls (RBAC) and least privilege principles to ensure users only have access to data and can perform actions within the scope of their roles. This limits the risk of accidental data exposure, deletion, and cyberattacks.

- Create audit trails of user activity around data and maintain version histories to prevent and track data loss.

- Follow the 3-2-1 backup rule: keep three copies of your data, store two on different media, and one offsite.

- Leverage the full suite of Microsoft 365 tools to monitor sensitive data, detect real-time threats, and secure information effectively.

- Promptly resolve detected risks to mitigate attacks early.

- Ensure data protection and classification policies do not impede collaboration to prevent teams from creating shadow data, which puts your organization at risk of data breaches.

For example, consider the third practice in the list above. If a disgruntled employee starts transferring sensitive intellectual property to external devices in preparation for a ransomware attack, having the right data use policies in place will allow your organization to stop the threat before it escalates.
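Some of the practices above can be checked mechanically. As one example, the sketch below tests a set of backup copies against the 3-2-1 rule from the list. The `BackupCopy` record and its fields are hypothetical names chosen for this illustration.

```java
import java.util.List;

// Hypothetical sketch: verify a backup inventory against the 3-2-1 rule.
class BackupRuleCheck {
    // One backup copy: the storage media it lives on and whether it is offsite.
    record BackupCopy(String media, boolean offsite) {}

    // True when there are at least three copies, on at least two distinct
    // media types, and at least one copy is stored offsite.
    static boolean satisfiesThreeTwoOne(List<BackupCopy> copies) {
        long distinctMedia = copies.stream().map(BackupCopy::media).distinct().count();
        boolean anyOffsite = copies.stream().anyMatch(BackupCopy::offsite);
        return copies.size() >= 3 && distinctMedia >= 2 && anyOffsite;
    }
}
```

A check like this could run as part of a regular policy review, so a missing offsite copy is noticed before a restore is needed.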

Microsoft 365 Data Protection and Classification Limitations

Despite Microsoft 365’s array of tools, there are some key gaps. AI/ML-powered data security posture management (DSPM) and data detection and response (DDR) solutions fill these easily.

The top limitations of Microsoft 365 data protection and classification are the following:

- Limitations Handling Large Volumes of Unstructured Data: Purview struggles to automatically classify and apply sensitivity labels to diverse and vast datasets, particularly in Azure services or non-Microsoft clouds.

- Contextless Data Classification: Without considering context, Microsoft Purview’s MPIP can lead to false positives (over-labeling non-sensitive data) or false negatives (missing sensitive data).

- Inconsistent Labeling Across Providers: Microsoft tools are limited to its ecosystem, making it difficult for enterprises using multi-cloud environments to enforce consistent organization-wide labeling.

- Minimal Threat Response Capabilities: Microsoft Defender relies heavily on IT teams for remediation and lacks robust autonomous responses.

- Sporadic Interruption of User Activity: Inaccurate DLP classifications can disrupt legitimate data transfers in collaboration channels, frustrating employees and increasing the risk of shadow IT workarounds.

Sentra Fills the Gap: Protection Measures to Address Microsoft 365 Data Risks

Today’s businesses must get ahead of data risks by instituting Microsoft 365 data protection and classification best practices such as least privilege access and encryption. Otherwise, they risk data exposure, damaging cyberattacks, and hefty compliance fines. However, implementing these best practices depends on accurate and context-sensitive data classification in Microsoft 365.

Sentra’s Cloud-native Data Security Platform enables secure collaboration and file sharing across all Microsoft 365 services including SharePoint, OneDrive, Teams, OneNote, Office, Word, Excel, and more. Sentra provides data access governance, shadow data detection, and privacy audit automation for M365 data. It also evaluates risks and alerts for policy or regulatory violations.

Specifically, Sentra complements Purview in the following ways:

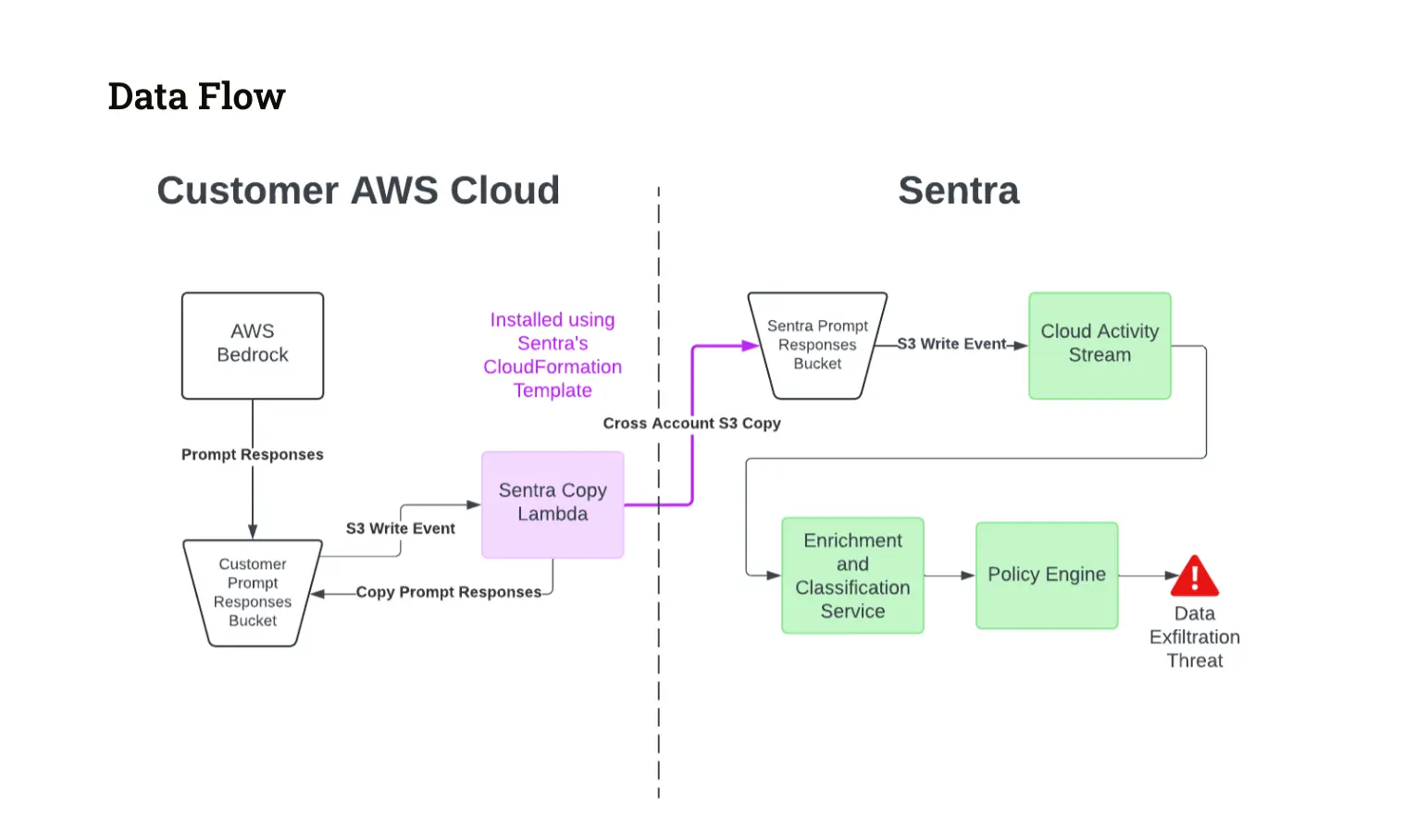

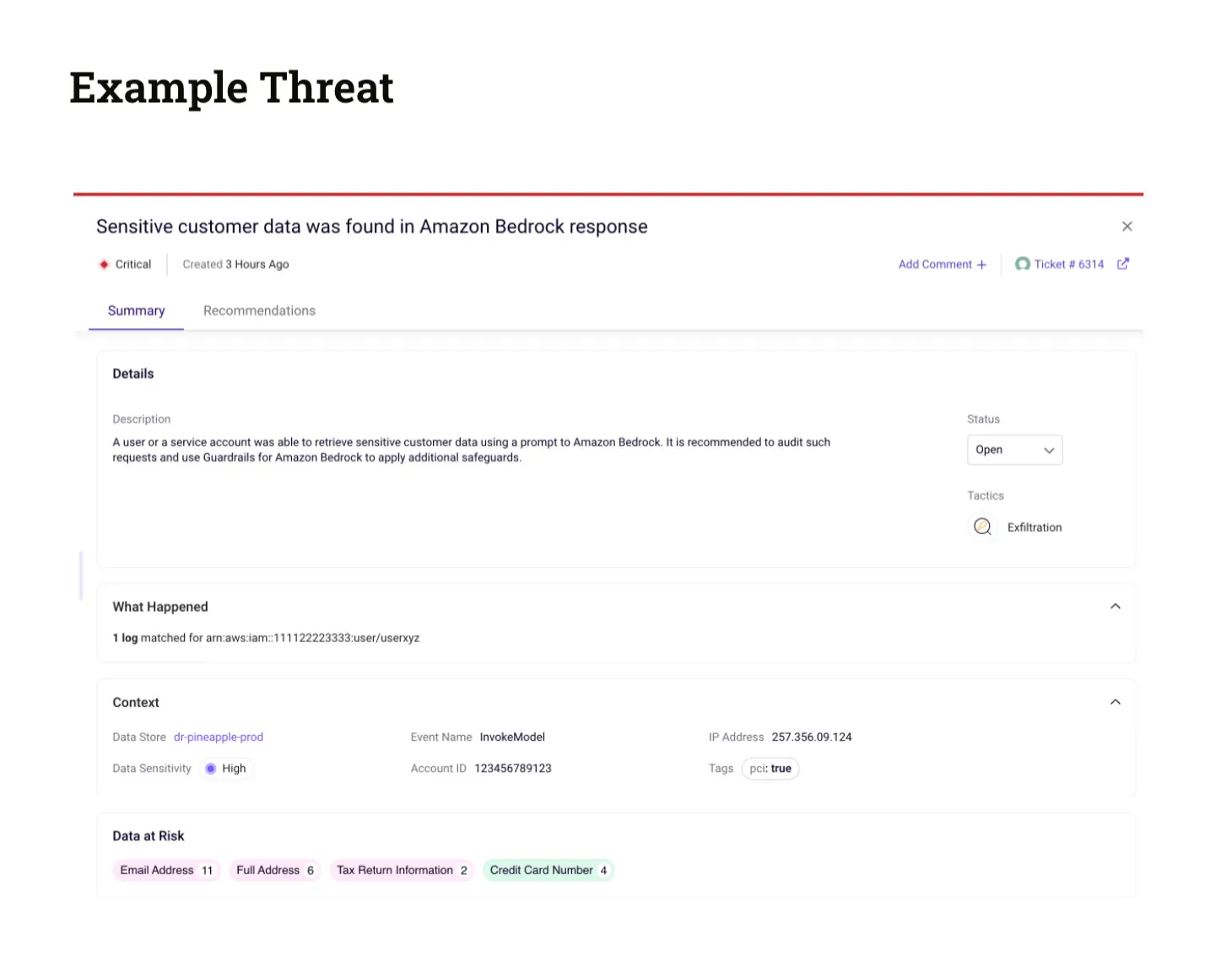

- Sentra Data Detection & Response (DDR): Continuously monitors for threats such as data exfiltration, weakening of data security posture, and other suspicious activities in real time. While Purview Insider Risk Management focuses on M365 applications, Sentra DDR extends these capabilities to Azure and non-Microsoft applications.

- Data Perimeter Protection: Sentra automatically detects and identifies an organization’s data perimeters across M365, Azure, and non-Microsoft clouds. It alerts organizations when sensitive data leaves its boundaries, regardless of how it is copied or exported.

- Shadow Data Reduction: Using context-based analysis powered by Sentra’s DataTreks™, the platform identifies unnecessary shadow data, reducing the attack surface and improving data governance.

- Training Data Monitoring: Sentra monitors training datasets continuously, identifying privacy violations of sensitive PII or real-time threats like training data poisoning or suspicious access.

- Data Access Governance: Sentra adds to Purview’s data catalog by including metadata on users and applications with data access permissions, ensuring better governance.

- Automated Privacy Assessments: Sentra automates privacy evaluations aligned with frameworks like GDPR and CCPA, seamlessly integrating them into Purview’s data catalog.

- Rich Contextual Insights: Sentra delivers detailed data context to understand usage, sensitivity, movement, and unique data types. These insights enable precise risk evaluation, threat prioritization, and remediation, and they can be consumed via an API by DLP systems, SIEMs, and other tools.

By addressing these gaps, Sentra empowers organizations to enhance their Microsoft 365 data protection and classification strategies. Request a demo to experience Sentra’s innovative solutions firsthand.

Create an Effective RFP for a Data Security Platform & DSPM

This RFP Guide is designed to help organizations create their own RFP for selecting Cloud-native Data Security Platform (DSP) and Data Security Posture Management (DSPM) solutions. The purpose is to identify the essential requirements that will enable effective discovery, classification, and protection of sensitive data across complex environments, including public cloud infrastructures and on-premises environments.

Instructions for Vendors

Each section provides essential and recommended requirements to achieve a best practice capability. These have been accumulated over dozens of customer implementations. Customers may also wish to include their own unique requirements specific to their industry or data environment.

1. Data Discovery & Classification

2. Data Access Governance

3. Posture, Risk Assessment & Threat Monitoring

4. Incident Response & Remediation

5. Infrastructure & Deployment

6. Operations & Support

7. Pricing & Licensing

Conclusion

This RFP template is designed to facilitate a structured and efficient evaluation of DSP and DSPM solutions. Vendors are encouraged to provide comprehensive and transparent responses to ensure an accurate assessment of their solution’s capabilities.

Sentra’s cloud-native design combines powerful Data Discovery and Classification, DSPM, DAG, and DDR capabilities into a complete Data Security Platform (DSP). With this, Sentra customers achieve enterprise-scale data protection and do so very efficiently - without creating undue burdens on the personnel who must manage it.

To learn more about Sentra’s DSP, request a demo here and choose a time for a meeting with our data security experts. You can also choose to download the RFP as a pdf.

Best Practices: Automatically Tag and Label Sensitive Data

The Importance of Data Labeling and Tagging

In today's fast-paced business environment, data rarely stays in one place. It moves across devices, applications, and services as individuals collaborate with internal teams and external partners. This mobility is essential for productivity but poses a challenge: how can you ensure your data remains secure and compliant with business and regulatory requirements when it's constantly on the move?

Why Labeling and Tagging Data Matters

Data labeling and tagging provide a critical solution to this challenge. By assigning sensitivity labels to your data, you can define its importance and security level within your organization. These labels act as identifiers that abstract the content itself, enabling you to manage and track the data type without directly exposing sensitive information. With the right labeling, organizations can also control access in real-time.

For example, labeling a document containing social security numbers or credit card information as Highly Confidential allows your organization to acknowledge the data's sensitivity and enforce appropriate protections, all without needing to access or expose the actual contents.

Why Sentra’s AI-Based Classification Is a Game-Changer

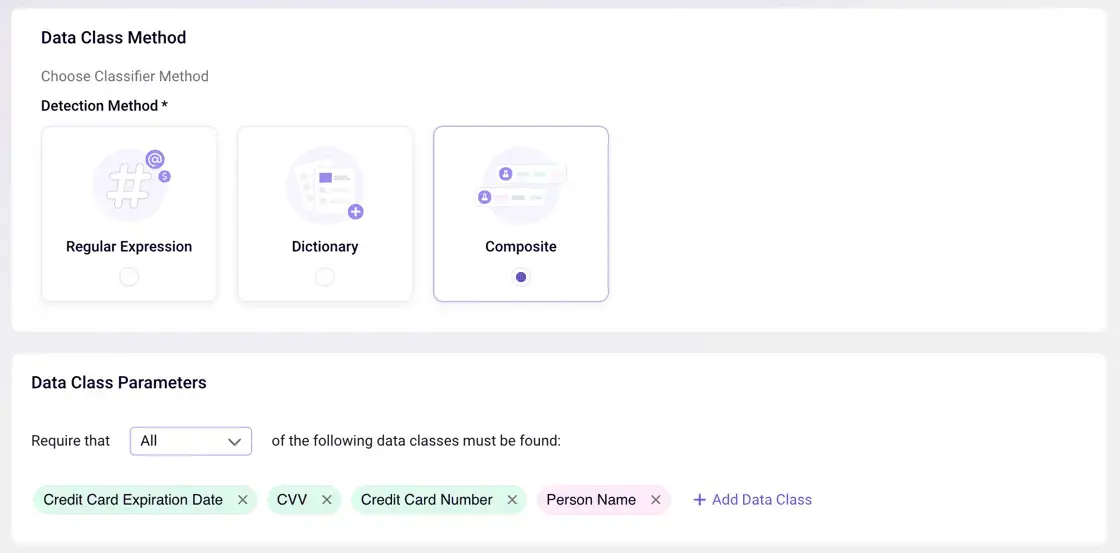

Sentra’s AI-based classification technology enhances data security by ensuring that the sensitivity labels are applied with exceptional accuracy. Leveraging large language models (LLMs), Sentra enhances data classification with context-aware capabilities, such as:

- Detecting the geographic residency of data subjects.

- Differentiating between Customer Data and Employee Data.

- Identifying and treating Synthetic or Mock Data differently from real sensitive data.

This context-based approach eliminates the inefficiencies of manual processes and seamlessly scales to meet the demands of modern, complex data environments. By integrating AI into the classification process, Sentra empowers teams to confidently and consistently protect their data—ensuring sensitive information remains secure, no matter where it resides or how it is accessed.

Benefits of Labeling and Tagging in Sentra

Sentra enhances your ability to classify and secure data by automatically applying sensitivity labels to data assets. By automating this process, Sentra removes the manual effort required from each team member—achieving accuracy that’s only possible through a deep understanding of what data is sensitive and its broader context.

Here are some key benefits of labeling and tagging in Sentra:

- Enhanced Security and Loss Prevention: Sentra’s integration with Data Loss Prevention (DLP) solutions prevents the loss of sensitive and critical data by applying the right sensitivity labels. Sentra’s granular, contextual tags help to provide the detail necessary to action remediation automatically so that operations can scale.

- Easily Build Your Tagging Rules: Sentra’s Intuitive Rule Builder allows you to automatically apply sensitivity labels to assets based on your pre-existing tagging rules, or to define new ones via the builder UI (see screen below). Sentra imports discovered Microsoft Purview Information Protection (MPIP) labels to speed this process.

- Labels Move with the Data: Sensitivity labels created in Sentra can be mapped to Microsoft Purview Information Protection (MPIP) labels and applied to various applications like SharePoint, OneDrive, Teams, Amazon S3, and Azure Blob Containers. Once applied, labels are stored as metadata and travel with the file or data wherever it goes, ensuring consistent protection across platforms and services.

- Automatic Labeling: Sentra allows for the automatic application of sensitivity labels based on the data's content. Auto-tagging rules, configured for each sensitivity label, determine which label should be applied during scans for sensitive information.

- Support for Structured and Unstructured Data: Sentra enables labeling for files stored in cloud environments such as Amazon S3 or EBS volumes and for database columns in structured data environments like Amazon RDS.

By implementing these labeling practices, your organization can track, manage, and protect data with ease while maintaining compliance and safeguarding sensitive information. Whether collaborating across services or storing data in diverse cloud environments, Sentra ensures your labels and protection follow the data wherever it goes.

Applying Sensitivity Labels to Data Assets in Sentra

In today’s rapidly evolving data security landscape, ensuring that your data is properly classified and protected is crucial. One effective way to achieve this is by applying sensitivity labels to your data assets. Sensitivity labels help ensure that data is handled according to its level of sensitivity, reducing the risk of accidental exposure and enabling compliance with data protection regulations.

Below, we’ll walk you through the necessary steps to automatically apply sensitivity labels to your data assets in Sentra. By following these steps, you can enhance your data governance, improve data security, and maintain clear visibility over your organization's sensitive information.

The process involves three key actions:

- Create Sensitivity Labels: The first step in applying sensitivity labels is creating them within Sentra. These labels allow you to categorize data assets according to various rules and classifications. Once set up, these labels will automatically apply to data assets based on predefined criteria, such as the types of classifications detected within the data. Sensitivity labels help ensure that sensitive information is properly identified and protected.

- Connect Accounts with Data Assets: The next step is to connect your accounts with the relevant data assets. This integration allows Sentra to automatically discover and continuously scan all your data assets, ensuring that no data goes unnoticed. As new data is created or modified, Sentra will promptly detect and categorize it, keeping your data classification up to date and reducing manual efforts.

- Apply Classification Tags: Whenever a data asset is scanned, Sentra will automatically apply classification tags to it, such as data classes, data contexts, and sensitivity labels. These tags are visible in Sentra’s data catalog, giving you a comprehensive overview of your data’s classification status. By applying these tags consistently across all your data assets, you’ll have a clear, automated way to manage sensitive data, ensuring compliance and security.

By following these steps, you can streamline your data classification process, making it easier to protect your sensitive information, improve your data governance practices, and reduce the risk of data breaches.

Applying MPIP Labels

In order to apply Microsoft Purview Information Protection (MPIP) labels based on Sentra sensitivity labels, you are required to follow a few additional steps:

- Set up the Microsoft Purview integration - which will allow Sentra to import and sync MPIP sensitivity labels.

- Create tagging rules - which will allow you to map Sentra sensitivity labels to MPIP sensitivity labels (for example “Very Confidential” in Sentra would be mapped to “ACME - Highly Confidential” in MPIP), and choose to which services this rule would apply (for example, Microsoft 365 and Amazon S3).
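The mapping described in these steps can be pictured as a small lookup. The sketch below is a conceptual model only and does not use Sentra's or Microsoft's actual API. The `TaggingRule` record and the `resolve` method are hypothetical names, and the labels and services are taken from the example above.

```java
import java.util.Optional;
import java.util.Set;

// Hypothetical model of a tagging rule that maps a Sentra label to an MPIP label.
class TaggingRuleSketch {
    // The Sentra label to match, the MPIP label to apply, and the services in scope.
    record TaggingRule(String sentraLabel, String mpipLabel, Set<String> services) {}

    // Returns the MPIP label to apply, or empty when the rule does not cover the asset.
    static Optional<String> resolve(TaggingRule rule, String assetSentraLabel, String assetService) {
        boolean matches = rule.sentraLabel().equals(assetSentraLabel)
                && rule.services().contains(assetService);
        return matches ? Optional.of(rule.mpipLabel()) : Optional.empty();
    }
}
```

With a rule of `("Very Confidential", "ACME - Highly Confidential", {"Microsoft 365", "Amazon S3"})`, an asset in Amazon S3 labeled "Very Confidential" resolves to the MPIP label, while the same asset in a service outside the rule's scope resolves to nothing.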

Using Sensitivity Labels in Microsoft DLP

Microsoft Purview DLP (as well as all other industry-leading DLP solutions) supports MPIP labels in its policies so admins can easily control and prevent data loss of sensitive data across multiple services and applications. For instance, an MPIP ‘highly confidential’ label may instruct Microsoft Purview DLP to restrict transfer of sensitive data outside a certain geography. Likewise, another similar label could instruct that confidential intellectual property (IP) is not allowed to be shared within Teams collaborative workspaces.

Labels can be used to help control access to sensitive data as well. Organizations can set a rule with read permission only for specific tags. For example, only production IAM roles can access production files. Further, for use cases where data is stored in a single store, organizations can estimate the storage cost for each specific tag.

Build a Stronger Foundation with Accurate Data Classification

Effectively tagging sensitive data unlocks significant benefits for organizations, driving improvements across accuracy, efficiency, scalability, and risk management. With precise classification exceeding 95% accuracy and minimal false positives, organizations can confidently label both structured and unstructured data. Automated tagging rules reduce the reliance on manual effort, saving valuable time and resources. Granular, contextual tags enable confident and automated remediation, ensuring operations can scale seamlessly. Additionally, robust data tagging strengthens DLP and compliance strategies by fully leveraging Microsoft Purview’s capabilities. By streamlining these processes, organizations can consistently label and secure data across their entire estate, freeing resources to focus on strategic priorities and innovation.

PII Compliance Checklist: 2025 Requirements & Best Practices

What is PII Compliance?

In our contemporary digital landscape, where information flows seamlessly through the vast network of the internet, protecting sensitive data has become crucial. Personally Identifiable Information (PII), encompassing data that can be utilized to identify an individual, lies at the core of this concern. PII compliance stands as the vigilant guardian, the fortification that organizations adopt to ensure the secure handling and safeguarding of this invaluable asset.

In recent years, the frequency and sophistication of cyber threats have surged, making the need for robust protective measures more critical than ever. PII compliance is not merely a legal obligation; it is strategically essential for businesses seeking to instill trust, maintain integrity, and protect their customers and stakeholders from the perils of identity theft and data breaches.

Sensitive vs. Non-Sensitive PII Examples

Before delving into the intricacies of PII compliance, one must navigate the nuanced waters that distinguish sensitive from non-sensitive PII. The former comprises information of profound consequence – Social Security numbers, financial account details, and health records. Mishandling such data could have severe repercussions.

On the other hand, non-sensitive PII includes less critical information like names, addresses, and phone numbers. The ability to discern between these two categories is fundamental to tailoring protective measures effectively.

This table provides a clear visual distinction between sensitive and non-sensitive PII, illustrating the types of information that fall into each category.

The Need for Robust PII Compliance

The need for PII compliance is propelled by the escalating threats of data breaches and identity theft in the digital realm. Cybercriminals, armed with advanced techniques, continuously evolve their strategies, making it crucial for organizations to fortify their defenses. Implementing PII compliance, including robust Data Security Posture Management (DSPM), not only acts as a shield against potential risks but also serves as a foundation for building trust among customers, stakeholders, and regulatory bodies. DSPM reduces data breaches, providing a proactive approach to safeguarding sensitive information and bolstering the overall security posture of an organization.

PII Compliance Checklist

As we delve into the intricacies of safeguarding sensitive data through PII compliance, it becomes imperative to embrace a proactive and comprehensive approach. The PII Compliance Checklist serves as a navigational guide through the complex landscape of data protection, offering a meticulous roadmap for organizations to fortify their digital defenses.

From the initial steps of discovering, identifying, classifying, and categorizing PII to the formulation of a compliance-based PII policy and the implementation of cutting-edge data security measures - this checklist encapsulates the essence of responsible data stewardship. Each item on the checklist acts as a strategic layer, collectively forming an impenetrable shield against the evolving threats of data breaches and identity theft.

1. Discover, Identify, Classify, and Categorize PII

The cornerstone of PII compliance lies in a thorough understanding of your data landscape. Conducting a comprehensive audit becomes the backbone of this process. The journey begins with a meticulous effort to discover the exact locations where PII resides within your organization's data repositories.

Identifying the diverse types of information collected is equally important, as is the subsequent classification of data into sensitive and non-sensitive categories. Categorization, based on varying levels of confidentiality, forms the final layer, establishing a robust foundation for effective PII compliance.
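As a small illustration of the classification step, the Java sketch below sorts discovered PII types into the sensitive and non-sensitive groups described earlier (Social Security numbers, financial account details, and health records versus names, addresses, and phone numbers). The enum names and the "most sensitive type wins" rule are assumptions for this example.

```java
import java.util.EnumSet;
import java.util.Set;

// Hypothetical sketch: categorize a data store by the PII types discovered in it.
class PiiCategorizer {
    enum PiiType { SSN, FINANCIAL_ACCOUNT, HEALTH_RECORD, NAME, ADDRESS, PHONE_NUMBER }
    enum Sensitivity { SENSITIVE, NON_SENSITIVE, NONE }

    // PII types treated as sensitive in this sketch.
    private static final Set<PiiType> SENSITIVE_TYPES =
            EnumSet.of(PiiType.SSN, PiiType.FINANCIAL_ACCOUNT, PiiType.HEALTH_RECORD);

    // Assumed rule: a store is as sensitive as the most sensitive PII type found in it.
    static Sensitivity categorize(Set<PiiType> found) {
        if (found.isEmpty()) {
            return Sensitivity.NONE;
        }
        for (PiiType type : found) {
            if (SENSITIVE_TYPES.contains(type)) {
                return Sensitivity.SENSITIVE;
            }
        }
        return Sensitivity.NON_SENSITIVE;
    }
}
```

In practice the set of discovered types would come from a scan of each repository; here it is simply passed in.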

2. Create a Compliance-Based PII Policy

In the intricate tapestry of data protection, the formulation of a compliance-based PII policy emerges as a linchpin. This policy serves as the guiding document, articulating the purpose behind the collection of PII, establishing the legal basis for processing, and delineating the measures implemented to safeguard this information.

The clarity and precision of this policy are paramount, ensuring that every employee is not only aware of its existence but also adheres to its principles. It becomes the ethical compass that steers the organization through the complexities of data governance.

The Java code snippet represents a simplified PII policy class. It includes fields for the purpose of collecting PII, legal basis, and protection measures. The enforcePolicy method could be used to validate data against the policy.
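A minimal sketch of such a class might look like the following. The field types, the constructor, and the rule inside `enforcePolicy` (matching purpose plus all required protection measures applied) are assumptions for illustration, not a prescribed implementation.

```java
import java.util.List;
import java.util.Set;

// Illustrative sketch only: field types and the enforcement rule are assumptions.
class PiiPolicy {
    private final String purpose;                   // why the PII is collected
    private final String legalBasis;                // e.g. consent or contract
    private final List<String> protectionMeasures;  // safeguards the policy requires

    PiiPolicy(String purpose, String legalBasis, List<String> protectionMeasures) {
        this.purpose = purpose;
        this.legalBasis = legalBasis;
        this.protectionMeasures = List.copyOf(protectionMeasures);
    }

    String getPurpose() { return purpose; }
    String getLegalBasis() { return legalBasis; }
    List<String> getProtectionMeasures() { return protectionMeasures; }

    // Hypothetical check: data complies when it is used for the declared purpose
    // and every protection measure the policy requires has been applied to it.
    boolean enforcePolicy(String statedPurpose, Set<String> appliedMeasures) {
        return purpose.equals(statedPurpose)
                && appliedMeasures.containsAll(protectionMeasures);
    }
}
```

For instance, a policy created with purpose "billing" and measures "encryption" and "access control" would reject data used for "marketing", and would also reject billing data that has only been encrypted.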

3. Implement Data Security With the Right Tools

Arming your organization with cutting-edge data security tools and technologies is the next critical stride in the journey of PII compliance. Encryption, access controls, and secure transmission protocols form the arsenal against potential threats, safeguarding various types of sensitive data.

The emphasis lies not only on adopting these measures but also on the proactive and regular updating and patching of software to address vulnerabilities, ensuring a dynamic defense against evolving cyber threats.

The JavaScript code snippet provides examples of implementing data security measures, including data encryption, access controls, and secure transmission.

4. Practice IAM

Identity and Access Management (IAM) emerges as the sentinel standing guard over sensitive data. The implementation of IAM practices should be designed not only to restrict unauthorized access but also to regularly review and update user access privileges. The alignment of these privileges with job roles and responsibilities becomes the anchor, ensuring that access is not only secure but also purposeful.
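One way to picture the periodic access review is as a comparison between what a user has been granted and what their role allows. The Java sketch below assumes a simple role-to-permissions map. The class, method, and permission strings are hypothetical and not tied to any particular IAM product.

```java
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

// Hypothetical sketch of an access review: find privileges that exceed a user's role.
class AccessReview {
    // Returns the privileges a user holds beyond what their job role allows.
    // An unknown role allows nothing, so every granted privilege is reported.
    static Set<String> excessPrivileges(Set<String> granted, String role,
                                        Map<String, Set<String>> rolePermissions) {
        Set<String> excess = new HashSet<>(granted);
        excess.removeAll(rolePermissions.getOrDefault(role, Set.of()));
        return excess;
    }
}
```

Any non-empty result is a candidate for revocation during the review, which keeps access aligned with job responsibilities.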

5. Monitor and Respond

In the ever-shifting landscape of digital security, continuous monitoring becomes the heartbeat of effective PII compliance. Alongside monitoring, organizations should establish an incident response plan, a blueprint for swift and decisive action in the aftermath of a breach. The timely response becomes the bulwark against the cascading impacts of a data breach.

6. Regularly Assess Your Organization’s PII

The journey towards PII compliance is not a one-time endeavor but an ongoing commitment, making periodic assessments of an organization's PII practices a critical task. Internal audits and risk assessments become the instruments of scrutiny, identifying areas for improvement and addressing emerging threats. It is a proactive stance that ensures the adaptive evolution of PII compliance strategies in tandem with the ever-changing threat landscape.

7. Keep Your Privacy Policy Updated

In the dynamic sphere of technology and regulations, the privacy policy becomes the living document that shapes an organization's commitment to data protection. It is of vital importance to regularly review and update the privacy policy. It is not merely a legal requirement but a demonstration of the organization's responsiveness to the evolving landscape, aligning data protection practices with the latest compliance requirements and technological advancements.

A short script can support this by flagging a privacy policy whose last review is older than the agreed review interval.
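A minimal Python sketch of such a check follows. The one-year interval and the function name `review_overdue` are assumptions for illustration, not a regulatory requirement.

```python
from datetime import date, timedelta

# Assumed review cadence; choose the interval your organization has agreed on.
REVIEW_INTERVAL = timedelta(days=365)


def review_overdue(last_reviewed: date, today: date,
                   interval: timedelta = REVIEW_INTERVAL) -> bool:
    """Return True when the policy has gone longer than `interval` without review."""
    return today - last_reviewed > interval
```

A scheduled job could call `review_overdue(last_reviewed, date.today())` and open a ticket for the policy owner when it returns `True`.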

8. Prepare a Data Breach Response Plan

Anticipation and preparedness are the hallmarks of resilient organizations. Despite the most stringent preventive measures, the possibility of a data breach looms, so a documented response plan is essential. Beyond the blueprint, practice and regularly update this plan, transforming it from a theoretical document into a well-oiled machine ready to mitigate the impact of a breach through strategic communication, legal considerations, and effective remediation steps.

Key PII Compliance Standards

Understanding the regulatory landscape is crucial for PII compliance. Different regions have distinct compliance standards and data privacy regulations that organizations must adhere to. Here are some key standards:

- United States Data Privacy Regulations: In the United States, organizations need to comply with various federal and state regulations. Examples include the Health Insurance Portability and Accountability Act (HIPAA) for healthcare information and the Gramm-Leach-Bliley Act (GLBA) for financial data.

- Europe Data Privacy Regulations: The European Union operates under the General Data Protection Regulation (GDPR), a comprehensive framework that sets strict standards for the processing and protection of personal data. GDPR compliance is essential for organizations that handle the personal data of individuals in the EU.

Conclusion

PII compliance is not just a regulatory requirement; it is a fundamental aspect of responsible and ethical business practices. Protecting sensitive data through a robust compliance framework not only mitigates the risk of data breaches but also fosters trust among customers and stakeholders. By following a comprehensive PII compliance checklist and staying informed about relevant standards, organizations can navigate the complex landscape of data protection successfully. As technology continues to advance, a proactive and adaptive approach to PII compliance is key to securing the future of sensitive data protection.

If you want to learn more about Sentra's Data Security Platform and how you can use a strong PII compliance framework to protect sensitive data, reduce breach risks, and build trust with customers and stakeholders, request a demo today.

Cloud Vulnerability Management Best Practices for 2025

What is Cloud Vulnerability Management?

Cloud vulnerability management is a proactive approach to identifying and mitigating security vulnerabilities within your cloud infrastructure, enhancing cloud data security. It involves the systematic assessment of cloud resources and applications to pinpoint potential weaknesses that cybercriminals might exploit.

By addressing these vulnerabilities, you reduce the risk of data breaches, service interruptions, and other security incidents that could have a significant impact on your organization.

Common Vulnerabilities in Cloud Security

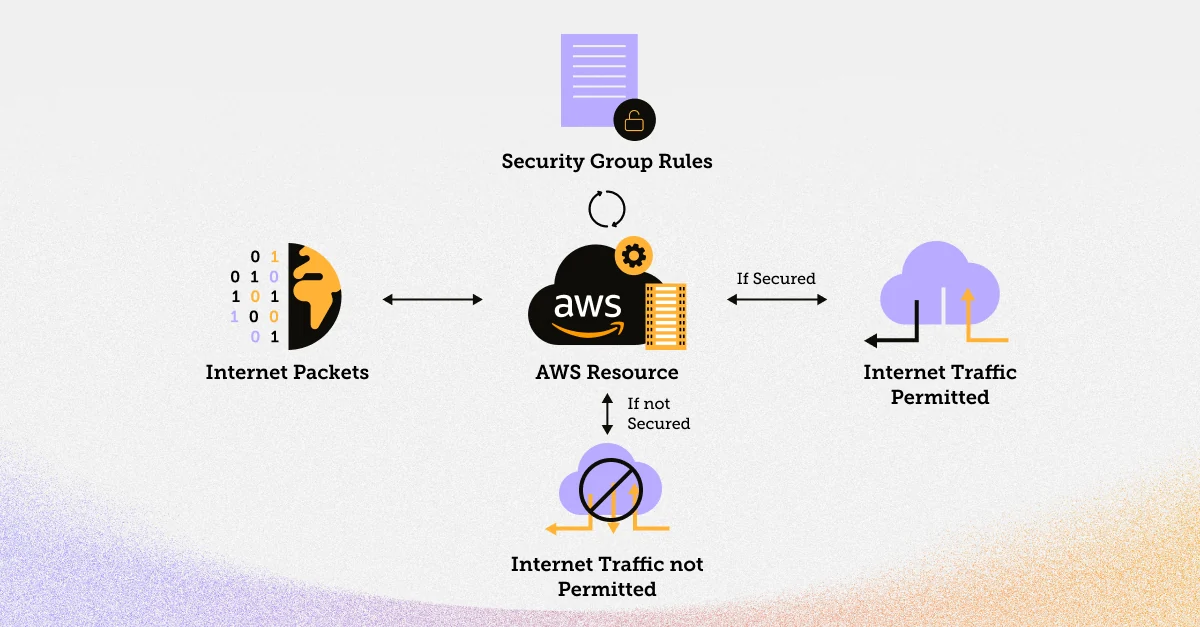

Before diving into the details of cloud vulnerability management, it's essential to understand the types of vulnerabilities that can affect your cloud environment. Here are some common vulnerabilities that private cloud security experts encounter:

Vulnerable APIs

Application Programming Interfaces (APIs) are the backbone of many cloud services. They allow applications to communicate and interact with the cloud infrastructure. However, if not adequately secured, APIs can be an entry point for cyberattacks. Insecure API endpoints, insufficient authentication, and improper data handling can all lead to vulnerabilities.

Misconfigurations

Misconfigurations are one of the leading causes of security breaches in the cloud. These can range from overly permissive access control policies to improperly configured firewall rules. Misconfigurations may leave your data exposed or allow unauthorized access to resources.
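To make the firewall example concrete, the following Python sketch flags rules that open sensitive ports to the whole internet. The rule format (a dict with `source`, `port`, and `action`) and the port list are assumptions for illustration; real cloud providers expose rules in their own schemas.

```python
import ipaddress

# Ports that should rarely be reachable from anywhere: SSH, RDP, PostgreSQL.
SENSITIVE_PORTS = {22, 3389, 5432}


def is_overly_permissive(rule: dict) -> bool:
    """Flag allow rules that expose a sensitive port to every address.

    A prefix length of 0 (0.0.0.0/0 or ::/0) means "any source".
    """
    network = ipaddress.ip_network(rule["source"])
    open_to_world = network.prefixlen == 0
    return (rule["action"] == "allow"
            and open_to_world
            and rule["port"] in SENSITIVE_PORTS)


rules = [
    {"source": "0.0.0.0/0", "port": 22, "action": "allow"},
    {"source": "10.0.0.0/8", "port": 22, "action": "allow"},
    {"source": "0.0.0.0/0", "port": 443, "action": "allow"},
]
flagged = [rule for rule in rules if is_overly_permissive(rule)]
```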

Data Theft or Loss

Data breaches can result from poor data handling practices, encryption failures, or a lack of proper data access controls. Stolen or compromised data can lead to severe consequences, including financial losses and damage to an organization's reputation.

Poor Access Management

Inadequate access controls can lead to unauthorized users gaining access to your cloud resources. This vulnerability can result from over-privileged user accounts, ineffective role-based access control (RBAC), or lack of multi-factor authentication (MFA).

Non-Compliance

Non-compliance with regulatory standards and industry best practices can lead to vulnerabilities. Failing to meet specific security requirements can result in fines, legal actions, and a damaged reputation.

Understanding these vulnerabilities is crucial for effective cloud vulnerability management. Once you can recognize these weaknesses, you can take steps to mitigate them.

Cloud Vulnerability Assessment and Mitigation

Now that you're familiar with common cloud vulnerabilities, it's essential to know how to mitigate them effectively. Mitigation involves a combination of proactive measures to reduce the risk and the potential impact of security issues.

Here are some steps to consider:

- Regular Vulnerability Scanning: Implement a robust vulnerability scanning process that identifies and assesses vulnerabilities within your cloud environment. Use automated tools that can detect misconfigurations, outdated software, and other potential weaknesses.

- Access Control: Implement strong access controls to ensure that only authorized users have access to your cloud resources. Enforce the principle of least privilege, providing users with the minimum level of access necessary to perform their tasks.

- Configuration Management: Regularly review and update your cloud configurations to ensure they align with security best practices. Tools like Infrastructure as Code (IaC) and Configuration Management Databases (CMDBs) can help maintain consistency and security.

- Patch Management: Keep your cloud infrastructure up to date by applying patches and updates promptly. Vulnerabilities in the underlying infrastructure can be exploited by attackers, so staying current is crucial.

- Encryption: Use encryption to protect data both at rest and in transit. Ensure that sensitive information is adequately encrypted, and use strong encryption protocols and algorithms.

- Monitoring and Incident Response: Implement comprehensive monitoring and incident response capabilities to detect and respond to security incidents in real time. Early detection can minimize the impact of a breach.

- Security Awareness Training: Train your team on security best practices and educate them about potential risks and how to identify and report security incidents.
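The patch management item in the list above can be sketched as a comparison of installed versions against the minimum patched version for each package. The package names and version numbers here are hypothetical, and the parser assumes simple dotted numeric versions.

```python
# Hypothetical minimum versions that contain the relevant security fixes.
MINIMUM_PATCHED = {
    "example-web": "1.10.0",
    "example-db": "4.2.7",
}


def parse_version(text: str) -> tuple:
    """Turn '1.10.0' into (1, 10, 0) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def needs_patch(package: str, installed: str) -> bool:
    """Return True when the installed version is older than the patched one."""
    minimum = MINIMUM_PATCHED.get(package)
    if minimum is None:
        return False  # no known minimum for this package
    return parse_version(installed) < parse_version(minimum)
```

Comparing tuples rather than raw strings matters: as strings, "1.9.2" sorts after "1.10.0", which would hide an outdated package.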

Key Features of Cloud Vulnerability Management

Effective cloud vulnerability management provides several key benefits that are essential for securing your cloud environment. Let's explore these features in more detail:

Better Security

Cloud vulnerability management ensures that your cloud environment is continuously monitored for vulnerabilities. By identifying and addressing these weaknesses, you reduce the attack surface and lower the risk of data breaches or other security incidents. This proactive approach to security is essential in an ever-evolving threat landscape.

Cost-Effective

By preventing security incidents and data breaches, cloud vulnerability management helps you avoid potentially significant financial losses and reputational damage. The cost of implementing a vulnerability management system is often far less than the potential costs associated with a security breach.

Highly Preventative

Vulnerability management is a proactive and preventive security measure. By addressing vulnerabilities before they can be exploited, you reduce the likelihood of a security incident occurring. This preventative approach is far more effective than reactive measures.

Time-Saving

Cloud vulnerability management automates many aspects of the security process. This automation reduces the time required for routine security tasks, such as vulnerability scanning and reporting. As a result, your security team can focus on more strategic and complex security challenges.

Steps in Implementing Cloud Vulnerability Management

Implementing cloud vulnerability management is a systematic process that involves several key steps. Let's break down these steps for a better understanding:

Identification of Issues

The first step in implementing cloud vulnerability management is identifying potential vulnerabilities within your cloud environment. This involves conducting regular vulnerability scans to discover security weaknesses.

Risk Assessment

After identifying vulnerabilities, you need to assess their risk. Not all vulnerabilities are equally critical. Risk assessment helps prioritize which vulnerabilities to address first based on their potential impact and likelihood of exploitation.
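One common way to express this prioritization is a simple risk score of impact multiplied by likelihood. The 1 to 5 scales and the `Finding` class below are assumptions for this sketch; many teams use richer models such as CVSS-based scoring.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    name: str
    impact: int      # 1 (minor) to 5 (severe)
    likelihood: int  # 1 (unlikely) to 5 (expected)

    @property
    def risk(self) -> int:
        return self.impact * self.likelihood


def prioritize(findings: list) -> list:
    """Order findings from highest to lowest risk score."""
    return sorted(findings, key=lambda finding: finding.risk, reverse=True)


queue = prioritize([
    Finding("public storage bucket", impact=5, likelihood=4),
    Finding("outdated test server", impact=2, likelihood=3),
    Finding("admin account without MFA", impact=5, likelihood=3),
])
```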

Vulnerabilities Remediation

Remediation involves taking action to fix or mitigate the identified vulnerabilities. This step may include applying patches, reconfiguring cloud resources, or implementing access controls to reduce the attack surface.

Vulnerability Assessment Report

Documenting the entire vulnerability management process is crucial for compliance and transparency. Create a vulnerability assessment report that details the findings, risk assessments, and remediation efforts.
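A report like this can be generated automatically from scan results. The sketch below assumes each finding is a dict with `name`, `risk`, and `status` keys; the field names are illustrative rather than a standard format.

```python
import json
from datetime import date


def build_report(findings: list, generated_on: date) -> str:
    """Serialize findings into a JSON report, highest risk first."""
    open_findings = [f for f in findings if f["status"] == "open"]
    report = {
        "generated_on": generated_on.isoformat(),
        "total_findings": len(findings),
        "open_findings": len(open_findings),
        "findings": sorted(findings, key=lambda f: f["risk"], reverse=True),
    }
    return json.dumps(report, indent=2)


report_json = build_report(
    [
        {"name": "outdated test server", "risk": 6, "status": "remediated"},
        {"name": "public storage bucket", "risk": 20, "status": "open"},
    ],
    generated_on=date(2025, 1, 15),
)
```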

Re-Scanning

The final step is to re-scan your cloud environment periodically. New vulnerabilities may emerge, and existing vulnerabilities may reappear. Regular re-scanning ensures that your cloud environment remains secure over time.
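Comparing consecutive scans is what makes re-scanning actionable. In this sketch, each scan is a set of finding identifiers, and `previously_fixed` is an assumed record of findings that were remediated in the past; the identifiers are made up.

```python
def compare_scans(previous: set, current: set, previously_fixed: set) -> dict:
    """Classify findings by how they changed between two scans."""
    appeared = current - previous
    return {
        "new": appeared - previously_fixed,         # never seen before
        "reappeared": appeared & previously_fixed,  # fixed once, now back
        "fixed": previous - current,                # gone since the last scan
    }


changes = compare_scans(
    previous={"FINDING-1", "FINDING-2"},
    current={"FINDING-2", "FINDING-3", "FINDING-4"},
    previously_fixed={"FINDING-4"},
)
```

Reappearing findings deserve particular attention, since they often indicate a configuration that drifts back after being fixed.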

By following these steps, you establish a robust cloud vulnerability management program that helps secure your cloud environment effectively.

Challenges with Cloud Vulnerability Management

While cloud vulnerability management offers many advantages, it also comes with its own set of challenges.

Cloud Vulnerability Management Best Practices

To overcome the challenges and maximize the benefits of cloud vulnerability management, consider these best practices:

- Automation: Implement automated vulnerability scanning and remediation processes to save time and reduce the risk of human error.

- Regular Training: Keep your security team well-trained and updated on the latest cloud security best practices.

- Scalability: Choose a vulnerability management solution that can scale with your cloud environment.

- Prioritization: Use risk assessments to prioritize the remediation of vulnerabilities effectively.

- Documentation: Maintain thorough records of your vulnerability management efforts, including assessment reports and remediation actions.

- Collaboration: Foster collaboration between your security team and cloud administrators to ensure effective vulnerability management.

- Compliance Check: Regularly verify your cloud environment's compliance with relevant standards and regulations.

Tools to Help Manage Cloud Vulnerabilities

To assist you in your cloud vulnerability management efforts, there are several tools available. These tools offer features for vulnerability scanning, risk assessment, and remediation.

Here are some popular options:

Sentra: Sentra is a cloud-based data security platform that provides visibility, assessment, and remediation for data security. It can be used to discover and classify sensitive data, analyze data security controls, and automate alerts in cloud data stores, IaaS, PaaS, and production environments.

Tenable Nessus: A widely-used vulnerability scanner that provides comprehensive vulnerability assessment and prioritization.

Qualys Vulnerability Management: Offers vulnerability scanning, risk assessment, and compliance management for cloud environments.

AWS Config: Amazon Web Services (AWS) provides AWS Config, as well as other AWS cloud security tools, to help you assess, audit, and evaluate the configurations of your AWS resources.

Microsoft Defender for Cloud: Formerly Azure Security Center, this Microsoft Azure service offers continuous monitoring, threat detection, and vulnerability assessment.

Web Security Scanner: Formerly Google Cloud Security Scanner, this Google Cloud tool scans your web applications for vulnerabilities.

OpenVAS: An open-source vulnerability scanner that can be used to assess the security of your cloud infrastructure.

Choosing the right tool depends on your specific cloud environment, needs, and budget. Be sure to evaluate the features and capabilities of each tool to find the one that best fits your requirements.

Conclusion

In an era of increasing cyber threats and data breaches, cloud vulnerability management is a vital practice to secure your cloud environment. By understanding common cloud vulnerabilities, implementing effective mitigation strategies, and following best practices, you can significantly reduce the risk of security incidents. Embracing automation and utilizing the right tools can streamline the vulnerability management process, making it a manageable and cost-effective endeavor. Remember that security is an ongoing effort, and regular vulnerability scanning, risk assessment, and remediation are crucial for maintaining the integrity and safety of your cloud infrastructure. With a robust cloud vulnerability management program in place, you can confidently leverage the benefits of the cloud while keeping your data and assets secure.

If you want to learn more about how you can implement a robust cloud vulnerability management program to confidently harness the power of the cloud while keeping your data and assets secure, request a demo today.

Achieving Exabyte Scale Enterprise Data Security

The Growing Challenge for Enterprise Data Security

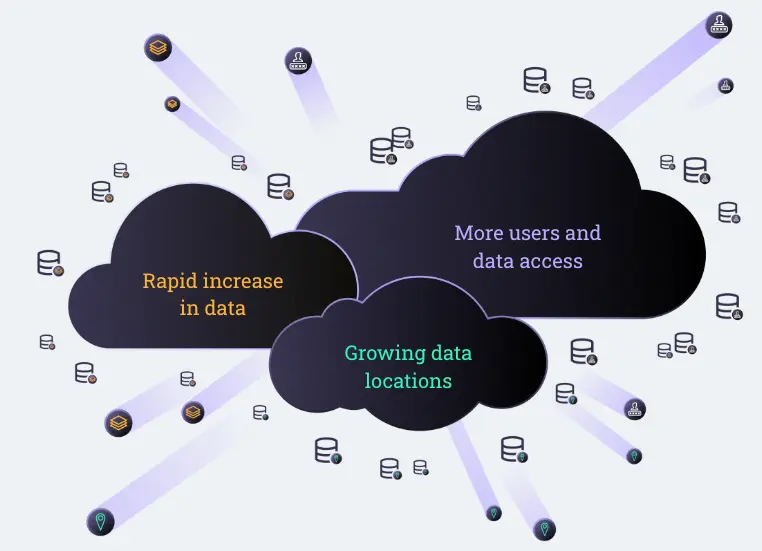

Enterprises are facing a unique set of challenges when it comes to managing and protecting their data. From my experience with customers, I’ve seen these challenges intensify as data governance frameworks struggle to keep up with evolving environments. Data is not confined to a single location - it’s scattered across different environments, from cloud platforms to on-premises servers and various SaaS applications. This distributed, siloed model of data storage, while beneficial for flexibility and scalability, complicates data governance and introduces new security and privacy risks.

Many organizations now manage petabytes of constantly changing information, with new data being created, updated, or shared every second. As this volume expands into the hundreds or even thousands of petabytes (exabytes!), keeping track of it all becomes an overwhelming challenge.

The situation is further complicated by the rapid movement of data. Employees and applications copy, modify, or relocate sensitive information in seconds, often across diverse environments. This includes on-premises systems, multiple cloud platforms, and technologies like PaaS and IaaS. Such rapid data sprawl makes it increasingly difficult to maintain visibility and control over the data, and to keep the data protected with all the required controls, such as encryption and access controls.

The Complexities of Access Control

Alongside data sprawl, there’s also the challenge of managing access. Enterprise data ecosystems support thousands of identities (users, apps, machines) each with different levels of access and permissions. These identities may be spread across multiple departments and accounts, and their data needs are constantly evolving. Tracking and controlling which identity can access which data sets becomes a complex puzzle, one that can expose an organization to risks if not handled with precision.

For any enterprise, having an accurate, up-to-date view of who or what has access to what data (and why) is essential to maintaining security and ensuring compliance. Without this visibility and control, organizations run the risk of unauthorized access and potential data breaches.

The Need for Automated Data Risk Assessment

In today’s data-driven world, security analysts often discover sensitive data in misconfigured environments—sometimes only after a breach—leading to a time-consuming process of validating data sensitivity, identifying business owners, and initiating remediation. In my work with enterprises, I’ve noticed this process is often further complicated by unclear ownership and inconsistent remediation practices.

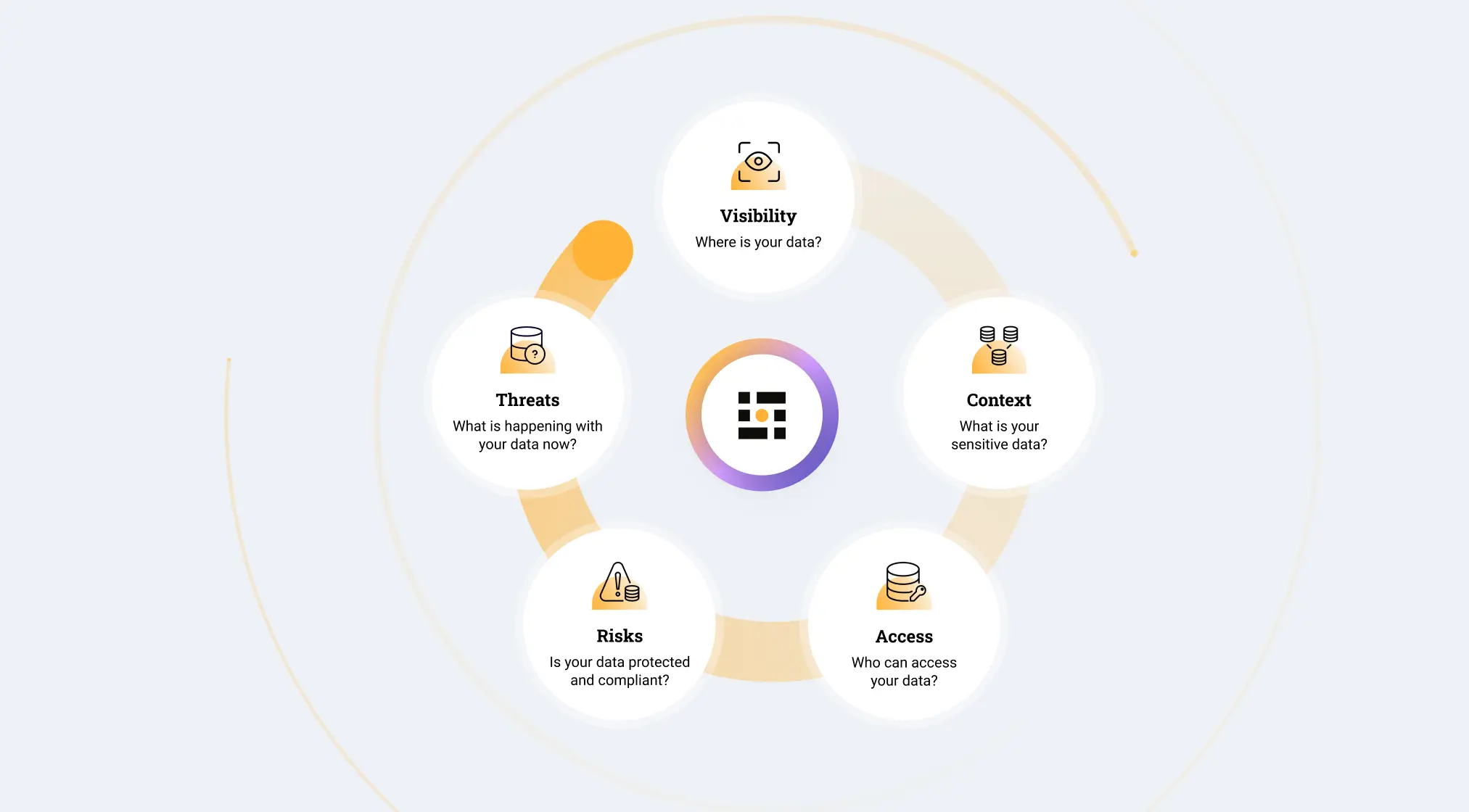

With data constantly moving and accessed across diverse environments, organizations face critical questions:

- Where is our sensitive data?

- Who has access?

- Are we compliant?

Addressing these challenges requires a dynamic, always-on approach with trusted classification and automated remediation to monitor risks and enforce protection 24/7.

The Scale of the Problem

For enterprise organizations, scale amplifies every data management challenge. The larger the organization, the more complex it becomes to ensure data visibility, secure access, and maintain compliance. Traditional, human-dependent security approaches often struggle to keep up, leaving gaps that malicious actors exploit. Enterprises need robust, scalable solutions that can adapt to their expanding data needs and provide real-time insights into where sensitive data resides, how it’s used, and where the risks lie.

The Solution: Data Security Platform (DSP)

Sentra’s Cloud-native Data Security Platform (DSP) provides a solution designed to meet these challenges head-on. By continuously identifying sensitive data, its posture, and access points, DSP gives organizations complete control over their data landscape.

Sentra enables security teams to gain full visibility and control of their data while proactively protecting against sensitive data breaches across the public cloud. By locating all data, properly classifying its sensitivity, analyzing how it’s secured (its posture), and monitoring where it’s moving, Sentra helps reduce the “data attack surface” - the sum of all places where sensitive or critical data is stored.

Based on a cloud-native design, Sentra’s platform combines robust capabilities, including Data Discovery and Classification, Data Security Posture Management (DSPM), Data Access Governance (DAG), and Data Detection and Response (DDR). This comprehensive approach to data security ensures that Sentra’s customers can achieve enterprise-scale protection and gain crucial insights into their data. Sentra’s DSP offers a distinct layer of data protection that goes beyond traditional, infrastructure-dependent approaches, making it an essential addition to any organization’s security strategy. By scaling data protection across multiple clouds and on-premises, Sentra enables organizations to meet the demands of enterprise growth and keep up with evolving business needs. And it does so efficiently, without creating unnecessary burdens on the security teams managing it.

How a Robust DSP Can Handle Scale Efficiently

When selecting a DSP solution, it's essential to consider: How does this product ensure your sensitive data is kept secure no matter where it moves? And how can it scale effectively without driving up costs by constantly combing through every bit of data?

The key is in tailoring the DSP to your unique needs. Each organization, with its variety of environments and security requirements, needs a DSP that can adapt to specific demands. At Sentra, we’ve developed a flexible scanning engine that puts you in control, allowing you to customize what data is scanned, how it is tagged, and when. Our platform incorporates advanced optimization algorithms to keep scanning costs low without compromising on quality.

Priority Scanning

Do you really need to scan all the organization’s data? Do all data stores and assets hold the same priority? A smart DSP solution puts you in control, allowing you to adjust your scanning strategy based on the organization's specific priorities and on where sensitive data is located and used.

For example, some organizations may prioritize scanning employee-generated content, while others might focus on their production environment and perform more frequent scans there. Tailoring your scanning strategy ensures that the most important data is protected without overwhelming resources.
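A priority-based strategy can be sketched as a scan interval per priority tier. The tiers, intervals, and store records below are assumptions chosen for illustration and do not describe any particular product's scheduler.

```python
from datetime import datetime, timedelta

# Assumed scan cadence per priority tier.
SCAN_INTERVALS = {
    "high": timedelta(days=1),
    "medium": timedelta(days=7),
    "low": timedelta(days=30),
}


def stores_due(stores: list, now: datetime) -> list:
    """Return names of data stores whose scan interval has elapsed.

    Stores that were never scanned (last_scanned is None) are always due.
    """
    due = []
    for store in stores:
        last = store["last_scanned"]
        if last is None or now - last >= SCAN_INTERVALS[store["priority"]]:
            due.append(store["name"])
    return due


now = datetime(2025, 1, 10)
stores = [
    {"name": "prod-db", "priority": "high", "last_scanned": datetime(2025, 1, 8)},
    {"name": "shared-drive", "priority": "medium", "last_scanned": datetime(2025, 1, 6)},
    {"name": "archive", "priority": "low", "last_scanned": None},
]
due_now = stores_due(stores, now)
```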

Smart Sampling

Is it necessary to scan every database record and every character in every file? The answer depends on your organization’s risk tolerance. For instance, in a PCI production environment, you might reduce the amount of sampling and scan every byte, while in a development environment you can group and sample data sets that share similar characteristics, allowing for more efficient scanning without compromising on security.
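The idea of grouping similar data sets and sampling from each group can be sketched as follows. Grouping by parent folder and file extension is an assumption made for this example; a real engine would likely use richer signals such as schema or structure.

```python
import random
from collections import defaultdict
from pathlib import PurePosixPath


def group_key(path: str) -> tuple:
    """Treat files in the same folder with the same extension as one group."""
    parsed = PurePosixPath(path)
    return (str(parsed.parent), parsed.suffix)


def sample_files(paths: list, per_group, seed: int = 0) -> list:
    """Pick up to `per_group` files from every group.

    Passing per_group=None disables sampling, which suits environments
    (such as PCI production) where everything must be scanned.
    """
    if per_group is None:
        return list(paths)
    groups = defaultdict(list)
    for path in paths:
        groups[group_key(path)].append(path)
    rng = random.Random(seed)  # seeded so runs are repeatable
    chosen = []
    for members in groups.values():
        chosen.extend(rng.sample(members, min(per_group, len(members))))
    return chosen


dev_paths = [f"logs/2024/day{n}.json" for n in range(100)] + ["exports/users.csv"]
to_scan = sample_files(dev_paths, per_group=5)
```

Every group contributes at least one file, so a rarely seen data type such as the single CSV export is still scanned even though most log files are skipped.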

Delta Scanning (Tracking Data Changes)

Delta scanning focuses on what matters most by selectively scanning data that poses a higher risk. Instead of re-scanning data that hasn’t changed, delta scanning prioritizes new or modified data, ensuring that resources are used efficiently. This approach helps to reduce scanning costs while keeping your data protection efforts focused on what has changed or been added. A smart DSP will run efficiently and prioritize “new data” over “old data”, allowing you to optimize your scanning costs.
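A minimal sketch of delta selection compares a fingerprint of each object against the fingerprint recorded at its last scan. The fingerprint could be a content hash or a storage-provided version tag; the values and paths below are made up for illustration.

```python
def select_for_scan(current: dict, last_scanned: dict) -> list:
    """Return paths that are new or whose fingerprint changed.

    Both arguments map an object path to a fingerprint string.
    """
    return sorted(
        path
        for path, fingerprint in current.items()
        if last_scanned.get(path) != fingerprint
    )


last_scanned = {"a.csv": "v1", "b.csv": "v1"}
current = {"a.csv": "v1", "b.csv": "v2", "c.csv": "v1"}
delta = select_for_scan(current, last_scanned)
```

Here the unchanged file is skipped, while the modified file and the new file are queued for scanning.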

On-Demand Data Scans

As you build your scanning strategy, it is important to keep the ability to trigger an immediate scan request. This is handy when you’re fixing security risks and want a short feedback loop to verify your changes.

This also gives you the ability to prepare for compliance audits effectively by ensuring readiness and accurate and fresh classification.

Balancing Scan Speed and Cost

Smart sampling enables a balance between scan speed and cost. By focusing scans on relevant data and optimizing the scanning process, you can keep costs down while maintaining high accuracy and efficiency across your data landscape.

Achieve Scalable Data Protection with Cloud-Native DSPs

As enterprise organizations continue to navigate the complexities of managing vast amounts of data across multiple environments, the need for effective data security strategies becomes increasingly critical. The challenges of access control, risk analysis, and scaling security efforts can overwhelm traditional approaches, making it clear that a more automated, comprehensive solution is essential. A cloud-native Data Security Platform (DSP) offers the agility and efficiency required to meet these demands.

By incorporating advanced features like smart sampling, delta scanning, and on-demand scan requests, Sentra’s DSP ensures that organizations can continuously monitor, protect, and optimize their data security posture without unnecessary resource strain. Balancing scan frequency, sensitivity and cost efficiency further enhances the ability to scale effectively, providing organizations with the tools they need to manage data risks, remain compliant, and protect sensitive information in an ever-evolving digital landscape.

If you want to learn more, talk to our data security experts and request a demo today.

How Sentra Built a Data Security Platform for the AI Era

In just three years, Sentra has witnessed the rapid evolution of the data security landscape. What began with traditional on-premise Data Loss Prevention (DLP) solutions has shifted to a cloud-native focus with Data Security Posture Management (DSPM). This marked a major leap in how organizations protect their data, but the evolution didn’t stop there.

The next wave introduced new capabilities like Data Detection and Response (DDR) and Data Access Governance (DAG), pushing the boundaries of what DSPM could offer. Now, we’re entering an era where SaaS Security Posture Management (SSPM) and Artificial Intelligence Security Posture Management (AI-SPM) are becoming increasingly important.

These shifts are redefining what we’ve traditionally called Data Security Platform (DSP) solutions, marking a significant transformation in the industry. The speed of this evolution speaks to the growing complexity of data security needs and the innovation required to meet them.

The Evolution of Data Security

What Is Driving The Evolution of Data Security?

The evolution of the data security market is being driven by several key macro trends:

- Digital Transformation and Data Democratization: Organizations are increasingly embracing digital transformation, making data more accessible to various teams and users.

- Rapid Cloud Adoption: Businesses are moving to the cloud at an unprecedented pace to enhance agility and responsiveness.

- Explosion of Siloed Data Stores: The growing number of siloed data stores, diverse data technologies, and an expanding user base is complicating data management.

- Increased Innovation Pace: The rise of artificial intelligence (AI) is accelerating the pace of innovation, creating new opportunities and challenges in data security.

- Resource Shortages: As organizations grow, the need for automation to keep up with increasing demands has never been more critical.

- Stricter Data Privacy Regulations: Heightened data privacy laws and stricter breach disclosure requirements are adding to the urgency for robust data protection measures.

Similarly, there has been an evolution in the roles involved with the management, governance, and protection of data. These roles are increasingly intertwined and co-dependent as described in our recent blog entitled “Data: The Unifying Force Behind Disparate GRC Functions”. We identify that today each respective function operates within its own domain yet shares ownership of data at its core. As the co-dependency on data increases so does the need for a unifying platform approach to data security.

Sentra has adapted to these changes to align our messaging with industry expectations, buyer requirements, and product/technology advancements.

A Data Security Platform for the AI Era

Sentra is setting the standard with the leading Data Security Platform for the AI Era.

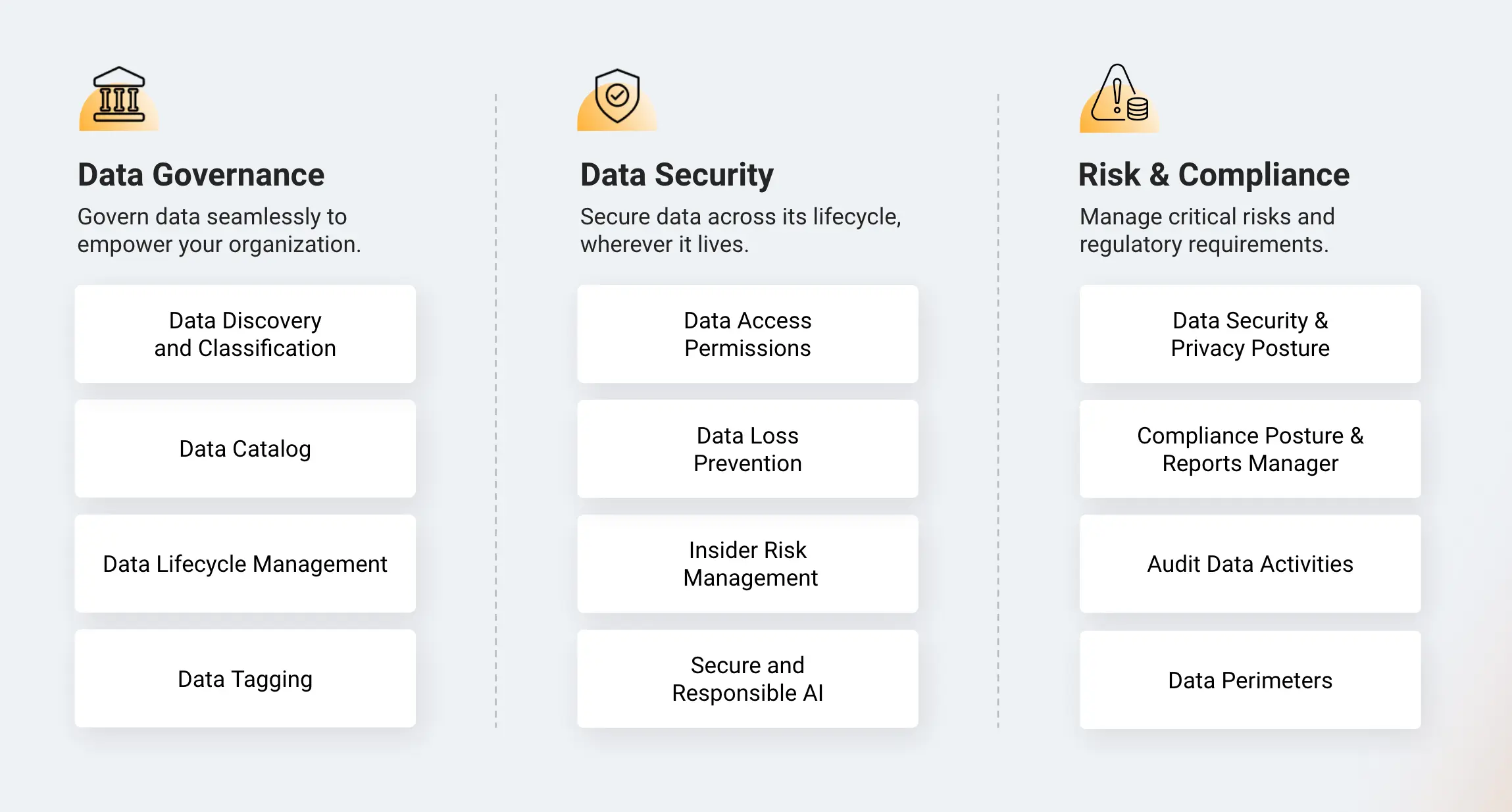

With its cloud-native design, Sentra seamlessly integrates powerful capabilities like Data Discovery and Classification, Data Security Posture Management (DSPM), Data Access Governance (DAG), and Data Detection and Response (DDR) into a comprehensive solution. This allows our customers to achieve enterprise-scale data protection while addressing critical questions about their data.

What sets Sentra apart is its connector-less, cloud-native architecture, which effortlessly scales to accommodate multi-petabyte, multi-cloud environments without the administrative burdens typical of connector-based legacy systems. These more labor-intensive approaches often struggle to keep pace and frequently overlook shadow data.

Moreover, Sentra harnesses the power of AI and machine learning to accurately interpret data context and classify data. This not only enhances data security but also ensures the privacy and integrity of data used in GenAI applications. We recognized the critical need for accurate and automated Data Discovery and Classification, along with Data Security Posture Management (DSPM), to address the risks associated with data proliferation in a multi-cloud landscape. Based on our customers' evolving needs, we expanded our capabilities to include DAG and DDR. These tools are essential for managing data access, detecting emerging threats, and improving risk mitigation and data loss prevention.

DAG maps the relationships between cloud identities, roles, permissions, data stores, and sensitive data classes. This provides a complete view of which identities and data stores in the cloud may be overprivileged. Meanwhile, DDR offers continuous threat monitoring for suspicious data access activity, providing early warnings of potential breaches.
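The kind of mapping described here can be pictured as a walk from identities through roles to data stores and the data classes they hold. The sketch below is a generic illustration with made-up identities, roles, and stores; it is not a description of how Sentra implements DAG.

```python
# Hypothetical relationships between identities, roles, stores, and data classes.
IDENTITY_ROLES = {"alice": ["analyst"], "etl-service": ["pipeline"]}
ROLE_STORES = {
    "analyst": ["reports-db"],
    "pipeline": ["reports-db", "customers-bucket"],
}
STORE_CLASSES = {
    "reports-db": {"financial"},
    "customers-bucket": {"pii", "pci"},
}


def reachable_classes(identity: str) -> set:
    """Collect every sensitive data class an identity can reach via its roles."""
    classes = set()
    for role in IDENTITY_ROLES.get(identity, []):
        for store in ROLE_STORES.get(role, []):
            classes |= STORE_CLASSES.get(store, set())
    return classes


exposure = {name: reachable_classes(name) for name in IDENTITY_ROLES}
```

Reviewing `exposure` against what each identity actually needs is one way to spot over-privileged access.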

We grew to support SaaS data repositories, including Microsoft 365 (SharePoint, OneDrive, Teams, etc.) and Google Workspace (Google Drive), and leveraged AI/ML to accurately classify data hidden within unstructured data stores.

Sentra’s accurate data sensitivity tagging and granular contextual details allow organizations to enhance the effectiveness of their existing tools, streamline workflows, and automate remediation processes. Additionally, Sentra offers pre-built integrations with various analysis and response tools used across the enterprise, including data catalogs, incident response (IR) platforms, IT service management (ITSM) systems, DLPs, CSPMs, CNAPPs, IAM, and compliance management solutions.

How Sentra Redefines Enterprise Data Security Across Clouds

Sentra has architected a solution that can deliver enterprise-scale data security without the traditional constraints and administrative headaches. Sentra’s cloud-native design easily scales to petabyte data volumes across multi-cloud and on-premises environments.

The Sentra platform incorporates a few major differentiators that distinguish it from other solutions including:

- Novel Scanning Technology: Sentra uses inventory files and advanced automatic grouping to create a new entity called “Data Asset”, a group of files that have the same structure, security posture, and business function. Sentra continuously and automatically reduces billions of files to thousands of data assets, each representing a different type of data. This enables 100% coverage of petabytes of cloud data while only several hundred thousand files need to be scanned (5-6 orders of magnitude less scanning). Because no random sampling is involved, all types of data are fully scanned, and differentials are scanned daily. Sentra supports all leading IaaS, PaaS, SaaS, and on-premises stores.

- AI-powered Autonomous Classification: Sentra’s use of AI-powered classification provides approximately 97% classification accuracy of data within unstructured documents and structured data. Additionally, Sentra provides rich data context (distinct from data class or type) about multiple aspects of files, such as data subject residency, business impact, synthetic or real data, and more. Further, Sentra’s classification uses LLMs (inside the customer environment) to automatically learn and adapt based on the unique business context and on user feedback about false positives, and it allows users to add AI-based classifiers using natural language (powered by LLMs). This autonomous learning means users don’t have to customize the system themselves, saving time and helping to keep pace with dynamic data.

- Data Perimeters / Movement: Sentra DataTreks™ provides the ability to understand data perimeters automatically and detect when data is moving (e.g. copied partially or fully) to a different perimeter. For example, it can detect data similarity/movement from a well-protected production environment to a less-protected development environment. This is important for highly dynamic cloud environments and promoting secure data democratization.

- Data Detection and Response (DDR): Sentra’s DDR module highlights anomalies such as unauthorized data access or unusual data movements in near real-time, integrating alerts into existing tools like ServiceNow or JIRA for quick mitigation.

- Easy Customization: In addition to ‘learning’ a customer’s unique data types, Sentra makes it easy to create new classifiers, modify policies, and apply custom tagging labels.
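To make the grouping idea from the first bullet concrete, here is a minimal sketch of collapsing an inventory into "data assets". It is an illustration under stated assumptions, not Sentra's actual algorithm: the inventory rows, the `asset_key` helper, and the rule of grouping by a digit-collapsed path template plus column names are all hypothetical.

```python
import re
from collections import defaultdict

# Hypothetical inventory rows: (object path, column names found in the file).
INVENTORY = [
    ("s3://logs/2024/01/01/events.parquet", ("user_id", "email", "ts")),
    ("s3://logs/2024/01/02/events.parquet", ("user_id", "email", "ts")),
    ("s3://logs/2024/01/03/events.parquet", ("user_id", "email", "ts")),
    ("s3://exports/2024/01/01/orders.csv", ("order_id", "amount")),
    ("s3://exports/2024/01/02/orders.csv", ("order_id", "amount")),
]


def asset_key(path, columns):
    """Assumed grouping rule: collapse digit runs so date partitions share one template."""
    template = re.sub(r"\d+", "#", path)
    return template, columns


def group_into_assets(inventory):
    """Group files that share a path template and schema into one asset."""
    assets = defaultdict(list)
    for path, columns in inventory:
        assets[asset_key(path, columns)].append(path)
    return dict(assets)


assets = group_into_assets(INVENTORY)
# In this sketch, one file per asset stands in for the whole group.
to_scan = [paths[0] for paths in assets.values()]
print(len(INVENTORY), "files ->", len(assets), "assets")
```

A real grouping would also need to account for security posture and business function, which this toy key ignores.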

As AI reshapes the digital landscape, it also creates new vulnerabilities, such as the risk of data exposure through AI training processes. The Sentra platform addresses these AI-specific challenges, while continuing to tackle the persistent security issues from the cloud era, providing an integrated solution that ensures data security remains resilient and adaptive.

Use Cases: Solving Complex Problems with Unique Solutions

Sentra’s unique capabilities allow it to serve a broad spectrum of challenging data security, governance and compliance use cases. Two frequently cited DSPM use cases are preventing data breaches and facilitating GenAI technology deployments. With the addition of data privacy compliance, these represent the top three.

Let's dive deeper into how Sentra's platform addresses specific challenges:

Data Risk Visibility

Sentra’s Data Security Platform enables continuous analysis of your security posture and automates risk assessments across your entire data landscape. It identifies data vulnerabilities across cloud-native and unmanaged databases, data lakes, and metadata catalogs. By automating the discovery and classification of sensitive data, teams can prioritize actions based on the sensitivity and policy guidelines related to each asset. This automation not only saves time but also enhances accuracy, especially when leveraging large language models (LLMs) for detailed data classification.

Security and Compliance Audit

Sentra’s Data Security Platform automates the identification of regulatory violations and helps ensure adherence to both custom and pre-built policies, including policies that map to common compliance frameworks.

It helps keep sensitive data in the right environments, preventing it from traveling to regions that violate retention policies or to stores that lack encryption. Unlike manual policy implementation, which is prone to errors, Sentra’s automated approach significantly reduces the risk of misconfiguration, so teams don’t miss critical activities.
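As a rough illustration of what an automated policy check of this kind might look like, the sketch below flags data stores that hold a sensitive data class in a disallowed region or without encryption. The `DataStore` type, the `ALLOWED_REGIONS` policy table, and the sample stores are hypothetical and are not taken from any Sentra API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataStore:
    name: str
    region: str
    encrypted: bool
    data_classes: frozenset


# Hypothetical policy: regions allowed to hold each sensitive data class.
ALLOWED_REGIONS = {
    "EU_PII": {"eu-west-1", "eu-central-1"},
    "PCI": {"us-east-1", "eu-west-1"},
}


def find_violations(stores):
    """Return (store, data class, reason) for every policy violation found."""
    violations = []
    for store in stores:
        for data_class in sorted(store.data_classes):
            allowed = ALLOWED_REGIONS.get(data_class)
            if allowed is None:
                continue  # data class not governed by this policy
            if store.region not in allowed:
                violations.append((store.name, data_class, "region"))
            if not store.encrypted:
                violations.append((store.name, data_class, "unencrypted"))
    return violations


STORES = [
    DataStore("crm-backup", "us-west-2", True, frozenset({"EU_PII"})),
    DataStore("payments", "us-east-1", False, frozenset({"PCI"})),
    DataStore("eu-crm", "eu-west-1", True, frozenset({"EU_PII"})),
]
violations = find_violations(STORES)
print(violations)
```

Expressing the policy as data rather than as manual checks is what lets the same rule run continuously across every store.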

Data Access Governance

Sentra enhances data access governance (DAG) by enforcing appropriate permissions for all users and applications within an organization. By automating the monitoring of access permissions, Sentra mitigates risks such as excessive permissions and unauthorized access. This ensures that teams can maintain least privilege access control, which is essential in a growing data ecosystem.
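One common way to reason about excessive permissions is to compare what each identity has been granted with what it has actually used. The sketch below assumes two hypothetical inputs, `GRANTED` and `USED`, which in practice would come from IAM policies and access logs; it is not a description of how Sentra computes this.

```python
# Hypothetical inputs: permissions granted to each identity, and the
# permissions each identity actually exercised according to access logs.
GRANTED = {
    "etl-service": {"s3:GetObject", "s3:PutObject", "s3:DeleteObject"},
    "analyst-amy": {"s3:GetObject"},
}
USED = {
    "etl-service": {"s3:GetObject", "s3:PutObject"},
    "analyst-amy": {"s3:GetObject"},
}


def unused_permissions(granted, used):
    """Map each identity to the granted permissions it never used."""
    report = {}
    for identity, perms in granted.items():
        extra = perms - used.get(identity, set())
        if extra:
            report[identity] = sorted(extra)
    return report


report = unused_permissions(GRANTED, USED)
print(report)
```

Permissions that appear in the report are candidates for removal when moving toward least privilege.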

Minimizing Data and Attack Surface

The platform’s capabilities also extend to detecting unmanaged sensitive data, such as shadow or duplicate assets. By automatically finding and classifying these unknown data points, Sentra minimizes the attack surface, controls data sprawl, and enhances overall data protection.
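A simple way to picture duplicate detection is content hashing: two objects with the same digest hold the same bytes, wherever they live. The sketch below is an assumption-laden illustration (exact-match hashing over an in-memory `OBJECTS` map with made-up paths); detecting partial copies would need a similarity technique instead.

```python
import hashlib
from collections import defaultdict


def content_digest(data: bytes) -> str:
    """SHA-256 digest of the raw object content."""
    return hashlib.sha256(data).hexdigest()


def find_duplicates(objects):
    """Return groups of locations whose content is byte-for-byte identical."""
    by_digest = defaultdict(list)
    for location, data in objects.items():
        by_digest[content_digest(data)].append(location)
    return [sorted(locs) for locs in by_digest.values() if len(locs) > 1]


# Hypothetical objects keyed by location.
OBJECTS = {
    "prod/customers.csv": b"id,email\n1,a@example.com\n",
    "dev/tmp/customers_copy.csv": b"id,email\n1,a@example.com\n",
    "prod/products.csv": b"sku,price\nA1,9.99\n",
}
duplicates = find_duplicates(OBJECTS)
print(duplicates)
```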

Secure and Responsible AI

As organizations build new Generative AI applications, Sentra extends its protection to LLM applications, treating them as part of the data attack surface. This proactive management, alongside monitoring of prompts and outputs, addresses data privacy and integrity concerns, ensuring that organizations are prepared for the future of AI technologies.

Insider Risk Management

Sentra effectively detects insider risks by monitoring user access to sensitive information across various platforms. Its Data Detection and Response (DDR) capabilities provide real-time threat detection, analyzing user activity and audit logs to identify unusual patterns.

Data Loss Prevention (DLP)

The platform integrates seamlessly with endpoint DLP solutions to monitor all access activities related to sensitive data. By detecting unauthorized access attempts from external networks, Sentra can prevent data breaches before they escalate, all while maintaining a positive user experience.

Sentra’s robust Data Security Platform offers solutions for these use cases and more, empowering organizations to navigate the complexities of data security with confidence. With a comprehensive approach that combines visibility, governance, and protection, Sentra helps businesses secure their data effectively in today’s dynamic digital environment.

From DSPM to a Comprehensive Data Security Platform

Sentra has evolved beyond being the leading Data Security Posture Management (DSPM) solution; we are now a Cloud-native Data Security Platform (DSP). Today, we offer holistic solutions that empower organizations to locate, secure, and monitor their data against emerging threats. Our mission is to help businesses move faster and thrive in today’s digital landscape.

What sets the Sentra DSP apart is its unique layer of protection, distinct from traditional infrastructure-dependent solutions. It enables organizations to scale their data protection across ever-expanding multi-cloud environments, meeting enterprise demands while adapting to ever-changing business needs—all without placing undue burdens on the teams managing it.

And we continue to progress. In a world rapidly evolving with advancements in AI, the Sentra Data Security Platform stands as the most comprehensive and effective solution to keep pace with the challenges of the AI age. We are committed to developing our platform to ensure that your data security remains robust and adaptive.

AI: Balancing Innovation with Data Security

The Rise of AI

Artificial Intelligence (AI) is a broad discipline, dating back to the 1950s, focused on creating machines capable of mimicking human intelligence and, more specifically, learning.

AI tasks might include understanding natural language, recognizing images, solving complex problems, and even driving cars. Unlike traditional software, AI systems can learn from experience, adapt to new inputs, and perform human-like tasks by processing large amounts of data.

Today, around 42% of companies report exploring AI use internally, and over 50% of companies plan to incorporate AI technologies in 2024. The AI market is expected to reach a staggering $407 billion by 2027.

What Is the Difference Between AI, ML and LLM?

AI encompasses a vast range of technologies, including Machine Learning (ML), Generative AI (GAI), and Large Language Models (LLM), among others.

Machine Learning, a subset of AI, was developed in the 1980s. Its main focus is on enabling machines to learn from data, improve their performance, and make decisions without explicit programming. Google's search algorithm is a prime example of an ML application, using previous data to refine search results.

Generative AI (GAI), which evolved from ML in the early 21st century, represents a class of algorithms capable of generating new data. These algorithms construct data that resembles the data they were trained on, making them essential in fields like content creation and data augmentation.

Large Language Models (LLMs) in turn arose from the GAI subset. LLMs generate human-like text by predicting the likelihood of a word given the previous words used in the text. They are the core technology behind many voice assistants and chatbots. One of the most well-known examples is the GPT family of models behind OpenAI's ChatGPT.

LLMs are trained on huge sets of data — which is why they are called "large" language models. LLMs are built on machine learning: specifically, a type of neural network called a transformer model.

In simpler terms, an LLM is a computer program that has been fed enough examples to be able to recognize and interpret human language or other types of complex data. Many LLMs are trained on data that has been gathered from the Internet: thousands or even millions of gigabytes' worth of text. But the quality of the samples affects how well LLMs learn natural language, so LLM developers may use a more curated data set.
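The idea of predicting the likelihood of a word from the words before it can be shown with a toy bigram model that simply counts which word follows which. This is only a sketch of the principle: real LLMs use transformer networks over tokens and far more context, and the `CORPUS` string here is made up.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus; real models train on vastly more text.
CORPUS = "the cat sat on the mat . the cat ate the fish ."


def train_bigrams(text):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    words = text.split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def next_word_probabilities(counts, prev):
    """Estimate P(next word | previous word) from the counts."""
    total = sum(counts[prev].values())
    return {word: n / total for word, n in counts[prev].items()}


model = train_bigrams(CORPUS)
probs = next_word_probabilities(model, "the")
print(probs)
```

In this corpus "the" is followed by "cat" twice and by "mat" and "fish" once each, so "cat" gets the highest probability.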

Here are some of the main functions LLMs currently serve:

- Natural language generation

- Language translation

- Sentiment analysis

- Content creation

What Is AI-SPM?

AI-SPM (artificial intelligence security posture management) is a comprehensive approach to securing artificial intelligence and machine learning. It includes identifying and addressing vulnerabilities, misconfigurations, and potential risks associated with AI applications and training data sets, as well as ensuring compliance with relevant data privacy and security regulations.

How Can AI Help Data Security?

With data breaches and cyber threats becoming increasingly sophisticated, having a way of securing data with AI is paramount. AI-powered security systems can rapidly identify and respond to potential threats, learning and adapting to new attack patterns faster than traditional methods. According to a 2023 report by IBM, the average time to identify and contain a data breach was reduced by nearly 50% when AI and automation were involved.

By leveraging machine learning algorithms, these systems can detect anomalies in real-time, ensuring that sensitive information remains protected. Furthermore, AI can automate routine security tasks, freeing up human experts to focus on more complex challenges. Ultimately, AI-driven data security not only enhances protection but also provides a robust defense against evolving cyber threats, safeguarding both personal and organizational data.
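As a minimal sketch of anomaly detection, the example below flags a day whose activity deviates sharply from a user's recent history using a z-score. It is a simple statistical stand-in for the learned models described above; the `DAILY_DOWNLOADS` figures and the threshold of 3 standard deviations are assumptions chosen for illustration.

```python
from statistics import mean, stdev


def is_anomalous(history, today, threshold=3.0):
    """Flag `today` if it lies more than `threshold` standard deviations from the mean."""
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return today != mu  # any change from a perfectly flat baseline is unusual
    return abs(today - mu) / sigma > threshold


# Hypothetical count of sensitive files one user downloaded on each of the last 7 days.
DAILY_DOWNLOADS = [12, 15, 11, 14, 13, 12, 16]

print(is_anomalous(DAILY_DOWNLOADS, 14))   # a typical day
print(is_anomalous(DAILY_DOWNLOADS, 250))  # a sudden bulk download
```

Production systems typically learn richer baselines (per user, per data store, per time of day), but the principle of comparing new activity against an expected pattern is the same.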

What Do You Need to Secure

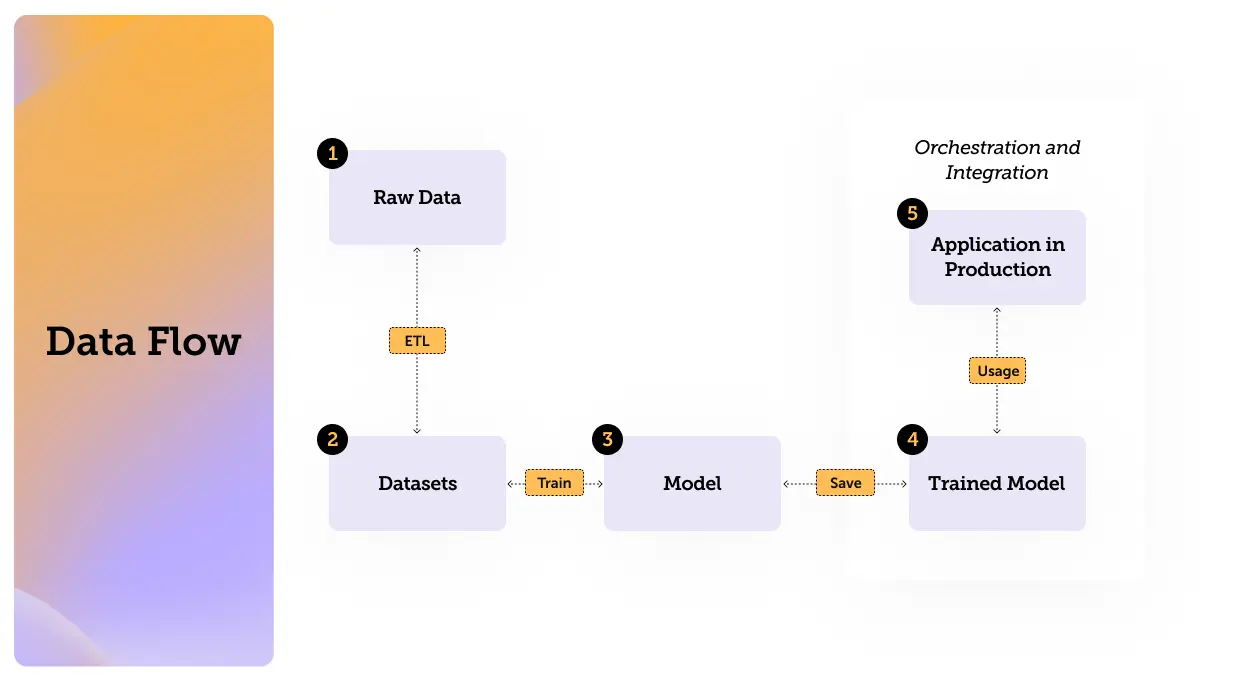

So now that we have defined Artificial Intelligence, Machine Learning and Large Language Models, it’s time to get familiar with the data flow and its components. Understanding the data flows can help us identify those vulnerable points where we can improve data security.

The process can be illustrated with the following flow:

(If you are already familiar with datasets models and everything in between feel free to jump straight to the threats section)

Understanding Training Datasets

The main component of the first stage we will discuss is the training dataset.

Training datasets are collections of labeled or unlabeled data used to train, validate, and test machine learning models. They can be identified by their structured nature and the presence of input-output pairs for supervised learning.

Training datasets are essential for training models, as they provide the information the model needs to learn and make predictions. They can be created manually, produced by ETL tools such as AWS Glue, or sourced from predefined open-source datasets such as those from HuggingFace, Kaggle, and GitHub.

Training datasets can be stored locally on personal computers, on virtual servers, or in cloud data services such as AWS S3, RDS, and Glue.

Examples of training datasets include image datasets for computer vision tasks, text datasets for natural language processing, and tabular datasets for predictive modeling.
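The "train, validate, and test" split mentioned above can be sketched in a few lines. The 80/10/10 proportions, the fixed seed, and the toy `EXAMPLES` list of (input, label) pairs are assumptions for illustration; the right split depends on the dataset and task.

```python
import random


def split_dataset(examples, train_frac=0.8, val_frac=0.1, seed=42):
    """Shuffle the examples and split them into train, validation, and test sets."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is reproducible
    n_train = int(len(shuffled) * train_frac)
    n_val = int(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


# Toy labeled dataset of (input, label) pairs for supervised learning.
EXAMPLES = [(f"message {i}", i % 2) for i in range(100)]
train, val, test = split_dataset(EXAMPLES)
print(len(train), len(val), len(test))
```

From a security perspective, each of the three resulting sets is a copy of the underlying data and needs the same protection as the original.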

What is a Machine Learning Model?

This brings us to the next component: models.

A model in machine learning is a mathematical representation that learns from data to make predictions or decisions. Models can be pre-trained, like GPT-4, GPT-4.5, and Llama, or developed in-house.

Models are trained using training datasets. The training process involves feeding the model data so it can learn patterns and relationships within the data. This process requires compute power and can be done using containers or services such as AWS SageMaker and Bedrock. The output is a set of learned parameters (weights) that define how the model behaves. If someone gets their hands on those parameters, it's as if they trained the model themselves.

Once trained, models can be used to predict outcomes based on new inputs. They are deployed in production environments to perform tasks such as classification, regression, and more.
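To show why the learned parameters are the valuable artifact, here is a deliberately tiny sketch: a one-variable linear model trained by gradient descent, whose entire "knowledge" ends up in two numbers. The data, learning rate, and epoch count are assumptions for illustration; production models have billions of parameters, but the principle is the same.

```python
import json


def train_linear_model(xs, ys, lr=0.05, epochs=2000):
    """Fit y = w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = sum((w * x + b - y) * x for x, y in zip(xs, ys)) * 2 / n
        grad_b = sum((w * x + b - y) for x, y in zip(xs, ys)) * 2 / n
        w -= lr * grad_w
        b -= lr * grad_b
    return {"w": w, "b": b}


def predict(params, x):
    """Use the learned parameters on a new input."""
    return params["w"] * x + params["b"]


# Toy training data generated from y = 2x + 1.
XS = [0.0, 1.0, 2.0, 3.0, 4.0]
YS = [1.0, 3.0, 5.0, 7.0, 9.0]

params = train_linear_model(XS, YS)
# Anyone holding a copy of the parameters can predict without the data or the training run.
copied = json.loads(json.dumps(params))
print(round(predict(copied, 10.0), 3))
```

The copied parameters reproduce the model's predictions exactly, which is why stored model weights deserve the same protection as the training data.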

How Data Flows: Orchestration and Integration

This leads us to the last stage: orchestration and integration (the flow). Orchestration tools manage the deployment and execution of models, ensuring they perform as expected in production environments. They handle the workflow of machine learning processes, from data ingestion to model deployment.

Integration: Integrating models into applications involves using APIs and other interfaces to allow seamless communication between the model and the application. This ensures that the model's predictions are utilized effectively.
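A minimal sketch of such an integration layer is shown below: a request handler that validates JSON input, calls the model, and returns a JSON response. The `toy_model` rule, the `bytes_transferred` field, and the status codes are hypothetical; a real deployment would sit behind a web framework and add authentication.

```python
import json


def toy_model(features):
    """Stand-in for a deployed model: flags large transfers for review."""
    return "review" if features["bytes_transferred"] > 1_000_000 else "allow"


def handle_request(body):
    """Validate a JSON request, call the model, and return (status, JSON body)."""
    try:
        payload = json.loads(body)
        features = {"bytes_transferred": float(payload["bytes_transferred"])}
    except (ValueError, KeyError, TypeError):
        # Reject malformed input before it ever reaches the model.
        return 400, json.dumps({"error": "invalid request"})
    return 200, json.dumps({"decision": toy_model(features)})


print(handle_request('{"bytes_transferred": 5000000}'))
print(handle_request("not json"))
```

Validating input at this boundary matters because the interface is the point where untrusted data first meets the model.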

Possible Threats: Orchestration tools can be exploited to perform LLM attacks, where vulnerabilities in the deployment and management processes are targeted.

We will cover this in the next chapter of this article.

Conclusion

We reviewed what AI is composed of and examined the individual components, including data flows and how they function within the broader AI ecosystem. In part 2 of this three-part series, we’ll explore LLM attack techniques and threats.

With Sentra, your team will gain visibility into and control over the training datasets, models, and AI applications in your cloud environments, such as AWS. By using Sentra, you can minimize data security risks in your AI applications and ensure they remain secure without sacrificing efficiency or performance. Sentra can help you navigate the complexities of AI security, providing the tools and knowledge necessary to protect your data and maximize the potential of your AI initiatives.

How Sentra Accurately Classifies Sensitive Data at Scale

Background on Classifying Different Types of Data

It’s helpful to first review the primary types of data we need to classify, structured and unstructured, along with some of the historical challenges associated with analyzing and accurately classifying them.

What Is Structured Data?