Updates From the Front Lines of Data Security

How CISOs Will Evaluate DSPM in 2026: 13 New Buying Criteria for Security Leaders

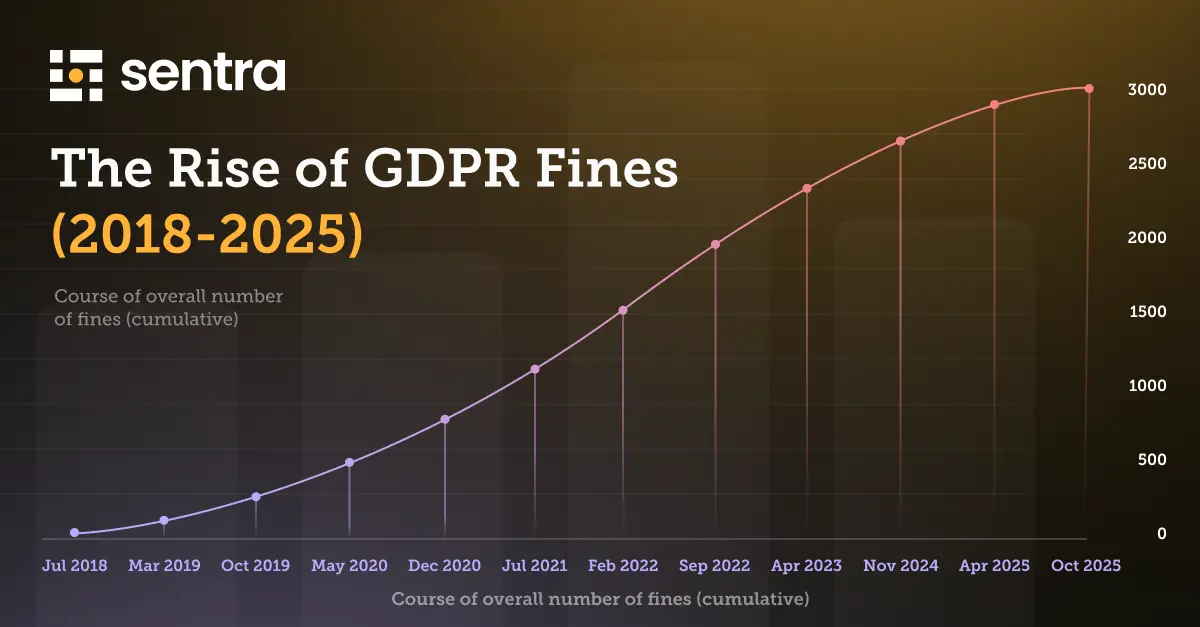

Data Security Posture Management (DSPM) has quickly become part of mainstream security, gaining ground on older solutions and newer categories like XDR and SSE. Beneath the hype, most security leaders share the same frustration: too many products promise results but can't deliver in the messy, large-scale environments that enterprises actually run. The DSPM market is expected to jump from $1.86B in 2024 to $22.5B by 2033, giving buyers more choice - and more reason to demand what truly sets a solution apart in the years ahead.

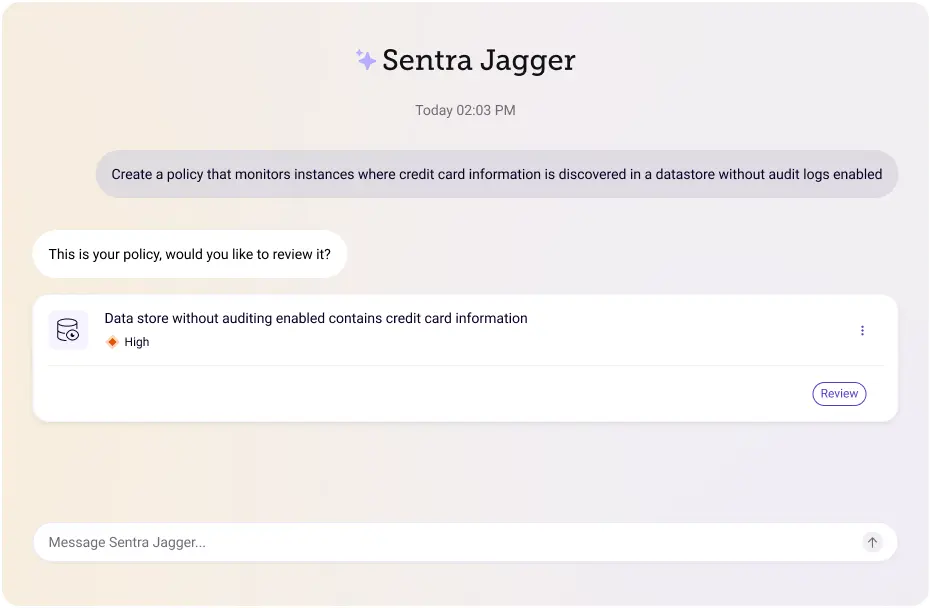

Instead of letting vendors dictate the RFP, what if CISOs led the process themselves? Fast-forward to 2026, and the checklist a CISO uses to evaluate DSPM solutions barely resembles the checklists of the past. Here are the 13 criteria everyone should insist on - criteria most vendors would rather you ignore, but that industry leaders like Sentra are happy to highlight.

Why Legacy DSPM Evaluation Fails Modern CISOs

Traditional DSPM/DCAP evaluations were all about ticking off feature boxes: Can it scan S3 buckets? Can it show file types? But most CISOs I meet point to poor data visibility as their biggest vulnerability. It’s already obvious that today’s fragmented, agent-heavy tools aren’t cutting it.

So, what’s changed for 2026? Massive data volumes, new unstructured formats like chat logs or AI training sets, and rapid cloud adoption mean security leaders now need a different class of protection.

The right platform:

- Works without agents, everywhere you operate

- Focuses on bringing real, risk-based context - not just adding more alerts

- Automates compliance and fixes identity/data governance gaps

- Manages both structured and unstructured data across the whole organization

Old evaluation checklists don’t come close. It’s time to update yours.

The 13 DSPM Buying Criteria Vendors Hope You Don’t Ask

Here’s what should be at the heart of every modern assessment, especially for 2026:

- Is the platform truly agentless, everywhere? Agent-based designs slow you down and leave coverage gaps. The best solutions deploy in minutes with no agents across SaaS, IaaS, and on-premises environments, and continuously discover unknown and shadow data.

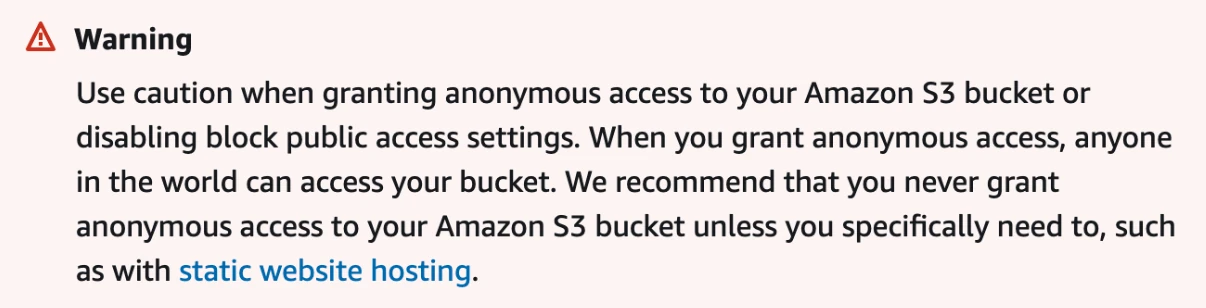

- Does it operate fully in-environment? Your data needs to stay in your cloud or region - not copied elsewhere for analysis. In-environment processing guards privacy, simplifies compliance, and matches global regulations (Cloud Security Alliance).

- Can it accurately classify unstructured data (>98% accuracy)? Most tools stumble outside of databases. Insist on AI-powered classification that understands language, context, and sensitivity. This covers everything from PDF files to Zoom recordings to LLM training data.

- How does it handle petabyte-scale scanning, and will it break the bank? Legacy options get expensive as data grows. You need tools that scan quickly and stay cost-effective across multi-cloud and hybrid environments at massive scale.

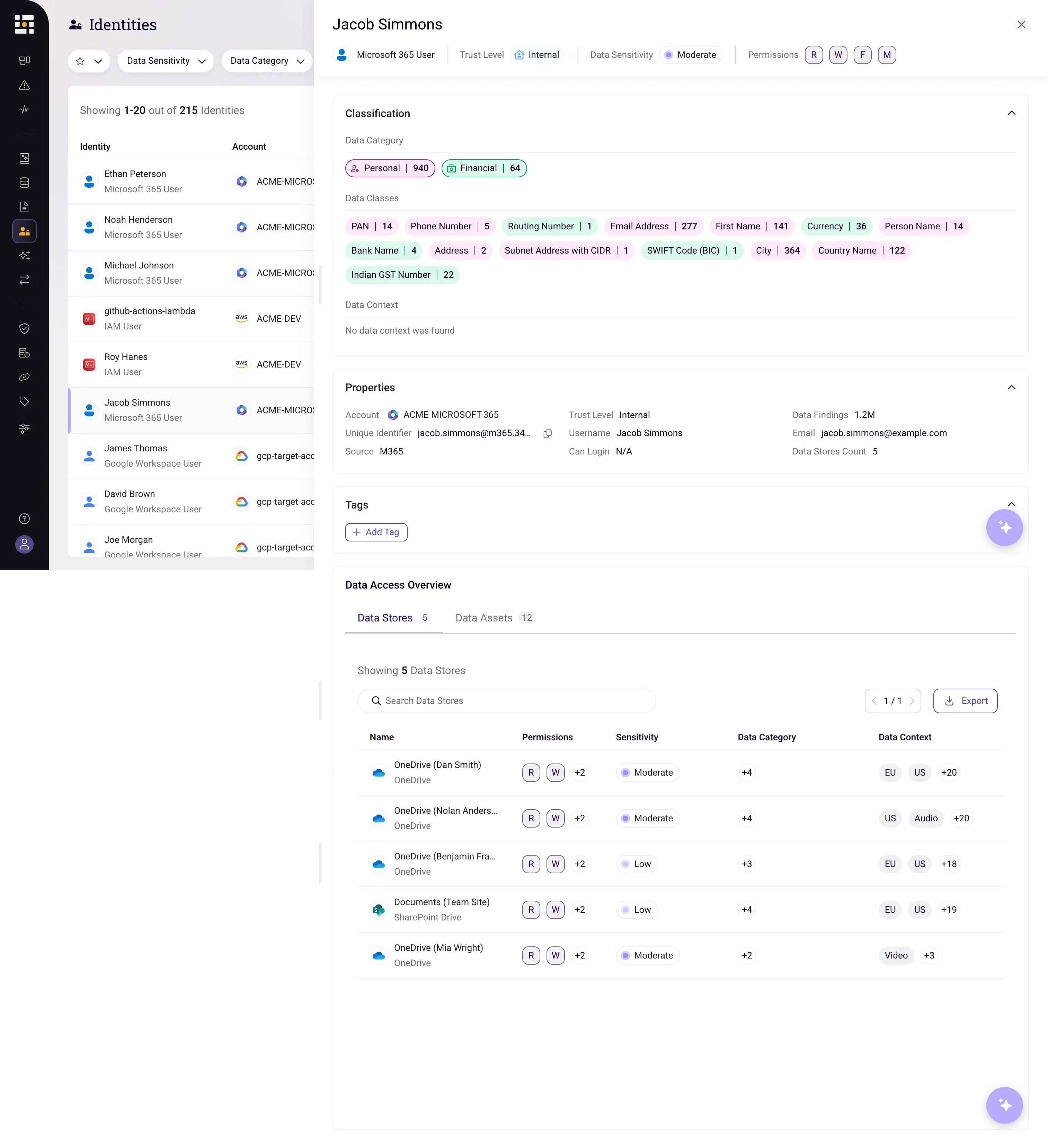

- Does it unify data and identity governance? Very few platforms support both human and machine identities - especially for service accounts or access across clouds. Only end-to-end coverage breaks down barriers between IT, business, and security.

- Can it surface business-contextualized risk insights? You need more than technical vulnerability data. Leading platforms map sensitive data by its business importance and risk, making it easier to prioritize and act.

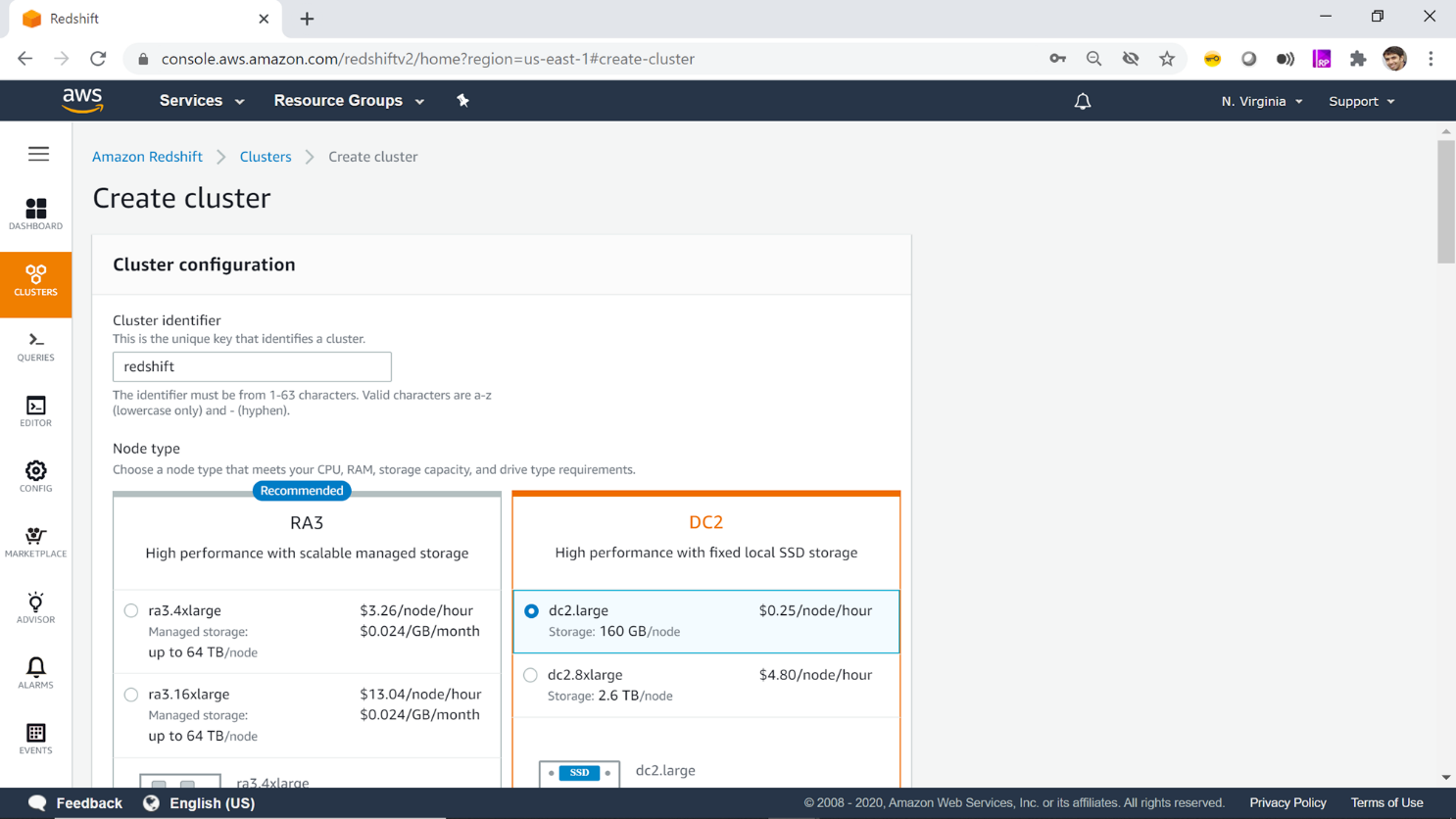

- Is deployment frictionless and multi-cloud native? DSPM should work natively in AWS, Azure, GCP, and SaaS, no complicated integrations required. Insist on fast, simple onboarding.

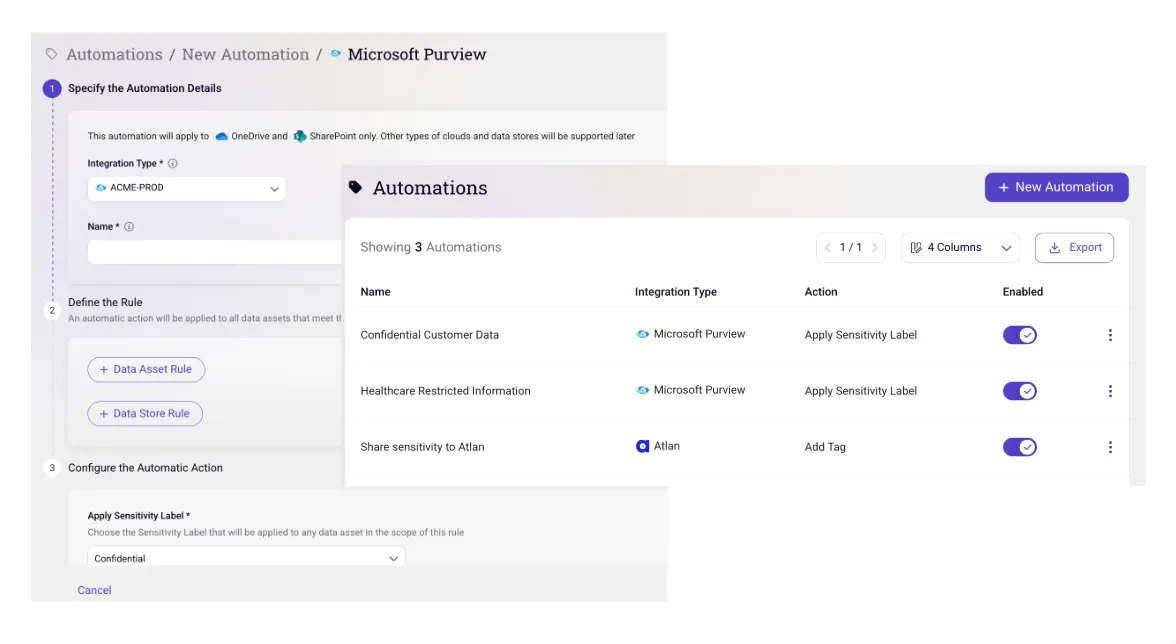

- Does it offer full remediation workflow automation? It’s not enough to raise the alarm. You want exposures fixed automatically, at scale, without manual effort.

- Does it fit within my data security ecosystem? Choose only platforms that integrate with and enrich your current data governance stack, so every tool operates from the same source of truth without adding operational overhead.

- Are compliance and security controls bridged in a unified dashboard? No more switching between tools. Choose platforms where compliance and risk data are combined into a single view for GRC and SecOps.

- Does it support business-driven data discovery (e.g., by project, region, or owner)? You need dynamic views tied to business needs, helping cloud initiatives move faster without adding risk, so security can become a business enabler.

- What’s the track record on customer outcomes at scale? Actual results in complex, high-volume settings matter more than demo promises. Look for real stories from large organizations.

- How is pricing structured for future growth? Beware of pricing that seems low until your data doubles. Look for clear, usage-based models so expansion won’t bring hidden costs.
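
The pricing question is easy to pressure-test with a few lines of arithmetic: project cost as data grows instead of comparing today's quote. The sketch below compares two hypothetical models, a flat per-terabyte rate and a tiered quote whose per-terabyte rate jumps above a volume threshold. All rates, thresholds, and function names are illustrative assumptions, not any vendor's actual pricing.

```python
# Illustrative only: every rate and threshold here is a hypothetical assumption,
# not any vendor's actual price list.

def flat_per_tb(tb: float, rate: float = 20.0) -> float:
    """Monthly cost when every terabyte is billed at the same rate."""
    return tb * rate


def tiered_per_tb(tb: float, base_rate: float = 15.0,
                  threshold_tb: float = 500.0, overage_rate: float = 45.0) -> float:
    """Monthly cost when terabytes above a threshold are billed at a higher rate."""
    base = min(tb, threshold_tb) * base_rate
    overage = max(tb - threshold_tb, 0.0) * overage_rate
    return base + overage


def project(start_tb: float, annual_growth: float, years: int):
    """Yield (year, data_tb, flat_cost, tiered_cost) as the data estate grows."""
    tb = start_tb
    for year in range(years + 1):
        yield year, tb, flat_per_tb(tb), tiered_per_tb(tb)
        tb *= 1 + annual_growth


if __name__ == "__main__":
    # Assume 400 TB today, doubling every year (100% annual growth).
    for year, tb, flat, tiered in project(400, 1.0, 3):
        print(f"year {year}: {tb:>6.0f} TB  flat ${flat:>9,.0f}  tiered ${tiered:>9,.0f}")
```

With these assumed numbers, the tiered quote looks cheaper at 400 TB ($6,000 vs. $8,000 a month) but overtakes the flat model as soon as data doubles to 800 TB ($21,000 vs. $16,000).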

Agentless, In-Environment Power: Why It’s the New Gold Standard

Agentless, in-environment architecture removes the hassle of endpoint installs and connectors, and the worry about where your data goes. Gartner has highlighted that this approach reduces regulatory headaches and enables fast onboarding. As organizations keep adding new cloud and hybrid systems, only these platforms can truly scale for global teams and strict requirements.

Sentra’s platform keeps all processing inside your environment. There’s no need to export your data, which offers peace of mind for privacy, sovereignty, and speed. With regulations increasing everywhere, this approach isn’t just helpful; it’s essential.
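
To make "no need to export your data" concrete: in an in-environment design, the classifier runs next to the data, and only findings (metadata such as data type and match counts) leave the environment. The sketch below is a simplified illustration using two regular-expression detectors; the function name, patterns, and output shape are hypothetical and far simpler than production AI-based classification.

```python
import re

# Hypothetical detectors for illustration only; production classifiers rely on
# much richer (often AI-based) models and context, not two regular expressions.
DETECTORS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def classify_in_place(object_path: str, text: str) -> dict:
    """Scan content where it lives and return only metadata about the findings.

    Matched values are counted but never copied into the result, so the result
    can be sent to a central console without exporting the sensitive data.
    """
    findings = {}
    for label, pattern in DETECTORS.items():
        count = len(pattern.findall(text))
        if count:
            findings[label] = count
    return {"object": object_path, "findings": findings}


if __name__ == "__main__":
    sample = "Contact jane@example.com, SSN 123-45-6789, backup bob@example.org"
    print(classify_in_place("s3://example-bucket/hr/notes.txt", sample))
```

The printed result reports two email addresses and one SSN for the object, without containing any of the matched values themselves.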

Classification Accuracy and Petabyte-Scale Efficiency: The Must-Haves for 2026

Unstructured data is growing fast, and workloads are now more diverse than ever. The difference between basic scanning and real, AI-driven classification is often the difference between protecting your company and ending up on the breach list. Leading platforms, including Sentra, deliver over 95% classification accuracy by using large language models and in-house methods across both structured and unstructured data.
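
Accuracy figures are only meaningful if you can check them against your own data. One way to do that during a proof of concept is to hand-label a sample of files and compare the tool's labels with yours. The sketch below computes overall accuracy plus per-label precision and recall; the label names, file IDs, and sample data are hypothetical.

```python
from collections import defaultdict


def evaluate(truth: dict, predicted: dict) -> dict:
    """Compare hand-labeled ground truth with a tool's labels for the same files.

    Both arguments map file IDs to a label. Files the tool did not label are
    counted as "none". Returns overall accuracy and per-label precision/recall.
    """
    tp, fp, fn = defaultdict(int), defaultdict(int), defaultdict(int)
    correct = 0
    for file_id, actual in truth.items():
        guess = predicted.get(file_id, "none")
        if guess == actual:
            correct += 1
            tp[actual] += 1
        else:
            fp[guess] += 1   # tool assigned this label wrongly
            fn[actual] += 1  # tool missed the true label
    per_label = {}
    for label in sorted(set(tp) | set(fp) | set(fn)):
        claimed = tp[label] + fp[label]
        present = tp[label] + fn[label]
        per_label[label] = {
            "precision": tp[label] / claimed if claimed else 0.0,
            "recall": tp[label] / present if present else 0.0,
        }
    return {"accuracy": correct / len(truth), "per_label": per_label}


if __name__ == "__main__":
    # Hypothetical sample: four hand-labeled files and the tool's output.
    truth = {"a.pdf": "pii", "b.docx": "pii", "c.xlsx": "financial", "d.txt": "none"}
    tool = {"a.pdf": "pii", "b.docx": "none", "c.xlsx": "financial", "d.txt": "pii"}
    print(evaluate(truth, tool))
```

Per-label precision and recall matter because a single accuracy number can hide a tool that rarely flags sensitive data at all; in a real evaluation, the labeled sample should be large and representative of your own file types.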

Why are speed and scale so important? Old-school solutions were built with smaller data volumes in mind. Today, DSPM platforms must quickly and affordably identify and secure data in vast environments. Sentra’s scanning is both fast and affordable, keeping up as your data grows. To learn more about these challenges, read Reducing Cloud Data Attack Risk.

Don’t Settle: Redefining Best-in-Class DSPM Buying Criteria for 2026

Many vendors are still only comfortable offering the basics, but the demands facing CISOs today are anything but basic. Combining identity and data governance, multi-cloud support that works out of the box, and risk insights mapped to real business needs - these are the essential elements for protecting today’s and tomorrow’s data. If a solution doesn’t check all 13 boxes, you’re already limiting your security program before you start.

Need a side-by-side comparison for your next decision? Request a personalized demo to see exactly how Sentra meets every requirement.

Conclusion

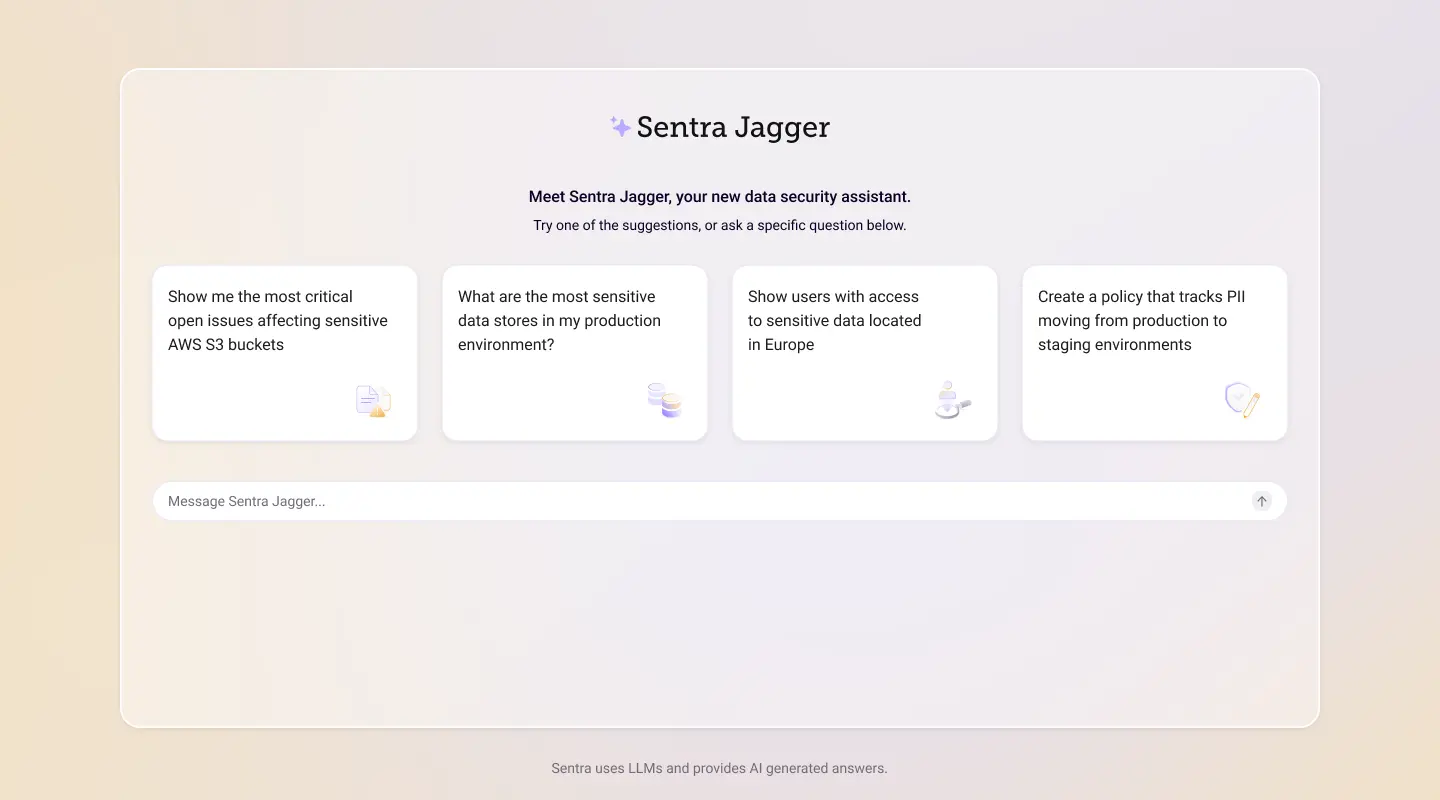

With AI further accelerating data growth, security teams can’t afford to settle for legacy features or generic checklists. By insisting on meaningful criteria - true agentless design, in-environment processing, precise AI-driven classification, scalable affordability, and business-first integration - CISOs set a higher standard for both their own organizations and the wider industry.

Sentra is ready to help you raise the bar. Contact us for a data risk assessment, or to discuss how to ensure your next buying decision leads to better protection, less risk, and a stronger position for the future.

Continue the Conversation

If you want to go deeper into how CISOs are rethinking data security, I explore these topics regularly on Guardians of the Data, a podcast focused on real-world data protection challenges, evolving DSPM strategies, and candid conversations with security leaders.

Watch or listen to Guardians of the Data for practical insights on securing data in an AI-driven, multi-cloud world.

Sentra Is One of the Hottest Cybersecurity Startups

We knew we were on a hot streak, and now it’s official.

Sentra has been named one of CRN’s 10 Hottest Cybersecurity Startups of 2025. This recognition is a direct reflection of our commitment to redefining data security for the cloud and AI era, and of the growing trust forward-thinking enterprises are placing in our unique approach.

This milestone is more than just an award. It shows our relentless drive to protect modern data systems and gives us a chance to thank our customers, partners, and the Sentra team whose creativity and determination keep pushing us ahead.

The Market Forces Fueling Sentra’s Momentum

Cybersecurity is undergoing major changes. With 94% of organizations worldwide now relying on cloud technologies, the rapid growth of cloud-based data and the rise of AI agents have made security both more urgent and more complicated. These shifts are creating demands for platforms that combine unified data security posture management (DSPM) with fast data detection and response (DDR).

Industry data highlights this trend: over 73% of enterprise security operations centers now use AI for real-time threat detection, leading to a 41% drop in breach containment time. The global cybersecurity market is growing rapidly and is estimated to reach $227.6 billion in 2025, fueled by the need to break down barriers between data discovery, classification, and incident response (2025 cybersecurity market insights). In 2025, organizations are expected to spend about 10% more on cyber defenses, which will only increase demand for new solutions.

Why Recognition by CRN Matters and What It Means

Landing a place on CRN’s 10 Hottest Cybersecurity Startups of 2025 is more than publicity for Sentra. It signals we truly meet the moment. Our rise isn’t just about new features; it’s about helping security teams tackle the growing risks posed by AI and cloud data head-on. This recognition follows our mention as a CRN 2024 Stellar Startup, a sign of steady innovation and mounting interest from analysts and enterprises alike.

Being on CRN’s list means customers, partners, and investors value Sentra’s straightforward, agentless data protection that helps organizations work faster and with more certainty.

Innovation Where It Matters: Sentra’s Edge in Data and AI Security

Sentra stands out for its practical approach to solving urgent security problems, including:

- Agentless, multi-cloud coverage: Sentra identifies and classifies sensitive data and AI agents across cloud, SaaS, and on-premises environments without any agents or hidden gaps.

- Integrated DSPM + DDR: We go beyond posture monitoring by automatically investigating and responding to incidents, so security teams can act quickly (see why DSPM+DDR matters).

- AI-driven advancements: Features such as domain-specific AI classifiers for unstructured data (advanced AI classification leveraging SLMs) and Data Security for AI Agents and Microsoft 365 Copilot help customers stay in control as they adopt new technologies (Sentra’s AI-powered innovation).

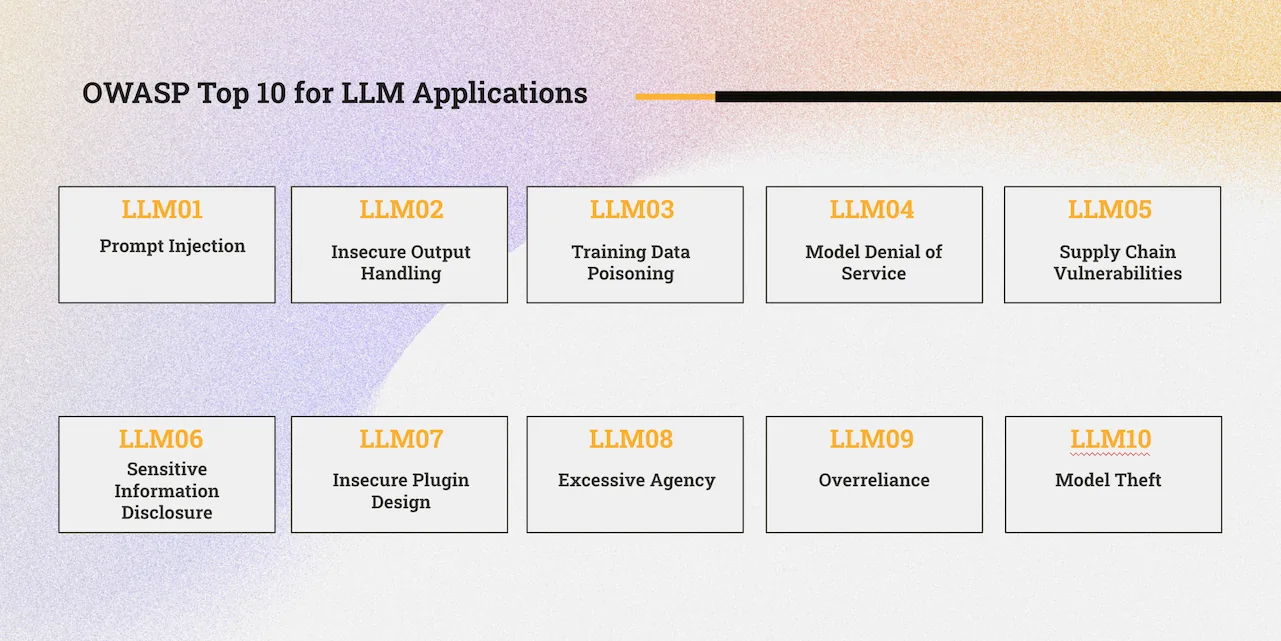

With new attack surfaces popping up all the time, from prompt injection to autonomous agent drift, Sentra’s architecture is built to handle the world of AI.

A Platform Approach That Outpaces the Competition

There are plenty of startups aiming to tackle AI, cloud, and data security challenges. Companies like 7AI, Reco, Exaforce, and Noma Security have been in the news for their funding rounds and targeted solutions. Still, very few offer the kind of unified coverage that sets Sentra apart.

Most competitors stick to either monitoring SaaS agents or reducing SOC alerts. Sentra does more by providing both agentless multi-cloud DSPM and built-in DDR. This gives organizations visibility, context, and the power to act in one platform. With features like Data Security for AI Agents, Sentra helps enterprises go beyond managing alerts by automating meaningful steps to defend sensitive data everywhere.

Thanks to Our Community and What’s Next

This honor belongs first and foremost to our community: customers breaking new ground in data security, partners building solutions alongside us, and a team with a clear goal to lead the industry.

If you haven’t tried Sentra yet, now’s a great time to see what we can do for your cloud and AI data security program. Find out why we’re at the forefront: schedule a personalized demo or read CRN’s full 2025 list for more insight.

Conclusion

Being named one of CRN’s hottest cybersecurity startups isn’t just a milestone. It pushes us forward toward our vision - data security that truly enables innovation. The market is changing fast, but Sentra’s focus on meaningful security results hasn't wavered.

Thank you to our customers, partners, investors, and team for your ongoing trust and teamwork. As AI and cloud technology shape the future, Sentra is ready to help organizations move confidently, securely, and quickly.

AI Governance Starts With Data Governance: Securing the Training Data and Agents Fuelling GenAI

Generative AI isn’t just transforming products and processes - it’s expanding the entire enterprise risk surface. As C-suite executives and security leaders rush to unlock GenAI’s competitive advantages, a hard truth is clear: effective AI governance depends on solid, end-to-end data governance.

Sensitive data is increasingly used for model training and autonomous agents. If organizations fail to discover, classify, and secure these resources early, they risk privacy breaches, regulatory violations, and reputational damage. To make GenAI safe, compliant, and trustworthy from the start, data governance for generative AI needs to be a top boardroom priority.

Why Data Governance is the Cornerstone of GenAI Trustworthiness and Safety

The opportunities and risks of generative AI depend not only on algorithms, but also on the quality, security, and history of the underlying data. AWS reports that 39% of Chief Data Officers see data cleaning, integration, and storage as the main barriers to GenAI adoption, and 49% of enterprises make data quality improvement a core focus for successful AI projects (AWS Enterprise Strategy - Data Governance). Without strong data governance, sensitive information can end up in training sets, leading to unintentional leaks or model behaviors that break privacy and compliance.

Regulatory requirements, including proposals such as the Generative AI Copyright Disclosure Act, are evolving fast, raising the pressure to document data lineage and make sure unauthorized or non-compliant datasets stay out. In the world of GenAI, governance goes far beyond compliance checklists. It’s essential for building AI that is safe, auditable, and trusted by both regulators and customers.

New Attack Surfaces: Risks From Unsecured Data and Shadow AI Agents

GenAI adoption increases risk. Today, 79% of organizations have already piloted or deployed agentic AI, with many using LLM-powered agents to automate key workflows (Wikipedia - Agentic AI). But if these agents, sometimes functioning as "shadow AI" outside official oversight, access sensitive or unclassified data, the fallout can be severe.

In 2024, over 30% of AI data breaches involved insider threats or accidental disclosure, according to Quinnox Data Governance for AI. Autonomous agents can mistakenly reveal trade secrets, financial records, or customer data, damaging brand trust. The risk multiplies rapidly if sensitive data isn’t properly governed before flowing into GenAI tools. To stop these new threats, organizations need up-to-the-minute insight and control over both data and the agents using it.

Frameworks and Best Practices for Data Governance in GenAI

Leading organizations now follow data governance frameworks that match changing regulations and GenAI's technical complexity. Standards like NIST AI Risk Management Framework (AI RMF) and ISO/IEC 42001:2023 are setting the benchmarks for building auditable, resilient AI programs (Data and AI Governance - Frameworks & Best Practices).

Some of the most effective practices:

- Managing metadata and tracking full data lineage

- Using data access policies based on role and context

- Automating compliance with new AI laws

- Monitoring data integrity and checking for bias

A strong data governance program for generative AI focuses on ongoing data discovery, classification, and policy enforcement - before data or agents meet any AI models. This approach helps lower risk and gives GenAI efforts a solid base of trust.
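
The "before data or agents meet any AI models" step can be pictured as a gate in the training-data pipeline: a dataset is admitted only if it has been classified, has an accountable owner, and carries none of the blocked labels. The sketch below assumes classification results already exist as simple records; the record fields, label names, and blocked set are hypothetical policy choices.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical policy: classification labels that must never reach model training.
BLOCKED_FOR_TRAINING = {"pii", "phi", "payment_card", "credentials"}


@dataclass
class Dataset:
    name: str
    classified: bool                       # has discovery/classification run on it?
    labels: set = field(default_factory=set)
    owner: Optional[str] = None            # accountable owner, useful for lineage


def training_gate(ds: Dataset) -> tuple:
    """Return (allowed, reason) for admitting a dataset into a training corpus."""
    if not ds.classified:
        return False, "not yet classified"
    if ds.owner is None:
        return False, "no accountable owner recorded"
    blocked = ds.labels & BLOCKED_FOR_TRAINING
    if blocked:
        return False, "contains blocked labels: " + ", ".join(sorted(blocked))
    return True, "ok"


if __name__ == "__main__":
    candidates = [
        Dataset("support_tickets", True, {"pii", "product"}, "cx-team"),
        Dataset("product_docs", True, {"public"}, "docs-team"),
        Dataset("new_crm_export", False),
    ]
    for ds in candidates:
        print(ds.name, training_gate(ds))
```

The gate fails closed: data that has not been classified yet is treated as blocked rather than allowed, which is what "before data meets any AI model" implies.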

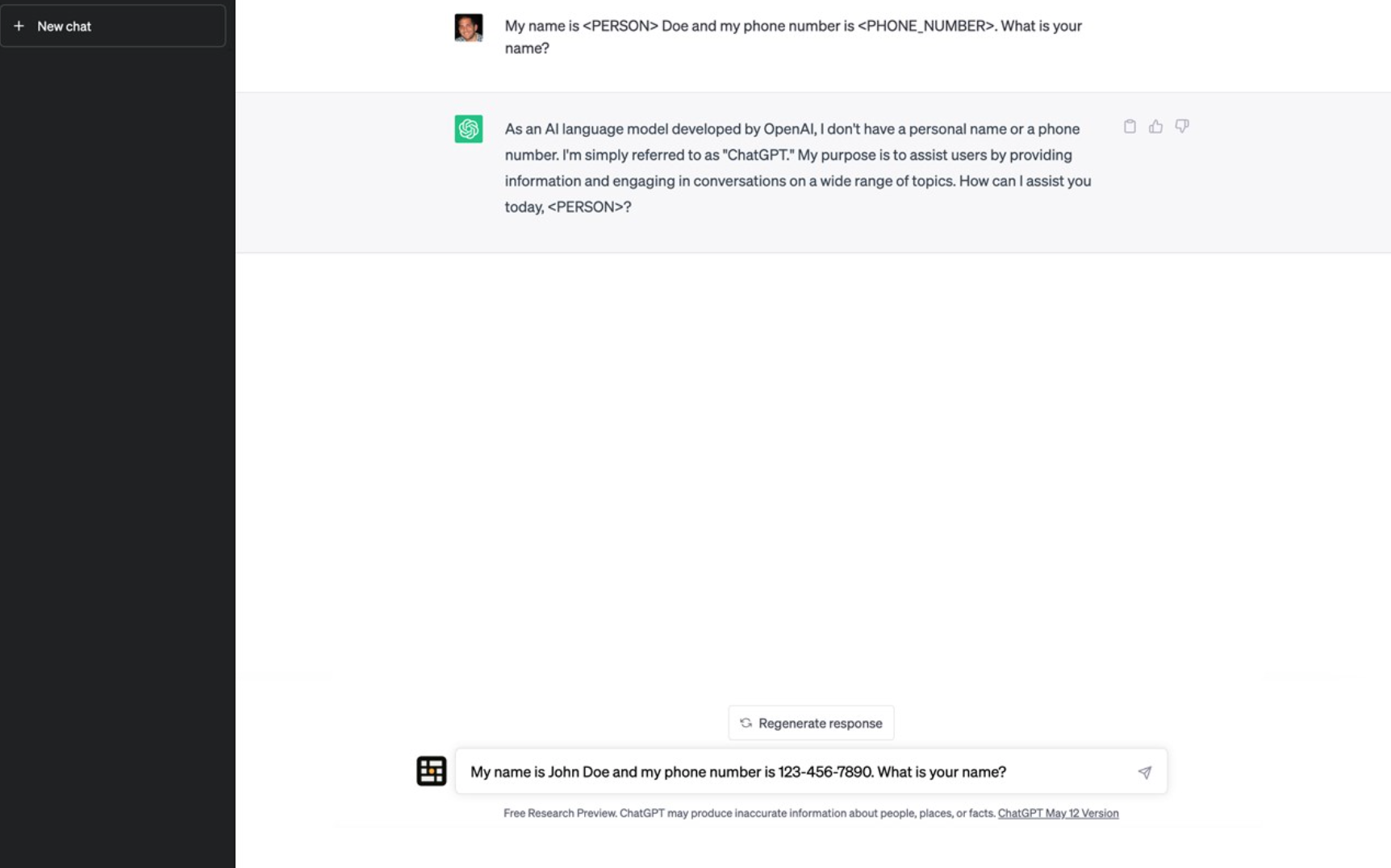

Sentra’s Approach: Proactive Pre-Integration Discovery and Continuous Enforcement

Many tools only secure data after it’s already being used with GenAI applications. This reactive strategy leaves openings for risk. Sentra takes a different path, letting organizations discover, classify, and protect sensitive data sources before they interact with language models or agentic AI.

By using agentless, API-based discovery and classification across multi-cloud and SaaS environments, Sentra delivers immediate visibility and context-aware risk scoring for all enterprise data assets. With automated policies, businesses can mask, encrypt, or restrict data access depending on sensitivity, business requirements, or audit needs. Continuous monitoring tracks which AI agents are accessing data, making granular controls and fast intervention possible. These processes help stop shadow AI, keep unauthorized data out of LLM training, and maintain compliance as rules and business needs shift.
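
A sensitivity-driven policy of this kind can be reduced to a lookup from classification level to action, applied before an AI agent receives a value, with every access logged for later review. The sketch below is a hypothetical illustration covering the mask and restrict actions (encryption is left out); the sensitivity levels, the mapping, the masking rule, and the in-memory log are all assumptions.

```python
from datetime import datetime, timezone

# Hypothetical mapping from sensitivity level to enforcement action.
POLICY = {"public": "allow", "internal": "allow", "confidential": "mask", "restricted": "deny"}

ACCESS_LOG = []  # in-memory stand-in for an audit trail of agent access


def mask(value: str, visible: int = 4) -> str:
    """Replace all but the last `visible` characters with '*'."""
    if len(value) <= visible:
        return "*" * len(value)
    return "*" * (len(value) - visible) + value[-visible:]


def serve_to_agent(agent_id: str, field_name: str, value: str, sensitivity: str):
    """Apply the policy to one field, log the access, and return what the agent
    may see (None when denied). Unknown sensitivity levels are denied by default."""
    action = POLICY.get(sensitivity, "deny")
    ACCESS_LOG.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "agent": agent_id,
        "field": field_name,
        "sensitivity": sensitivity,
        "action": action,
    })
    if action == "allow":
        return value
    if action == "mask":
        return mask(value)
    return None


if __name__ == "__main__":
    print(serve_to_agent("support-bot", "account_number", "9876543210", "confidential"))
    print(serve_to_agent("support-bot", "ssn", "123-45-6789", "restricted"))
    print(ACCESS_LOG[-1]["action"])
```

Defaulting unknown levels to "deny" keeps newly discovered, not-yet-classified fields away from agents until a policy decision exists.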

Guardrails for Responsible AI Growth Across the Enterprise

The future of GenAI depends on how well businesses can innovate while keeping security and compliance intact. As AI regulations become stricter and adoption speeds up, Sentra’s ability to provide ongoing, automated discovery and enforcement at scale is critical. Further reading: AI Automation & Data Security: What You Need To Know.

With Sentra, organizations can:

- Stop unapproved or unchecked data from being used in model training

- Identify shadow AI agents or risky automated actions as they happen

- Support audits with complete data classification

- Meet NIST, ISO, and new global standards with ease

Sentra gives CISOs, CDOs, and executives a proactive, scalable way to adopt GenAI safely, protecting the business before any model training even begins.

AI Governance Starts with Data Governance

AI governance for generative AI starts, and is won or lost, at the data layer. If organizations don’t find, classify, and secure sensitive data first, every other security measure remains reactive and ineffective. As generative AI, agent automation, and regulatory demands rise, a unified data governance strategy isn’t just good practice, it’s an urgent priority. Sentra gives security and business teams real control, making sure GenAI is secure, compliant, and trusted.

US State Privacy Laws 2026: DSPM Compliance Requirements & What You Need to Know

By 2026, American data privacy will look very different as a wave of new state laws redefines what it means to protect sensitive information. Organizations face a regulatory maze: more than 20 states will soon require not only “reasonable security” but also Data Protection Impact Assessments (DPIAs), explicit limits on data collection, and, in some cases, detailed data inventories. These requirements are quickly becoming standard, and ignoring them simply isn’t an option. The risk of penalties and enforcement actions is climbing fast.

But through all these changes, one major question remains: How can any organization comply if it doesn’t even know where its most sensitive data is? Data Security Posture Management (DSPM) has become the solution, making data visibility and automation central for meeting ongoing compliance needs.

Mapping the New Wave of State Privacy Mandates

Several state privacy laws going into effect in 2025 and 2026 are raising the stakes for compliance. Kentucky, Indiana, and Rhode Island’s new laws, effective January 1, 2026, require both security measures and DPIAs for handling high-risk or sensitive data. Minnesota’s law stands out even more: it moves past earlier, vaguer “reasonable” security language and explicitly mandates comprehensive data inventories.

Other key states include Maryland, with strict data minimization rules, and Tennessee, which gives organizations an affirmative defense if they’ve adopted a NIST-aligned privacy program. These requirements mean organizations now need to track what data they collect, know exactly where it’s stored, and show evidence of compliance when asked. If your organization operates in more than one state, keeping up with this web of laws will soon become impossible without dedicated solutions (US consumer privacy laws 2025 update).

Why Data Visibility is Now Foundational to Compliance

To meet DPIA, minimization, and security safeguard rules, you need full visibility into where sensitive or regulated data lives - and how it moves across your environment. Recent privacy laws are moving closer to GDPR-like standards, with DPIAs required not only for biometric data but also for broad categories like targeted advertising and profiling. Minnesota leads with its clear requirement for full data inventories, setting the standard that you can’t prove compliance unless you understand your data (US cybersecurity and data privacy review and outlook 2025).

This shift puts DSPM front and center: you now need ongoing discovery and classification of your entire sensitive data footprint. Without a strong data foundation, organizations will find it hard to complete DPIAs, handle audits, or defend themselves in investigations.
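
At its simplest, the inventory these laws point toward is a continuously refreshed list of data stores, the categories of personal data each one holds, where it lives, and who owns it. The sketch below shows one hypothetical shape for such a record, plus two queries an auditor might ask for: which owners hold sensitive data, and which entries are stale. Field names and category labels are assumptions, not statutory definitions.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class InventoryEntry:
    store: str              # bucket, database, or SaaS workspace
    platform: str           # e.g. "aws", "azure", "m365"
    region: str
    categories: frozenset   # personal-data categories found by classification
    owner: str
    last_scanned: date


# Hypothetical label set; each statute defines "sensitive data" in its own terms.
SENSITIVE = {"health", "biometric", "precise_geolocation", "ssn"}


def sensitive_holdings(inventory):
    """Map each owner to the stores they own that hold any sensitive category."""
    result = {}
    for entry in inventory:
        if entry.categories & SENSITIVE:
            result.setdefault(entry.owner, []).append(entry.store)
    return result


def stale_entries(inventory, today: date, max_age_days: int = 7):
    """Stores not rescanned within `max_age_days`; stale entries are weak evidence."""
    return [e.store for e in inventory if (today - e.last_scanned).days > max_age_days]


if __name__ == "__main__":
    inventory = [
        InventoryEntry("s3://claims-archive", "aws", "us-east-1",
                       frozenset({"health", "email"}), "claims", date(2026, 1, 5)),
        InventoryEntry("crm-prod", "azure", "eastus",
                       frozenset({"email", "phone"}), "sales", date(2025, 12, 1)),
        InventoryEntry("hr-share", "m365", "us",
                       frozenset({"ssn"}), "hr", date(2026, 1, 6)),
    ]
    print(sensitive_holdings(inventory))
    print(stale_entries(inventory, today=date(2026, 1, 7)))
```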

Automation: The Only Viable Path for Assessment and Audit Readiness

State privacy rules are getting more complicated, and many enforcement authorities are shortening or removing 'right-to-cure' periods. That means manual compliance simply won’t keep up. Automation is now the only way to manage compliance as regulations tighten (5 trends to watch: 2025 US data privacy & cybersecurity).

With DSPM and automation, organizations get ongoing discovery, real-time data classification, and instant evidence collection - all required for fast DPIAs and responsive audits. For companies facing regulators or preparing for multi-state oversight, this means you already have the proof and documentation you need. Relying on spreadsheets or one-time assessments at this point only increases your risk.
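
Much of this automation comes down to turning DPIA triggers into checks that run against the inventory on every scan instead of in a spreadsheet. The sketch below flags processing activities that may need a DPIA, using triggers named above (targeted advertising, profiling, and sensitive data such as biometrics). It is a simplified, hypothetical rule set; the exact triggers and definitions differ from state to state.

```python
from dataclasses import dataclass, field

# Simplified, hypothetical triggers drawn from the categories named above;
# each state defines its own triggers and terms precisely.
TRIGGER_PURPOSES = {"targeted_advertising", "profiling"}
SENSITIVE_CATEGORIES = {"biometric", "health", "precise_geolocation"}


@dataclass
class ProcessingActivity:
    name: str
    purposes: set = field(default_factory=set)
    data_categories: set = field(default_factory=set)


def dpia_reasons(activity: ProcessingActivity) -> list:
    """Return the reasons an activity may need a DPIA (empty list if none apply)."""
    reasons = [f"purpose: {p}" for p in sorted(activity.purposes & TRIGGER_PURPOSES)]
    reasons += [f"sensitive data: {c}"
                for c in sorted(activity.data_categories & SENSITIVE_CATEGORIES)]
    return reasons


if __name__ == "__main__":
    activities = [
        ProcessingActivity("retargeting", {"targeted_advertising"}, {"email"}),
        ProcessingActivity("office_access", {"security"}, {"biometric"}),
        ProcessingActivity("newsletter", {"marketing_email"}, {"email"}),
    ]
    for act in activities:
        print(act.name, dpia_reasons(act) or "no DPIA trigger found")
```

Because the reasons are returned rather than a bare yes/no, each flagged activity can become a DPIA work item that already states why it was flagged.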

Sentra: Your Strategic Bridge to Privacy Law Compliance

Sentra’s DSPM platform is built to tackle these expanding privacy law requirements. The agentless platform covers AWS, Azure, GCP, SaaS, and hybrid environments, removing both visibility gaps and the hassle found in older solutions (Sentra: DSPM for compliance use cases).

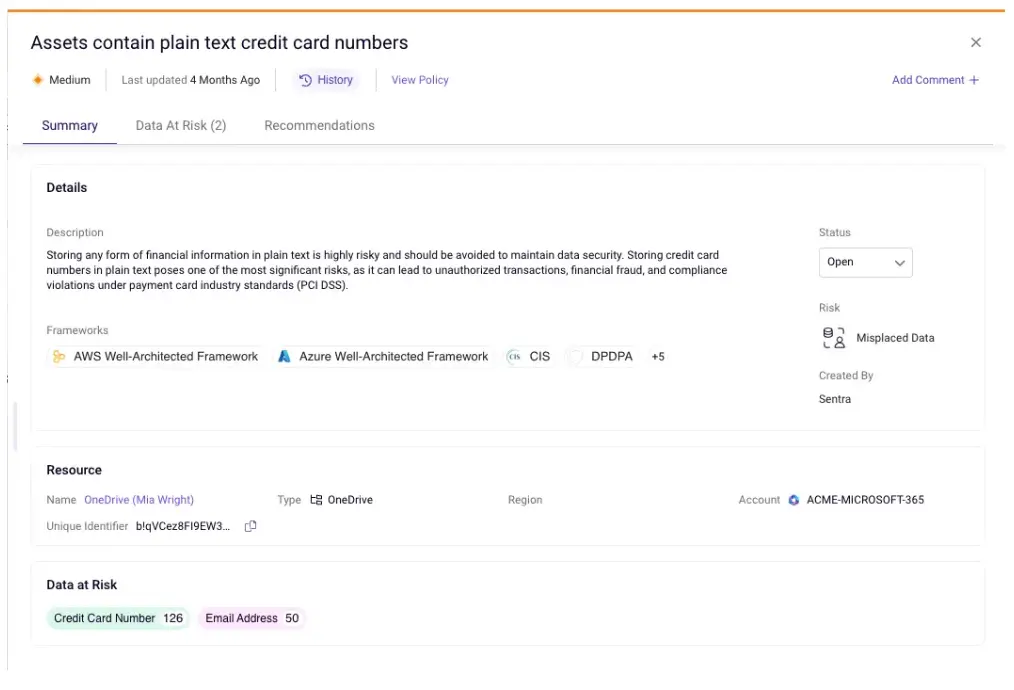

With continuous, automated discovery and data classification, you always know exactly where your sensitive data is, how it moves, and how it’s being protected. Sentra’s integrated Data Detection & Response (DDR) catches and fixes risks or policy violations early, closing gaps before regulators - or attackers - can take advantage (Sensitive data exposure insight). Combined with clear reporting and on-demand audit documentation, Sentra helps you meet new state privacy laws and stay audit-ready, even as your business or data needs change.

Conclusion

The arrival of new state privacy laws in 2025 and 2026 is changing how organizations must handle sensitive data. Security safeguards, DPIAs, minimization, and full inventories are now required - not just nice-to-have.

DSPM is now a compliance must-have. Without complete data visibility and automation, following the web of state rules isn’t difficult - it’s impossible. Sentra’s agentless, multi-cloud platform keeps your organization continuously informed, giving compliance, security, and privacy teams the control they need to keep up with new regulations.

Want to see how your organization stacks up for 2026 laws? Book a DSPM Compliance Readiness Assessment or check out Sentra’s automated DPIA tools today.

Zero Data Movement: The New Data Security Standard that Eliminates Egress Risk

Cloud adoption and the explosion of data have boosted business agility, but they’ve also created new headaches for security teams. As companies move sensitive information into multi-cloud and hybrid environments, old security models start to break down. Shuffling data for scanning and classification adds risk, piles on regulatory complexity, and drives up operational costs.

Zero Data Movement (ZDM) offers a new architectural approach, reshaping how advanced Data Security Posture Management (DSPM) platforms provide visibility, protection, and compliance. This post breaks down what makes ZDM unique, why it matters for security-focused enterprises, and how Sentra’s agentless, scalable design delivers a genuine zero data movement DSPM.

Defining Zero Data Movement Architecture

Zero Data Movement (ZDM) sets a new standard in data security. The premise is straightforward: sensitive data should stay in its original environment for security analysis, monitoring, and enforcement. Older models require copying, exporting, or centralizing data to scan it, while ZDM ensures that all security actions happen directly where data resides.

ZDM removes egress risk, shrinking the attack surface and reducing regulatory exposure. For organizations juggling large cloud deployments and tight data residency rules, ZDM isn’t just an improvement - it’s essential. Groups like the Cloud Security Alliance, along with new privacy regulations, are moving the industry toward designs with built-in privacy and continuous protection.
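
One practical consequence of ZDM is a scheduling rule: a scan job should only run in the same cloud, account, and region as the data it reads, so content never crosses a regional or account boundary. The sketch below expresses that rule as a check over planned scans; the location and plan records are hypothetical, and in a real deployment the locations would come from the cloud provider's own APIs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Location:
    cloud: str
    account: str
    region: str


@dataclass(frozen=True)
class ScanPlan:
    data_store: str
    data_location: Location
    scanner_location: Location


def zero_movement_violations(plans):
    """List plans where the scanner runs outside the data's own cloud, account,
    or region, i.e. plans that would require moving data to scan it."""
    problems = []
    for plan in plans:
        if plan.scanner_location != plan.data_location:
            d, s = plan.data_location, plan.scanner_location
            problems.append(
                f"{plan.data_store}: data in {d.cloud}/{d.account}/{d.region}, "
                f"scanner in {s.cloud}/{s.account}/{s.region}"
            )
    return problems


if __name__ == "__main__":
    eu = Location("aws", "111111111111", "eu-central-1")
    us = Location("aws", "222222222222", "us-east-1")
    plans = [ScanPlan("s3://eu-customers", eu, eu), ScanPlan("s3://eu-backups", eu, us)]
    for problem in zero_movement_violations(plans):
        print(problem)
```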

Risks of Data Movement: Compliance, Cost, and Egress Exposure

Every time data is copied, exported, or streamed out of its native environment, new risks arise. Data movement creates challenges such as:

- Egress risk: Data at rest or in transit outside its original environment increases the risk of a breach, especially because the destination environments may be less secure.

- Compliance and regulatory exposure: Moving data across borders or different clouds can break geo-fencing and privacy controls, leading to potential violations and steep fines.

- Loss of context and control: Scattered data makes it harder to monitor everything, leaving gaps in visibility.

- Rising total cost of ownership (TCO): Scanning and classification can incur heavy cloud compute costs - so efficiency matters. Exporting or storing data, especially shadow data, drives up storage, egress, and compliance costs as well.

As more businesses rely on data, moving it unnecessarily only increases the risk - especially with fast-changing cloud regulations.

Legacy and Competitor Gaps: Why Data Movement Still Happens

Not every security vendor practices true zero data movement, and the differences are notable. Products from Cyera, Securiti, or older platforms still require temporary data exporting or duplication for analysis. This might offer a quick setup, but it exposes users to egress risks, insider threats, and compliance gaps - problems that are worse in regulated fields.

Competitors like Cyera often rely on shortcuts that fall short of ZDM’s requirements. Securiti and similar providers depend on connectors, API snapshots, or central data lakes, each adding potential risk and spreading data further than necessary. With ZDM, security operations like monitoring and classification happen entirely locally, removing the need to trust external storage or aggregation. For more detail, see how data movement drives up risk.

The Business Value of Zero Data Movement DSPM

Zero data movement DSPM changes the equation for businesses:

- Designed for compliance: Data remains within controlled environments, shrinking audit requirements and reducing breach likelihood.

- Lower TCO and better efficiency: Eliminates hidden expenses from extra storage, duplicate assets, and exporting to external platforms.

- Regulatory clarity and privacy: Supports data sovereignty, cross-border rules, and new zero trust frameworks with an egress-free approach.

Sentra’s agentless, cloud-native DSPM provides these benefits by ensuring sensitive data is never moved or copied. Sentra delivers them at scale - across multi-petabyte enterprise environments - without the performance and cost tradeoffs others suffer from. Real scenarios show the results: financial firms keep audit trails without data ever leaving allowed regions. Healthcare providers safeguard PHI at its source. Global SaaS companies secure customer data cost-effectively at scale while meeting regional rules.

Future-Proofing Data Security: ZDM as the New Standard

With data volumes expected to hit 181 zettabytes in 2025, older protection methods that rely on moving data can’t keep up. Zero data movement architecture meets today's security demands and supports zero trust, metadata-driven access, and privacy-first strategies for the future.

Companies wanting to avoid dead ends should pick solutions that offer unified discovery, classification and policy enforcement without egress risk. Sentra’s ZDM architecture makes this possible, allowing organizations to analyze and protect information where it lives, at cloud speed and scale.

Conclusion

Zero Data Movement is more than a technical detail - it's a new architectural standard for any organization serious about risk control, compliance, and efficiency. As data grows and regulations become stricter, the old habits of moving, copying, or centralizing sensitive data will no longer suffice.

Sentra stands out by delivering a zero data movement DSPM platform that’s agentless, real-time, and truly multi-cloud. For security leaders determined to cut egress risk, lower compliance spending, and get ahead on privacy, ZDM is the clear path forward.

Petabyte Scale is a Security Requirement (Not a Feature): The Hidden Cost of Inefficient DSPM

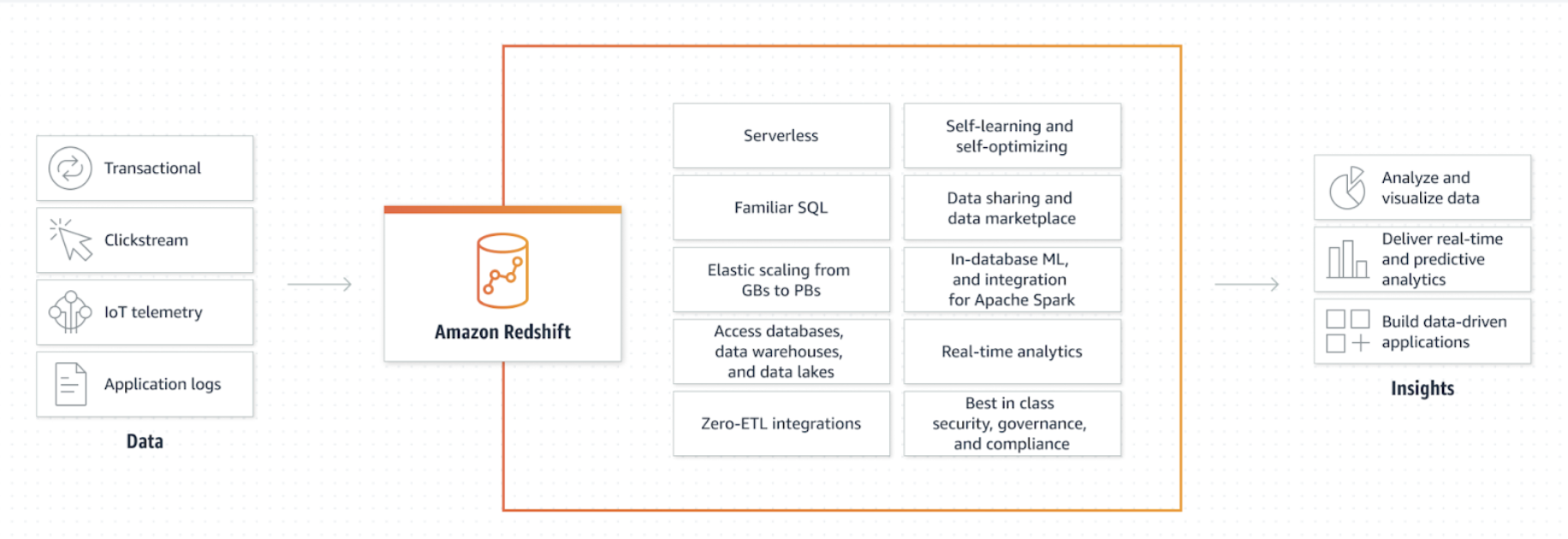

As organizations scramble to secure their sprawling cloud environments and deploy AI, many are facing a stark realization: handling petabyte-scale data is now a basic security requirement. With sensitive information multiplying across multiple clouds, SaaS, and AI-driven platforms, security leaders can't treat true data security at scale as a simple add-on or upgrade.

At the same time, speeding up digital transformation means higher and less visible operational costs for handling this data surge. Older Data Security Posture Management (DSPM) tools, especially those boasting broad, indiscriminate scans as evidence of their scale, are saddling organizations with rising cloud bills, slowdowns, and dangerous gaps in visibility. The costs of securing petabyte-scale data are now economic and technical, demanding efficiency instead of just scale. Sentra solves this with a highly-efficient cloud-native design, delivering 10x lower cloud compute costs.

Why Petabyte Scale is a Security Requirement

Data environments have exploded in both size and complexity. For Fortune 500 companies, fast-growing SaaS providers, and global organizations, data exists across public and hybrid clouds, business units, regions, and a stream of new applications.

Regulations such as GDPR, HIPAA, and SEC rules now demand current data inventories and continuous proof of risk management. In this environment, defending data at the petabyte level is essential. Failing to classify and monitor this data efficiently means risking compliance failures and losing business trust. Security teams are feeling the strain: I meet security teams every day, and too many of them still struggle with data visibility and are already seeing cracks form in their current toolset as data scales.

The Hidden Cost of Inefficient DSPM: API Calls and Egress Bills

How DSPM tools perform scanning and discovery drives the real costs of securing petabyte-scale data. Some vendors highlight their capacity to scan multiple petabytes daily. But here's the reality: scanning everything, record by record, relying on huge numbers of API calls, becomes very expensive as your data estate grows.

Every API call can rack up costs, and all the resulting data egress and compute add up too. Large organizations might spend tens of thousands of dollars each month just to track what’s in their cloud. Even worse, older "full scan" DSPM strategies jam up operations with throttling, delays, and a flood of alerts that bury real risk. These legacy approaches simply don’t scale, and organizations relying on them end up paying more while knowing less.
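To make the request-cost argument concrete, here is a back-of-the-envelope sketch. It assumes one read request per object per scan pass and an illustrative rate of $0.0004 per 1,000 requests passed in as a parameter; the object count, sample rate, and price are assumptions (check your provider's current pricing), and egress and compute charges are deliberately left out.

```python
def request_cost_usd(num_objects: int, price_per_1000_requests: float,
                     requests_per_object: int = 1) -> float:
    """Estimate request charges for reading every object once.

    Assumes a flat per-request price; real bills add egress, compute,
    and tiered pricing, which this sketch ignores.
    """
    total_requests = num_objects * requests_per_object
    return total_requests / 1000 * price_per_1000_requests


def sampled_cost_usd(num_objects: int, sample_rate: float,
                     price_per_1000_requests: float) -> float:
    """Same estimate when only a fraction of objects is actually read."""
    sampled_objects = int(num_objects * sample_rate)
    return request_cost_usd(sampled_objects, price_per_1000_requests)


# Hypothetical estate: 10 billion objects at an assumed $0.0004 per 1,000 requests.
full = request_cost_usd(10_000_000_000, 0.0004)
sampled = sampled_cost_usd(10_000_000_000, 0.05, 0.0004)
print(f"full scan: ${full:,.0f} per pass, 5% sample: ${sampled:,.0f} per pass")
```

The per-pass figure matters less than the multiplier: a tool that repeats full passes daily pays it every day, while sampling shrinks the request count in direct proportion to the sample rate.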

Cyera’s "Petabyte Scale" Claims: At What Cloud Cost?

Cyera promotes its tool as an AI-native, agentless DSPM that can scan as much as 2 petabytes daily. While that's an impressive technical achievement, a strategy of scanning everything leads directly to massive cloud infrastructure costs: frequent API calls, heavy egress, and big bills from AWS, Azure, and GCP.

At scale, these charges don’t just appear on invoices; they can stall adoption and limit security’s effectiveness. Cloud operations teams face API throttling, slow results, and a surge in remediation tickets as risks go unfiltered. In these fast-paced environments, telling a real threat apart from harmless data comes down to speed. The Bedrock Security blog points out how inefficient setups buckle under this weight, leaving teams with lagging visibility and more operational headaches.

Sentra’s 10x Efficiency: Optimized Scanning for Real-World Scale

Sentra takes a different route to managing the cost of securing petabyte-scale data. It combines agentless discovery with scanning guided by context and metadata, using pattern recognition and an AI-driven clustering algorithm designed to detect machine-generated content such as log files, invoices, and similar data types. By intelligently sampling data within each cluster, Sentra scans efficiently while keeping scanning costs down.
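The general idea of clustering similar objects and sampling within each cluster can be illustrated with a simple stand-in. This sketch groups object keys by a normalized path pattern (digit runs replaced with a placeholder), not the AI-driven clustering described above; the helper names and the per-cluster sample size of 3 are hypothetical choices for illustration.

```python
import random
import re
from collections import defaultdict


def path_pattern(key: str) -> str:
    """Collapse digit runs so that e.g. logs/2024/05/app-17.log and
    logs/2024/06/app-18.log fall into the same cluster."""
    return re.sub(r"\d+", "<N>", key)


def cluster_keys(keys: list[str]) -> dict[str, list[str]]:
    """Group object keys that share a normalized path pattern."""
    clusters: dict[str, list[str]] = defaultdict(list)
    for key in keys:
        clusters[path_pattern(key)].append(key)
    return dict(clusters)


def sample_clusters(clusters: dict[str, list[str]], per_cluster: int = 3,
                    seed: int = 0) -> dict[str, list[str]]:
    """Pick up to `per_cluster` members of each cluster to classify.
    Extrapolating the samples' labels to the rest of the cluster is left
    to a later step and is not implemented here."""
    rng = random.Random(seed)
    return {
        pattern: rng.sample(members, min(per_cluster, len(members)))
        for pattern, members in clusters.items()
    }


keys = [f"logs/2024/{m:02d}/app-{i}.log" for m in range(1, 13) for i in range(100)]
keys += ["finance/invoices/inv-001.pdf", "finance/invoices/inv-002.pdf", "hr/policy.docx"]
clusters = cluster_keys(keys)
samples = sample_clusters(clusters)
print(len(keys), "objects ->", len(clusters), "clusters ->",
      sum(len(s) for s in samples.values()), "objects to scan")
```

Here 1,200 near-identical log files collapse into a single cluster, so only a handful of objects need deep inspection; the trade-off is that a sensitive outlier inside a large cluster can be missed if it is not sampled.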

This approach lets scanning be prioritized by risk and business value instead of wasting time and money rescanning the same data over and over. It skips unnecessary API calls, lowers egress, and keeps cloud bills in check.

Large organizations gain a 10x efficiency edge: quicker classification of data, instant visibility into actual threats, lower operational expenses, and less demand on the network. By focusing attention only where it matters, Sentra matches data security posture management to the demands of current cloud growth and regulatory requirements.

This makes it possible for organizations to hit regulatory and audit targets without watching expenses spiral or opening up security gaps.

Sentra offers four sampling levels (Quick, the default; Moderate; Thorough; and Full), allowing customers to tailor their scanning strategy to balance cost and accuracy. For example, a highly regulated environment can be configured for a full scan, while less regulated environments can use more efficient sampling. Petabyte-scale security then gives users complete control of their data estate and becomes operationally and financially sustainable, rather than a technical milestone with a hidden cost.
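A scan policy that maps environments to the four sampling levels named above might look like the sketch below. Only the level names come from the post; the sample fractions, the regulatory tags, and the `choose_level` helper are hypothetical, chosen to illustrate the cost/accuracy trade-off, and are not Sentra's configuration format.

```python
from enum import Enum


class SamplingLevel(Enum):
    # Fraction of each cluster to read; these values are illustrative assumptions.
    QUICK = 0.01
    MODERATE = 0.05
    THOROUGH = 0.25
    FULL = 1.0


# Hypothetical mapping from a data store's regulatory tag to a sampling level.
POLICY = {
    "pci": SamplingLevel.FULL,
    "hipaa": SamplingLevel.FULL,
    "internal": SamplingLevel.MODERATE,
}


def choose_level(tags: set[str]) -> SamplingLevel:
    """Pick the most thorough level required by any tag; default to QUICK."""
    levels = [POLICY[t] for t in tags if t in POLICY]
    return max(levels, key=lambda lvl: lvl.value, default=SamplingLevel.QUICK)


def objects_to_read(total_objects: int, level: SamplingLevel) -> int:
    """How many objects a pass at this level reads (at least one if any exist)."""
    if total_objects == 0:
        return 0
    return max(1, round(total_objects * level.value))


print(choose_level({"pci", "internal"}).name)  # FULL
```

Taking the strictest level across tags is a conservative design choice: a store holding both internal and PCI data is scanned as PCI.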

Efficiency is Non-Negotiable

Fortune 500 companies and digital-first organizations can’t treat efficiency as optional. Inefficient DSPM tools pile on costs, drain resources, and let vulnerabilities slip through, turning their security posture into a liability once scale becomes a factor. Sentra’s platform shows that efficiency is security: with targeted scanning, real context, and unified detection and response, organizations gain clarity and compliance while holding down expenses.

Don’t let your data protection approach crumble under petabyte-scale pressure. See how Sentra can reduce costs and keep essential data secure, before you end up responding to breaches or audit failures.

Conclusion

Securing data at the petabyte level isn't some future aspiration - it's the standard for enterprises right now. Treating it as a secondary feature isn’t just shortsighted; it puts your company at risk, financially and operationally.

The right DSPM architecture brings efficiency, not just raw scale. Sentra delivers real-time, context-rich security posture with far greater efficiency, so your protection and your cloud spending can keep up with your growing business. Security needs to grow along with scale. Rising costs and new risks shouldn’t grow right alongside it.

Want to see how your current petabyte security posture compares? Schedule a demo and see Sentra’s efficiency for yourself.

<blogcta-big>

How Sentra Uncovers Sensitive Data Hidden in Atlassian Products


Atlassian tools such as Jira and Confluence are the beating heart of software development and IT operations. They power everything from sprint planning to debugging production issues. But behind their convenience lies a less visible problem: these collaboration platforms quietly accumulate vast amounts of sensitive data, often over years, that security teams can’t easily monitor or control.

The Problem: Sensitive Data Hidden in Plain Sight

Many organizations rely on Jira to manage tickets, track incidents, and communicate across teams. But within those tickets and attachments lies a goldmine of sensitive information:

- Credentials and access keys to different environments.

- Intellectual property, including code snippets and architecture diagrams.

- Production data used to reproduce bugs or validate fixes — often in violation of data-handling regulations.

- Real customer records shared for troubleshooting purposes.

This accumulation isn’t deliberate; it’s a natural byproduct of collaboration. However, it results in a long-tail exposure risk - historical tickets that remain accessible to anyone with permissions.

The Insider Threat Dimension

Because Jira and Confluence retain years of project history, employees and contractors may have access to data they no longer need. In some organizations, teams include offshore or external contributors, multiplying the risk surface. Any of these users could intentionally or accidentally copy or export sensitive content at any moment.

Why Sensitive Data Is So Hard to Find

Sensitive data in Atlassian products hides across three levels, each requiring a different detection approach:

- Structured Data (Records): Every ticket or page includes structured fields - reporter, status, labels, priority. These schemas are customizable, meaning sensitive fields can appear unpredictably. Security teams rarely have visibility or consistent metadata across instances.

- Unstructured Data (Descriptions & Discussions): Free-text fields are where developers collaborate — and where secrets often leak. Comments can contain access tokens, internal URLs, or step-by-step guides that expose system details.

- Unstructured Data (Attachments): Screenshots, log files, spreadsheets, code exports, or even database snapshots are commonly attached to tickets. These files may contain credentials, customer PII, or proprietary logic, yet they are rarely scanned or governed.
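As a rough illustration of why free-text fields and attachments need content inspection rather than metadata alone, the sketch below runs a few well-known secret patterns over ticket text. The patterns are simplified (AWS access key IDs start with `AKIA` followed by 16 uppercase letters or digits; PEM private keys open with a `-----BEGIN ... PRIVATE KEY-----` header), the ticket dictionary layout is hypothetical, and the sample key is fake. A production classifier would add context and validation to cut false positives.

```python
import re

# Simplified, illustrative patterns; real detectors use many more rules plus context.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_block": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    "password_assignment": re.compile(r"(?i)\bpassword\s*[:=]\s*\S+"),
}


def scan_text(text: str) -> list[str]:
    """Return the names of patterns that match anywhere in `text`."""
    return [name for name, pattern in SECRET_PATTERNS.items() if pattern.search(text)]


def scan_ticket(ticket: dict) -> dict[str, list[str]]:
    """Scan the free-text parts of a ticket. The layout ('description',
    'comments', and extracted 'attachment_text') is hypothetical."""
    parts = [("description", ticket.get("description", ""))]
    parts += [(f"comment[{i}]", c) for i, c in enumerate(ticket.get("comments", []))]
    parts += [(f"attachment:{name}", body)
              for name, body in ticket.get("attachment_text", {}).items()]
    findings = {}
    for location, text in parts:
        hits = scan_text(text)
        if hits:
            findings[location] = hits
    return findings


ticket = {
    "key": "OPS-123",
    "description": "Prod login fails after deploy",
    "comments": ["Try with password: hunter2", "fixed, thanks"],
    "attachment_text": {"app.log": "using key AKIAABCDEFGHIJKLMNOP"},
}
print(scan_ticket(ticket))
```

Note that the ticket's structured fields reveal nothing here; both findings sit in a comment and an attachment, which is exactly where metadata-only tools stop looking.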


The Challenge for Security Teams

Traditional security tools were never designed for this kind of data sprawl. Atlassian environments can contain millions of tickets and pages, spread across different projects and permissions. Manually auditing this data is impractical. Even modern DLP tools struggle to analyze the context of free text or attachments embedded within these platforms.

Compliance teams face an uphill battle: GDPR, HIPAA, and SOC 2 all require knowing where sensitive data resides. Yet in most Atlassian instances, that visibility is nonexistent.

How Sentra Solves the Problem

Sentra takes a different approach. Its cloud-native data security platform discovers and classifies sensitive data wherever it lives - across SaaS applications, cloud storage, and on-prem environments. When connected to your Atlassian environment, Sentra delivers visibility and control across every layer of Jira and Confluence.

Comprehensive Coverage

Sentra delivers consistent data governance across SaaS and cloud-native environments. When connected to Atlassian Cloud, Sentra’s discovery engine scans Jira and Confluence content to uncover sensitive information embedded in tickets, pages, and attachments, ensuring full visibility without impacting performance.

In addition, Sentra’s flexible architecture can be extended to support hybrid environments, providing organizations with a unified view of sensitive data across diverse deployment models.

AI-Based Classification

Using advanced AI models, Sentra classifies data across all three tiers:

- Structured metadata, identifying risky fields and tags.

- Unstructured text, analyzing ticket descriptions, comments, and discussions for credentials, PII, or regulated data.

- Attachments, scanning files like logs or database snapshots for hidden secrets.

This contextual understanding distinguishes between harmless content and genuine exposure, reducing false positives.

Full Lifecycle Scanning

Sentra doesn’t just look at new tickets; it scans the entire historical archive to detect legacy exposure while continuously monitoring for ongoing changes. This dual approach helps security teams remediate existing risks and prevent future leaks.
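One common way to combine a one-time scan of the historical archive with ongoing monitoring is a watermark: backfill everything once, record the latest update timestamp seen, then fetch only items changed after it on later runs. The sketch below shows that general pattern against an in-memory list; `fetch_updated_since` and `classify` are hypothetical stand-ins for a real content API and classifier, and this is not a description of how Sentra schedules its scans.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Callable, Iterable, Optional


@dataclass
class Item:
    key: str
    updated: datetime
    text: str


class IncrementalScanner:
    """Backfill on the first run, then scan only items newer than the watermark."""

    def __init__(self,
                 fetch_updated_since: Callable[[Optional[datetime]], Iterable[Item]],
                 classify: Callable[[str], bool]):
        self.fetch_updated_since = fetch_updated_since
        self.classify = classify
        self.watermark: Optional[datetime] = None  # None means "never scanned"

    def run(self) -> list[str]:
        flagged = []
        newest = self.watermark
        for item in self.fetch_updated_since(self.watermark):
            if self.classify(item.text):
                flagged.append(item.key)
            if newest is None or item.updated > newest:
                newest = item.updated
        self.watermark = newest
        return flagged


# In-memory stand-in for a ticket store.
store = [
    Item("OPS-1", datetime(2021, 3, 1), "password: old-secret"),
    Item("OPS-2", datetime(2024, 6, 1), "routine update"),
]


def fetch(since: Optional[datetime]) -> list[Item]:
    return [i for i in store if since is None or i.updated > since]


scanner = IncrementalScanner(fetch, classify=lambda t: "password" in t)
print(scanner.run())  # backfill: finds the years-old OPS-1
store.append(Item("OPS-3", datetime(2025, 1, 15), "password: new-secret"))
print(scanner.run())  # incremental: only OPS-3 is fetched
```

The strict "newer than" comparison is a simplification; items updated at exactly the watermark timestamp could be skipped, so real pipelines usually re-read a small overlap window.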

The Real-World Impact

Organizations using Sentra gain the ability to:

- Prevent accidental leaks of credentials or production data in collaboration tools.

- Enforce compliance by mapping sensitive data across Jira and Confluence.

- Empower DevOps and security teams to collaborate safely without stifling productivity.

Conclusion

Collaboration is essential, but it should never compromise data security. Atlassian products enable innovation and speed, yet they also hold years of unmonitored information. Sentra bridges that gap by giving organizations the visibility and intelligence to discover, classify, and protect sensitive data wherever it lives, even in Jira and Confluence.

<blogcta-big>

Unstructured Data Is 80% of Your Risk: Why DSPM 1.0 Vendors, Like Varonis and Cyera, Fail to Protect It at Petabyte Scale


Unstructured data is the fastest-growing, least-governed, and most dangerous class of enterprise data. Emails, Slack messages, PDFs, screenshots, presentations, code repositories, logs, and the endless stream of GenAI-generated content — this is where the real risk lives.

The unstructured data dilemma is this: 80% of your organization’s data is essentially invisible to your current security tools, and the volume is climbing by up to 65% each year. This isn’t hypothetical; it’s the reality for enterprises as unstructured data spreads across cloud and SaaS platforms. Yet most Data Security Posture Management (DSPM) solutions, often called DSPM 1.0, were never built to handle this explosion at petabyte scale. Legacy vendors and first-generation players like Cyera, in particular, were not designed for unstructured data at scale: their architectures, classification engines, and scanning models break under real enterprise load.

Looking ahead to 2026, unstructured data security risk stands out as the single largest blind spot in enterprise security. If overlooked, it won’t just cause compliance headaches and soaring breach costs - it could put your organization in the headlines for all the wrong reasons.

The 80% Problem: Unstructured Data Dominates Your Risk

The Scale You Can’t Ignore

- Over 80% of enterprise data is unstructured.

- Unstructured data is growing 55-65% per year; by 2025, the world will store more than 180 zettabytes of it.

- 95% of organizations say unstructured data management is a critical challenge, but less than 40% of data security budgets address this high-risk area.

Unstructured data is everywhere: cloud object stores, SaaS apps, collaboration tools, and legacy file shares. Unlike structured data in databases, it often lacks consistent metadata, access controls, or even basic visibility. This “dark data” is behind countless breaches, from accidental file exposures and overshared documents to sensitive AI training datasets left unmonitored.

The Business Impact

- The average breach now costs $4-4.9M, with unstructured data often at the center.

- Poor data quality, mostly from unstructured sources, costs the U.S. economy $3.1 trillion each year.

- More than half of organizations report at least one non-compliance incident annually, with average costs topping $1M.

The takeaway: unstructured data isn’t just a storage problem; it’s a security and compliance risk.

Why DSPM 1.0 Fails: The Blind Spots of Legacy Approaches

Traditional Tools Fall Short in Cloud-First, Petabyte-Scale Environments

Legacy DSPM and DCAP solutions, such as Varonis or Netwrix, were built for an era when data lived on-premises, followed predictable structures, and grew at a manageable pace.

In today’s cloud-first reality, their limitations have become impossible to ignore:

- Discovery Gaps: Agent-based scanning can’t keep up with sprawling, constantly changing cloud and SaaS environments. Shadow and dark data across platforms like Google Drive, Dropbox, Slack, and AWS S3 often go unseen.

- Performance Limits: Once environments exceed 100 TB, and especially as they reach petabyte scale, these tools slow dramatically or miss data entirely.

- Manual Classification: Most legacy tools rely on static pattern matching and keyword rules, causing them to miss sensitive information hidden in natural language, code, images, or unconventional file formats.

- Limited Automation: They generate alerts but offer little or no automated remediation, leaving security teams overwhelmed and forcing manual cleanup.

- Siloed Coverage: Solutions designed for on-premises or single-cloud deployments create dangerous blind spots as organizations shift to multi-cloud and hybrid architectures.

Example: Collaboration App Exposure

A global enterprise recently discovered that thousands of highly sensitive files (contracts, intellectual property, and PII) had been unintentionally shared with “anyone with the link” inside a cloud collaboration platform. Their legacy DSPM tool failed to identify the exposure because it couldn’t scan within the app or detect real-time sharing changes.

Even emerging DSPM tools often rely on pattern matching or LLM-based scanning, and these approaches also fail, for three reasons:

- Inaccuracy at scale: LLMs hallucinate, mislabel, and require enormous compute.

- Cost blow-ups: Vendors pass massive cloud bills back to customers or incur inordinate compute cost.

- Architectural limitations: Without clustering and elastic scaling, large datasets overwhelm the system.

This is exactly where Cyera and legacy tools struggle - and where Sentra’s SLM-powered classifier thrives with >99% accuracy at a fraction of the cost.

The New Mandate: Securing Unstructured Data in 2026 and Beyond

GenAI and stricter privacy laws (GDPR, CCPA, HIPAA) have raised the stakes for unstructured data security. Gartner now recommends Data Access Governance (DAG) and AI-driven classification to reduce oversharing and prepare for AI-centric workloads.

What Modern Security Leaders Need

- Agentless, Real-Time Discovery: No deployment hassles, continuous visibility, and coverage for unstructured data stores no matter where they live.

- Petabyte-Scale Performance: Scan, classify, and risk-score all data, everywhere it lives.

- AI-Driven Deep Classification: Use of natural language processing (NLP), Domain-specific Small Language Models (SLMs), and context analysis for every unstructured format.

- Automated Remediation: Playbooks that fix exposures, govern permissions, and ensure compliance without manual work.

- Multi-Cloud & SaaS Coverage: Security that follows your data, wherever it goes.

Sentra: Turning the 80% Blind Spot into a Competitive Advantage

Sentra was built specifically to address the risks of unstructured data in 2026 and beyond. There are nuances involved in solving this. Selecting an appropriate solution is key to a sustainable approach. Here’s what sets Sentra apart:

- Agentless Discovery Across All Environments: Instantly scans and classifies unstructured data across AWS, Azure, Google, M365, Dropbox, legacy file shares, and more - no agents required, no blind spots left behind.

- Petabyte-Tested Performance: Designed for Fortune 500 scale, Sentra keeps speed and accuracy high across petabytes, not just terabytes.

- AI-Powered Deep Classification: Our platform uses advanced NLP, SLMs, and context-aware algorithms to classify, label, and risk-score every file - including code, images, and AI training data, not just structured fields.

- Continuous, Context-Rich Visibility: Real-time risk scoring, identity and access mapping, and automated data lineage show not just where data lives, but who can access it and how it’s used.

- Automated Remediation and Orchestration: Sentra goes beyond alerts. Built-in playbooks fix permissions, restrict sharing, and enforce policies within seconds.

- Compliance-First, Audit-Ready: Quickly spot compliance gaps, generate audit trails, and reduce regulatory risk and reporting costs.

During a recent deployment with a global financial services company, Sentra uncovered 40% more exposed sensitive files than their previous DSPM tool. Automated remediation covered over 10 million documents across three clouds, cutting manual investigation time by 80%.

Actionable Takeaways for Security Leaders

1. Put Unstructured Data at the Center of Your 2026 Security Plan: Make sure your DSPM strategy covers all data, especially “dark” and shadow data in SaaS, object stores, and collaboration platforms.

2. Choose Agentless, AI-Driven Discovery: Legacy, agent-based tools can’t keep up. And underperforming emerging tools may not adequately scale. Look for continuous, automated scanning and classification that scales with your data.

3. Automate Remediation Workflows: Visibility is just the start; your platform should fix exposures and enforce policies in real time.

4. Adopt Multi-Cloud, SaaS-Agnostic Solutions: Your data is everywhere, and your security should be too. Ensure your solution supports all of your unstructured data repositories.

5. Make Compliance Proactive: Use real-time risk scoring and automated reporting to stay ahead of auditors and regulators.

Conclusion: Ready for the 80% Challenge?

With petabyte-scale, cloud-first data, ignoring unstructured data risk is no longer an option. Traditional DSPM tools can’t keep up, leaving most of your data - and your business - vulnerable. Sentra’s agentless, AI-powered platform closes this gap, delivering the discovery, classification, and automated response you need to turn your biggest blind spot into your strongest defense. See how Sentra uncovers your hidden risk - book an instant demo today.

Don’t let unstructured data be your organization’s Achilles’ heel. With Sentra, enterprises finally have a way to secure the data that matters most.

<blogcta-big>

Third-Party OAuth Apps Are the New Shadow Data Risk: Lessons from the Gainsight/Salesforce Incident


The recent exposure of customer data through a compromised Gainsight integration within Salesforce environments is more than an isolated event - it’s a sign of a rapidly evolving class of SaaS supply-chain threats. Even trusted AppExchange partners can inadvertently create access pathways that attackers exploit, especially when OAuth tokens and machine-to-machine connections are involved. This post explores what happened, why today’s security tooling cannot fully address this scenario, and how data-centric visibility and identity governance can meaningfully reduce the blast radius of similar breaches.

A Recap of the Incident

In this case, attackers obtained sensitive credentials tied to a Gainsight integration used by multiple enterprises. Those credentials allowed adversaries to generate valid OAuth tokens and access customer Salesforce orgs, in some cases with extensive read capabilities. Neither Salesforce nor Gainsight intentionally misconfigured their systems. This was not a product flaw in either platform. Instead, the incident illustrates how deeply interconnected SaaS environments have become and how the security of one integration can impact many downstream customers.

Understanding the Kill Chain: From Stolen Secrets to Salesforce Lateral Movement

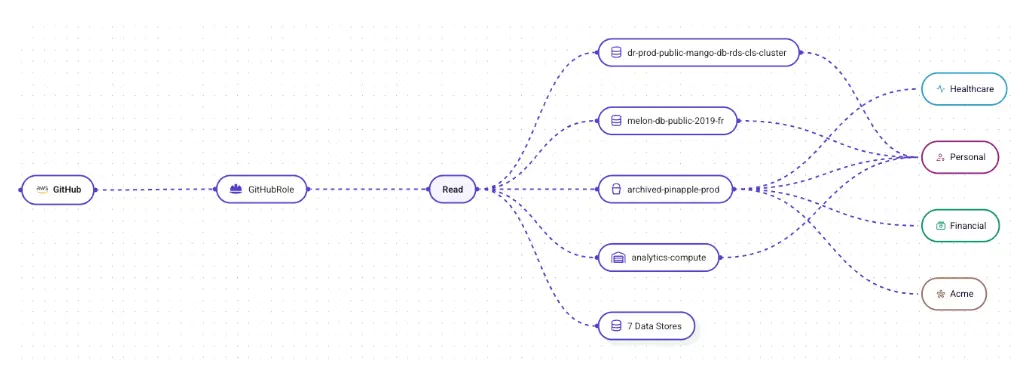

The attackers’ pathway followed a pattern increasingly common in SaaS-based attacks. It began with the theft of secrets: likely API keys, OAuth client secrets, or other credentials that often end up buried in repositories, CI/CD logs, or overlooked storage locations. Once in hand, these secrets enabled the attackers to generate long-lived OAuth tokens, which are designed for application-level access and operate outside MFA or user-based access controls.

What makes OAuth tokens particularly powerful is that they inherit whatever permissions the connected app holds. If an integration has broad read access, which many do for convenience or legacy reasons, an attacker who compromises its token suddenly gains the same level of visibility. Inside Salesforce, this enabled lateral movement across objects, records, and reporting surfaces far beyond the intended scope of the original integration. The entire kill chain was essentially a progression from a single weakly-protected secret to high-value data access across multiple Salesforce tenants.

Why Traditional SaaS Security Tools Missed This

Incident response teams quickly learned what many organizations are now realizing: traditional CASBs and CSPMs don’t provide the level of identity-to-data context necessary to detect or prevent OAuth-driven supply-chain attacks.

CASBs primarily analyze user behavior and endpoint connections, but OAuth apps are “non-human identities” - they don’t log in through browsers or trigger interactive events. CSPMs, in contrast, focus on cloud misconfigurations and posture, but they don’t understand the fine-grained data models of SaaS platforms like Salesforce. What was missing in this incident was visibility into how much sensitive data the Gainsight connector could access and whether the privileges it held were appropriate or excessive. Without that context, organizations had no meaningful way to spot the risk until the compromise became public.

Sentra Helps Prevent and Contain This Attack Pattern

Sentra’s approach is fundamentally different because it starts with data: what exists, where it resides, who or what can access it, and whether that access is appropriate. Rather than treating Salesforce or other SaaS platforms as black boxes, Sentra maps the data structures inside them, identifies sensitive records, and correlates that information with identity permissions including third-party apps, machine identities, and OAuth sessions.

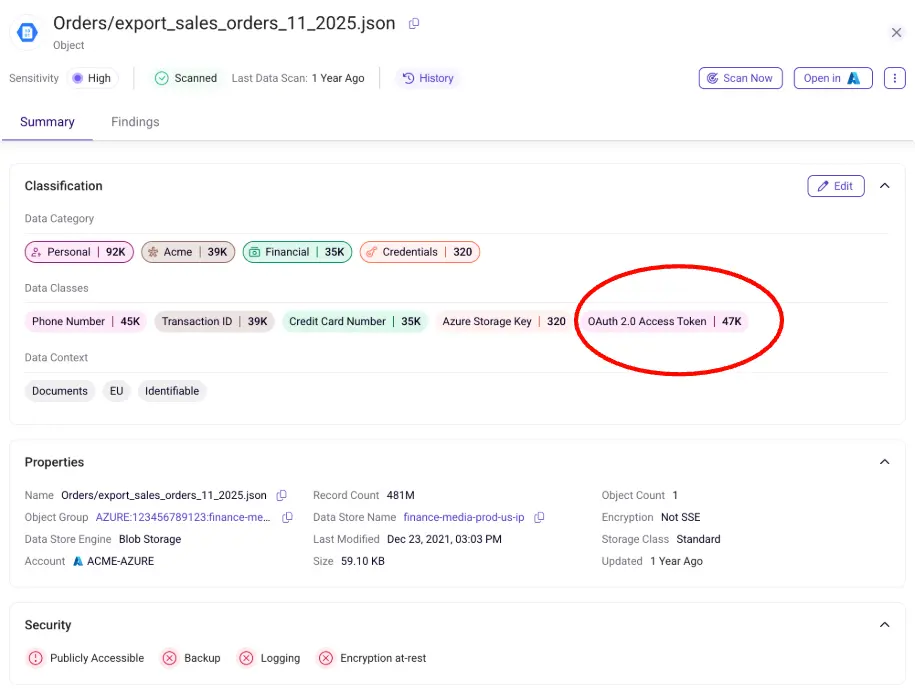

One key pillar of Sentra’s value lies in its DSPM capabilities. The platform identifies sensitive data across all repositories, including cloud storage, SaaS environments, data warehouses, code repositories, collaboration platforms, and even on-prem file systems. Because Sentra also detects secrets such as API keys, OAuth credentials, private keys, and authentication tokens across these environments, it becomes possible to catch compromised or improperly stored secrets before an attacker ever uses them to access a SaaS platform.

Another area where this becomes critical is the detection of over-privileged connected apps. Sentra continuously evaluates the scopes and permissions granted to integrations like Gainsight, identifying when either an app or an identity holds more access than its business purpose requires. This type of analysis would have revealed that a compromised integrated app could see far more data than necessary, providing early signals of elevated risk long before an attacker exploited it.
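The over-privilege check described above amounts to comparing what an integration can reach with what it actually needs. A minimal sketch, assuming you already have an inventory of each connected app's granted scopes, the scopes its business purpose requires, and which data objects each scope exposes. The app name, scope names, and sensitivity labels are hypothetical and do not reflect Salesforce's or Sentra's actual data model.

```python
from dataclasses import dataclass


@dataclass
class ConnectedApp:
    name: str
    granted_scopes: set[str]
    required_scopes: set[str]


# Hypothetical mapping from a scope to the data objects it exposes.
SCOPE_OBJECTS = {
    "read:accounts": {"Account"},
    "read:contacts": {"Contact"},
    "read:cases": {"Case"},
    "read:all": {"Account", "Contact", "Case", "Opportunity"},
}
SENSITIVE_OBJECTS = {"Contact", "Case"}


def reachable(scopes: set[str]) -> set[str]:
    """Union of data objects exposed by a set of scopes."""
    objects: set[str] = set()
    for scope in scopes:
        objects |= SCOPE_OBJECTS.get(scope, set())
    return objects


def excess_sensitive_access(app: ConnectedApp) -> set[str]:
    """Sensitive objects the app can reach but its purpose does not require."""
    extra = reachable(app.granted_scopes) - reachable(app.required_scopes)
    return extra & SENSITIVE_OBJECTS


app = ConnectedApp("customer-success-sync", {"read:all"}, {"read:accounts"})
print(sorted(excess_sensitive_access(app)))
```

A non-empty result is exactly the "blast radius" an attacker inherits by stealing that app's token, which is why it is worth surfacing before any compromise.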

Sentra further tracks the health and behavior of non-human identities. Service accounts and connectors often rely on long-lived credentials that are rarely rotated and may remain active long after the responsible team has changed. Sentra identifies these stale or overly permissive identities and highlights when their behavior deviates from historical norms. In the context of this incident type, that means detecting when a connector suddenly begins accessing objects it never touched before or when large volumes of data begin flowing to unexpected locations or IP ranges.
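The stale-credential part of this can be expressed as a simple policy check. The sketch flags non-human identities whose credential has not been rotated within a maximum age or has not been used within an idle window; the 90-day and 30-day thresholds and the record fields are assumptions for illustration, not values recommended by Sentra or any standard.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Iterable, Optional


@dataclass
class ServiceCredential:
    identity: str
    last_rotated: datetime
    last_used: Optional[datetime]  # None if never observed in use


def stale_findings(creds: Iterable[ServiceCredential], now: datetime,
                   max_age: timedelta = timedelta(days=90),
                   max_idle: timedelta = timedelta(days=30)) -> dict[str, list[str]]:
    """Return identity -> reasons it looks stale (thresholds are illustrative)."""
    findings: dict[str, list[str]] = {}
    for c in creds:
        reasons = []
        if now - c.last_rotated > max_age:
            reasons.append("not rotated")
        if c.last_used is None or now - c.last_used > max_idle:
            reasons.append("idle")
        if reasons:
            findings[c.identity] = reasons
    return findings


now = datetime(2025, 11, 1)
creds = [
    ServiceCredential("crm-connector", datetime(2023, 1, 10), datetime(2025, 10, 31)),
    ServiceCredential("old-etl-job", datetime(2025, 9, 1), None),
    ServiceCredential("billing-sync", datetime(2025, 10, 1), datetime(2025, 10, 30)),
]
print(stale_findings(creds, now))
```

The "crm-connector" case mirrors the incident pattern: a credential that is in constant use but has not been rotated in years is both valuable to an attacker and easy to overlook.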

Finally, Sentra’s behavior analytics (part of DDR) help surface early signs of misuse. Even if an attacker obtains valid OAuth tokens, their data access patterns, query behavior, or geography often diverge from the legitimate integration. By correlating anomalous activity with the sensitivity of the data being accessed, Sentra can detect exfiltration patterns in real time—something traditional tools simply aren’t designed to do.
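Behavioral baselining of this kind can be sketched with two simple signals per identity: objects it has never touched before, and a daily record volume far above its historical mean (a z-score test). This is a deliberately basic statistical stand-in, not Sentra's DDR model; the 3-standard-deviation threshold and the input shapes are assumptions.

```python
from statistics import mean, pstdev


def anomalies(history_objects: set[str], history_daily_volumes: list[int],
              today_objects: set[str], today_volume: int,
              z_threshold: float = 3.0) -> list[str]:
    """Compare one identity's activity today against its own baseline."""
    alerts = []
    new_objects = today_objects - history_objects
    if new_objects:
        alerts.append(f"new objects: {sorted(new_objects)}")
    mu = mean(history_daily_volumes)
    sigma = pstdev(history_daily_volumes)
    if sigma == 0:
        # A perfectly flat history: any increase is unusual.
        if today_volume > mu:
            alerts.append(f"volume {today_volume} above flat baseline {mu}")
    elif (today_volume - mu) / sigma > z_threshold:
        alerts.append(f"volume z-score {(today_volume - mu) / sigma:.1f}")
    return alerts


baseline_objects = {"Account", "Opportunity"}
baseline_volumes = [1000, 1200, 900, 1100, 1050, 950, 1000]
print(anomalies(baseline_objects, baseline_volumes, {"Account", "Contact", "Case"}, 250_000))
```

Both signals fire here: a connector that normally reads a thousand Account records a day suddenly pulls contacts and cases in bulk, which is the kind of divergence a stolen token tends to produce even though the token itself is valid.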

The 2026 Outlook: More Incidents Are Coming

The Gainsight/Salesforce incident is unlikely to be the last of its kind. The speed at which enterprises adopt SaaS integrations far exceeds the rate at which they assess the data exposure those integrations create. OAuth-based supply-chain attacks are growing quickly because they allow adversaries to compromise one provider and gain access to dozens or hundreds of downstream environments. Given the proliferation of partner ecosystems, machine identities, and unmonitored secrets, this attack vector will continue to scale.

Prediction:

Unless enterprises add data-centric SaaS visibility and identity-aware DSPM, we should expect three to five more incidents of similar magnitude before summer 2026.

Conclusion

The real lesson from the Gainsight/Salesforce breach is not to reduce reliance on third-party SaaS providers, since modern business would grind to a halt without them. The lesson is that enterprises must know where their sensitive data lives, understand exactly which identities and integrations can access it, and ensure those privileges are continuously validated. Sentra provides that visibility and contextual intelligence, making it possible to identify the risks that made this breach possible and to help prevent the next one.

<blogcta-big>

Securing Unstructured Data in Microsoft 365: The Case for Petabyte-Scale, AI-Driven Classification


The modern enterprise runs on collaboration and nothing powers that more than Microsoft 365. From Exchange Online and OneDrive to SharePoint, Teams, and Copilot workflows, M365 hosts a massive and ever-growing volume of unstructured content: documents, presentations, spreadsheets, image files, chats, attachments, and more.

Yet unstructured data is harder to govern. Unlike tidy database tables with defined schemas, unstructured repositories fill up with ambiguous content types, buried duplicates, and unused legacy files. It’s in these stacks that sensitive IP, model training data, or derivative work can quietly accumulate, and then leak.

Consider this: one recent study found that more than 81% of IT professionals report data-loss events in M365 environments. And to make matters worse, according to the International Data Corporation (IDC), 60% of organizations do not have a strategy for protecting their critical business data that resides in Microsoft 365.

Why Traditional Tools Struggle

- Built-in classification tools (e.g., M365’s native capabilities) often rely on pattern matching or simple keywords, and therefore struggle with accuracy, context, scale and derivative content.

- Many solutions only surface that a file exists and carries a type label - but stop short of mapping who or what can access it, its purpose, and what its downstream exposure might be.

- GenAI workflows now pump massive volumes of unstructured data into copilots, knowledge bases, training sets - creating new blast radii that legacy DLP or labeling tools weren’t designed to catch.

What a Modern Platform Must Deliver

- High-accuracy, petabyte-scale classification of unstructured data (so you know what you have, where it sits, and how sensitive it is). And it must keep pace with explosive data growth and do so cost efficiently.

- Unified Data Access Governance (DAG) - mapping identities (users, service principals, agents), permissions, implicit shares, federated/cloud-native paths across M365 and beyond.

- Data Detection & Response (DDR) - continuous monitoring of data movement, copies, derivative creation, AI agent interactions, and automated response/remediation.
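To see why mapping permissions matters beyond labels, here is a minimal sketch of resolving who can effectively open a file when access can come from direct grants, group membership, or sharing links. The data model (a permissions record, a group map, and link scopes named `organization` and `anyone`) is hypothetical and far simpler than M365's real permission model; it only illustrates the idea of computing effective access.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class FilePermissions:
    direct_users: set[str] = field(default_factory=set)
    groups: set[str] = field(default_factory=set)
    # Hypothetical link scopes: None, "organization", or "anyone".
    link_scope: Optional[str] = None


def effective_access(perms: FilePermissions,
                     group_members: dict[str, set[str]],
                     org_users: set[str]) -> tuple[set[str], bool]:
    """Return (internal principals who can open the file, externally exposed?)."""
    principals = set(perms.direct_users)
    for group in perms.groups:
        principals |= group_members.get(group, set())
    if perms.link_scope in ("organization", "anyone"):
        # Anyone in the org who obtains the link can open the file, so this
        # sketch counts every org principal, including service accounts and agents.
        principals |= org_users
    return principals, perms.link_scope == "anyone"


groups = {"finance": {"bob", "carol"}}
org = {"alice", "bob", "carol", "dave", "copilot-agent"}

contract = FilePermissions({"alice"}, {"finance"}, link_scope="anyone")
who, external = effective_access(contract, groups, org)
print(len(who), "internal principals, externally exposed:", external)
```

A file labeled "Confidential" that is granted to one user and one group looks contained until the sharing link is resolved; the effective audience, including AI agents, is what determines the actual exposure.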

How Sentra addresses this in M365

At Sentra, we’ve built a cloud-native data-security platform specifically to address this triad of capabilities - and we extend that deeply into M365 (OneDrive, SharePoint, Teams, Exchange Online) and other SaaS platforms.

- A newly announced AI Classifier for Unstructured Data accelerates and improves classification across M365’s unstructured repositories (see: Sentra launches breakthrough unstructured-data AI classification capabilities).

- Petabyte-scale processing: our architecture supports classification and monitoring of massive file estates without astronomical cost or time-to-value.

- Seamless support for M365 services: read/write access, ingestion, classification, access-graph correlation, detection of shadow/unmanaged copies across OneDrive and SharePoint—plus integration into our DAG and DDR layers (see our guide: How to Secure Regulated Data in Microsoft 365 + Copilot).

- Cost-efficient deployment: designed for high scale without breaking the budget or requiring massive manual effort.

The Bottom Line

In today’s cloud/AI era, saying “we discovered the PII in our M365 tenant” isn’t enough.

The real question is: Do I know who or what (user/agent/app) can access that content, what its business purpose is, and whether it’s already been copied or transformed into a risk vector?

If your solution can’t answer that, your unstructured data remains a silent, high-stakes liability and resolving concerns becomes a very costly, resource-draining burden. By embracing a platform that combines classification accuracy, petabyte-scale processing, unified DSPM + DAG + DDR, and deep M365 support, you move from “hope I’m secure” to “I know I’m secure.”

Want to see how it works in a real M365 setup? Check out our video or book a demo.

<blogcta-big>

How to Gain Visibility and Control in Petabyte-Scale Data Scanning


Every organization today is drowning in data - millions of assets spread across cloud platforms, on-premises systems, and an ever-expanding landscape of SaaS tools. Each asset carries value, but also risk. For security and compliance teams, the mandate is clear: sensitive data must be inventoried, managed and protected.

Scanning every asset for security and compliance is no longer optional; it’s the line between trust and exposure, between resilience and chaos.

Many data security tools promise to scan and classify sensitive information across environments. In practice, doing this effectively and at scale demands more than raw ‘brute force’ scanning power. It requires robust visibility and management capabilities: a cockpit view that lets teams monitor coverage, prioritize intelligently, and strike the right balance between scan speed, cost, and accuracy.

Why Scan Tracking Is Crucial

Scanning is not instantaneous. Depending on the size and complexity of your environment, it can take days, sometimes even weeks, to complete. Meanwhile, new data is constantly being created or modified, adding to the challenge.

Without clear visibility into the scanning process, organizations face several critical obstacles:

- Unclear progress: It’s often difficult to know what has already been scanned, what is currently in progress, and what remains pending. This lack of clarity creates blind spots that undermine confidence in coverage.

- Time estimation gaps: In large environments, it’s hard to know how long scans will take because so many factors come into play — the number of assets, their size, the type of data - structured, semi-structured, or unstructured, and how much scanner capacity is available. As a result, predicting when you’ll reach full coverage is tricky. This becomes especially stressful when scans need to be completed before a fixed deadline, like a compliance audit.

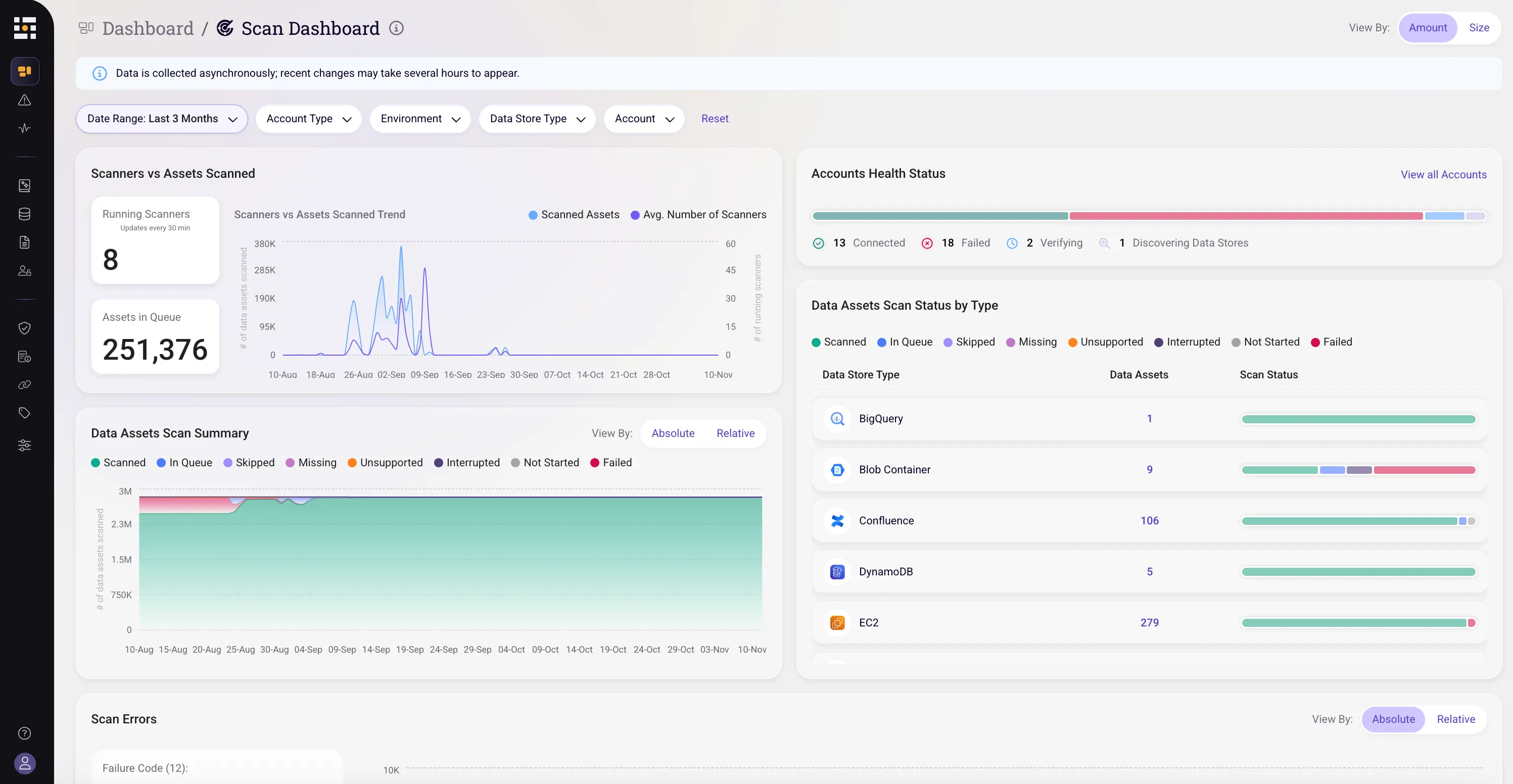

"With Sentra’s Scan Dashboard, we were able to quickly scale up our scanners to meet a tight audit deadline, finish on time, and then scale back down to save costs. The visibility and control it gave us made the whole process seamless”, said CISO of Large Retailer. - Poor prioritization: Not all environments or assets carry the same importance. Yet without visibility into scan status, teams struggle to balance historical scans of existing assets with the ongoing influx of newly created data, making it nearly impossible to prioritize effectively based on risk or business value.

Sentra’s End-to-End Scanning Workflow

Managing scans at petabyte scale is complex. Sentra streamlines the process with a workflow built for scale, clarity, and control that features:

1. Comprehensive Asset Discovery

Before scanning even begins, Sentra automatically discovers assets across cloud platforms, on-premises systems, and SaaS applications. This ensures teams have a complete, up-to-date inventory and visual map of their data landscape, so no environment or data store is overlooked.

Example: New S3 buckets, a freshly deployed BigQuery dataset, or a newly connected SharePoint site are automatically identified and added to the inventory.
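Keeping the inventory current comes down to comparing what each discovery pass finds against what is already known. A minimal sketch, assuming every data store is identified by a stable string ID (the IDs below are hypothetical and do not reflect Sentra’s internal naming):

```python
def diff_inventory(known, discovered):
    """Compare the stored inventory with the latest discovery pass."""
    known, discovered = set(known), set(discovered)
    return {
        "new": sorted(discovered - known),      # add to inventory, queue for scanning
        "removed": sorted(known - discovered),  # deleted or no longer reachable
        "unchanged": sorted(known & discovered),
    }


known = {"aws:s3:logs-bucket", "sharepoint:site/legal"}
discovered = {
    "aws:s3:logs-bucket",
    "aws:s3:new-exports-bucket",
    "gcp:bigquery:analytics_ds",
    "sharepoint:site/legal",
}
print(diff_inventory(known, discovered))
```

Tracking removals as well as additions keeps the inventory from accumulating stale entries that would otherwise distort coverage numbers.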

2. Configurable Scan Management

Administrators can fine-tune how scans are executed to meet their organization’s needs. With flexible configuration options, such as the number of scanners, sampling rates, and prioritization rules, teams can strike the right balance between scan speed, coverage, and cost control.

For instance, compliance-critical assets can be scanned at full depth immediately, while less critical environments can run at reduced sampling to save on compute consumption and costs.
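The trade-off between depth and cost can be made concrete with a small scan-plan calculation. The sketch below assumes hypothetical criticality tiers, each with its own sampling rate, and orders assets so compliance-critical ones are scanned first. The tier names and rates are illustrative only and do not reflect Sentra’s actual configuration schema.

```python
from dataclasses import dataclass

# Hypothetical policy: fraction of each asset's data to sample, by tier.
SAMPLING_RATE = {"compliance-critical": 1.0, "standard": 0.25, "low": 0.05}
# Lower number = scanned earlier.
PRIORITY = {"compliance-critical": 0, "standard": 1, "low": 2}


@dataclass
class Asset:
    name: str
    size_gb: float
    tier: str


def plan_scan(assets):
    """Order assets by tier (largest first within a tier) and compute GB to scan."""
    ordered = sorted(assets, key=lambda a: (PRIORITY[a.tier], -a.size_gb))
    return [(a.name, a.size_gb * SAMPLING_RATE[a.tier]) for a in ordered]


assets = [
    Asset("marketing-assets", 800.0, "low"),
    Asset("customer-db-backups", 200.0, "compliance-critical"),
    Asset("team-shares", 400.0, "standard"),
]
plan = plan_scan(assets)
for name, gb in plan:
    print(f"{name}: {gb:.1f} GB")
print("total:", sum(gb for _, gb in plan), "GB of", sum(a.size_gb for a in assets))
```

In this illustration the plan scans 340 GB of a 1,400 GB estate, with the compliance-critical backups scanned in full and first.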

3. Real-Time Scan Dashboard

Sentra’s unified Scan Dashboard provides a cockpit view into scanning operations, so teams always know where they stand. Key features include:

- Daily scan throughput correlated with the number of active scanners, helping teams understand efficiency and predict completion times.

- Coverage tracking that visualizes overall progress and highlights which assets remain unscanned.

- Decision-making tools that allow teams to dynamically adjust, whether by adding scanner capacity, changing sampling rates, or reordering priorities when new high-risk assets appear.
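Correlating throughput with scanner count supports a back-of-the-envelope completion estimate. A minimal sketch, assuming throughput scales linearly with the number of scanners (real scaling is rarely perfectly linear) and using hypothetical figures rather than measured Sentra throughput:

```python
import math


def days_to_complete(remaining_tb, tb_per_scanner_per_day, scanners):
    """Estimate days left, assuming throughput scales linearly with scanners."""
    daily_throughput = tb_per_scanner_per_day * scanners
    return remaining_tb / daily_throughput


def scanners_needed(remaining_tb, tb_per_scanner_per_day, days_available):
    """Smallest scanner count that finishes within the deadline."""
    return math.ceil(remaining_tb / (tb_per_scanner_per_day * days_available))


# Hypothetical: 1.2 PB left to scan, each scanner handles 2 TB/day.
remaining = 1200.0
per_scanner = 2.0
print(days_to_complete(remaining, per_scanner, scanners=20))       # 30.0
print(scanners_needed(remaining, per_scanner, days_available=10))  # 60
```

Run in reverse, the same arithmetic shows how far capacity can be scaled back once a deadline has passed.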

Handling Data Changes

The challenge doesn’t end once the initial scans are complete. Data is dynamic: new files are added daily, existing records are updated, and sensitive information shifts locations. Sentra’s activity feeds give teams the visibility they need to understand how their data landscape is evolving and adapt their data security strategies in real time.
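One way to act on a stream of changes is to rescan only what changed since each asset’s last scan. The sketch assumes a hypothetical feed of `(asset, modified_at)` events and a record of last-scan times; it illustrates incremental scanning in general, not the format of Sentra’s activity feeds.

```python
from datetime import datetime


def assets_to_rescan(events, last_scanned):
    """Return assets modified after their last scan (or never scanned)."""
    # Keep only the most recent change per asset.
    latest_change = {}
    for asset, modified_at in events:
        if asset not in latest_change or modified_at > latest_change[asset]:
            latest_change[asset] = modified_at
    return sorted(
        asset
        for asset, changed in latest_change.items()
        if asset not in last_scanned or changed > last_scanned[asset]
    )


last_scanned = {
    "s3://finance-reports": datetime(2025, 1, 10),
    "sharepoint:/sites/hr": datetime(2025, 1, 12),
}
events = [
    ("s3://finance-reports", datetime(2025, 1, 11)),       # changed after last scan
    ("sharepoint:/sites/hr", datetime(2025, 1, 9)),        # unchanged since last scan
    ("bigquery:analytics.events", datetime(2025, 1, 12)),  # never scanned
]
print(assets_to_rescan(events, last_scanned))
```

Skipping unchanged assets is what keeps continuous monitoring affordable once the initial full scan is done.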

Conclusion

Tracking scan status at scale is complex but critical to any data security strategy. Sentra provides an end-to-end view and unmatched scan control, helping organizations move from uncertainty to confidence with clear prediction of scan timelines, faster troubleshooting, audit-ready compliance, and smarter, cost-efficient decisions for securing data.


Best DSPM Tools: Top 9 Vendors Compared


Enhanced DSPM Adoption Is the Most Important Data Security Trend of 2026

Over the past few years, organizations have realized that traditional security tools can’t keep pace with how data moves and grows today. Exploding volumes of sensitive data now sprawl across multi-cloud environments, SaaS platforms, and AI systems, often without full visibility for the teams responsible for securing it. Unstructured data presents the greatest risk, representing over 80% of corporate data.

That’s why Data Security Posture Management (DSPM) has become a critical part of the modern security stack. DSPM tools help organizations automatically discover, classify, monitor, and protect sensitive data - no matter where it lives or travels.

But in 2026, the data security game is changing. Many DSPM tools can tell you what data you have, but that is no longer enough. Leading DSPM platforms are going beyond visibility, delivering real-time, AI-enhanced business context, automated remediation, and accurate, AI-aware protection that scales with your dynamic data.

AI-enhanced DSPM Capabilities in 2026

Not all DSPM tools are built the same. The top platforms share a few key traits that define the next generation of data security posture management:

Top DSPM Tools to Watch in 2026

Based on recent analyst coverage, market growth, and innovation across the industry, here are the top DSPM platforms to watch this year, each contributing to how data security is evolving.

1. Sentra

As a cloud-native DSPM platform, Sentra focuses on continuous data protection, not just visibility. It discovers and accurately classifies sensitive data in real time across all cloud environments, while automatically remediating risks through policy-driven automation.

What sets Sentra apart:

- Continuous, automated discovery and classification across your entire data estate - cloud, SaaS, and on-premises.

- Business-contextual insights that understand the purpose of data, accurately linking data, identity, and risk.

- Automatic learning to discern customer-specific data types and continuously improve labeling over time.

- Petabyte scaling and low compute consumption for 10X cost efficiency.

- Automated remediation workflows and integrations to fix issues instantly.

- Built-in coverage for data flowing through AI and SaaS ecosystems.

Ideal for: Security teams looking for a cloud-native DSPM platform built for scalability in the AI era with automation at its core.

2. BigID

A pioneer in data discovery and classification, BigID bridges DSPM and privacy governance, making it a good choice for compliance-heavy sectors.

Ideal for: Organizations prioritizing data privacy, governance, and audit readiness.

3. Prisma Cloud (Palo Alto Networks)

Prisma’s DSPM offering integrates closely with CSPM and CNAPP components, giving security teams a single pane of glass for infrastructure and data risk.

Ideal for: Enterprises with hybrid or multi-cloud infrastructures already using Palo Alto tools.

4. Microsoft Purview / Defender DSPM

Microsoft continues to invest heavily in DSPM through Purview, offering rich integration with Microsoft 365 and Azure ecosystems. Note: Sentra integrates with Microsoft Purview Information Protection (MPIP) labeling and DLP policies.

Ideal for: Microsoft-centric organizations seeking native data visibility and compliance automation.

5. Securiti.ai

Positioned as a “Data Command Center,” Securiti unifies DSPM, privacy, and governance. Its strengths lie in automation, compliance visibility, and SaaS coverage.

Ideal for: Enterprises looking for an all-in-one governance and DSPM solution.

6. Cyera

Cyera has gained attention for serving the SMB segment with its DSPM approach. It uses LLMs for data context, supplementing other classification methods, and provides integrations to IAM and other workflow tools.

Ideal for: Small/medium growing companies that need basic DSPM functionality.

7. Wiz

Wiz continues to lead in cloud security, having added DSPM capabilities to its CNAPP platform. It is known for deep multi-cloud visibility and infrastructure misconfiguration detection.

Ideal for: Enterprises running complex cloud environments looking for infrastructure vulnerability and misconfiguration management.

8. Varonis

Varonis remains a strong player for hybrid and on-prem data security, with deep expertise in permissions and access analytics and focus on SaaS/unstructured data.

Ideal for: Enterprises with legacy file systems or mixed cloud/on-prem architectures.

9. Netwrix

Netwrix’s platform incorporates DSPM-related features into its auditing and access control suite.

Ideal for: Mid-sized organizations seeking DSPM as part of a broader compliance solution.

Emerging DSPM Trends to Watch in 2026

- AI Data Security: As enterprises adopt GenAI, DSPM tools are evolving to secure data used in training and inference.

- Identity-Centric Risk: Understanding and controlling both human and machine identities is now central to data posture.

- Automation-Driven Security: Remediation workflows are becoming the differentiator between “good” and “great.”

- Market Consolidation: Expect to see CNAPP, legacy security, and cloud vendors acquiring DSPM startups to strengthen their coverage.

How to Choose the Right DSPM Tool

When evaluating a DSPM solution, align your choice with your data landscape and goals:

- Cloud-Native Company: Choose tools designed for cloud-first environments (such as Sentra, Securiti, and Wiz).

- Compliance Priority: Platforms like Sentra, BigID, or Securiti excel in privacy and governance.

- Microsoft-Heavy Stack: Purview and Sentra DSPM offer native integration.

- Hybrid Environment: Consider Varonis, Prisma Cloud, or Sentra for extended visibility.

- Enterprise Scalability: Evaluate deployment ease, petabyte scalability, cloud resource consumption, and scanning efficiency (Sentra excels here).

Pro Tip: Run a proof of concept (POC) across multiple environments to test scalability, accuracy, and operational cost-effectiveness before full deployment.
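Testing accuracy in a POC usually means comparing each tool’s classifications against a sample your team has labeled by hand. A minimal sketch of that comparison using standard precision and recall; the file names and labels are hypothetical.

```python
def precision_recall(predicted, actual):
    """Compute precision and recall for files flagged as sensitive.

    predicted: files the tool flagged as sensitive
    actual:    files a human reviewer confirmed as sensitive
    """
    predicted, actual = set(predicted), set(actual)
    true_pos = len(predicted & actual)
    precision = true_pos / len(predicted) if predicted else 0.0
    recall = true_pos / len(actual) if actual else 0.0
    return precision, recall


tool_flagged = {"a.csv", "b.docx", "c.pdf", "d.txt"}
human_labeled = {"a.csv", "b.docx", "e.xlsx"}
p, r = precision_recall(tool_flagged, human_labeled)
print(f"precision={p:.2f} recall={r:.2f}")
```

Low precision shows up as noisy alerts; low recall means sensitive data went undetected. Running the same labeled sample through every candidate keeps the numbers comparable.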

Final Thoughts: DSPM Is About Action

The best DSPM tools in 2026 share one core principle: they help organizations move from visibility to action.

At Sentra, we believe that the future of DSPM lies in continuous, automated data protection:

- Real-time discovery of sensitive data at scale

- Context-aware prioritization for business insight

- Automated remediation that reduces risk instantly

As data continues to power AI, analytics, and innovation, DSPM ensures that innovation never comes at the cost of security. See how Sentra helps leading enterprises protect data across multi-cloud and SaaS environments.


How SLMs (Small Language Models) Make Sentra’s AI Faster and More Accurate


The LLM Hype, and What’s Missing

Over the past few years, large language models (LLMs) have dominated the AI conversation. From writing essays to generating code, LLMs like GPT-4 and Claude have proven that massive models can produce human-like language and reasoning at scale.

But here's the catch: not every task needs a 70-billion-parameter model. Parameters are computationally expensive - they require both memory and processing time.
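A quick calculation shows why parameter count matters. Just holding a model’s weights in memory takes roughly parameters × bytes per parameter. The sketch below uses common numeric precisions and ignores activations, caches, and runtime overhead, so real requirements are higher. The 100-million-parameter model is a hypothetical small model for comparison, not a description of Sentra’s SLMs.

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params, precision="fp16"):
    """Memory for model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


for name, params in [("70B LLM", 70e9), ("100M small model", 100e6)]:
    print(name, {p: weight_memory_gb(params, p) for p in BYTES_PER_PARAM})
```

At fp16, the 70-billion-parameter model needs about 140 GB for its weights alone, compared with about 0.2 GB for the 100-million-parameter model.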

At Sentra, we discovered early on that the work our customers rely on, accurate and scalable classification of massive data flows, isn’t about writing essays or generating text. It’s about making decisions fast, reliably, and cost-effectively across dynamic, real-world data environments. While LLMs are excellent at solving general problems, using them for this narrow task creates a lot of unnecessary computational overhead.

That’s why we’ve shifted our focus toward Small Language Models (SLMs): compact, specialized models purpose-built for a single task, understanding and classifying data efficiently. By running hundreds of SLMs in parallel on regular CPUs, Sentra can deliver faster insights, stronger data privacy, and a dramatically lower total cost of AI-based classification that scales with our customers’ business, not their cloud bill.
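The pattern of many small specialist models working in parallel can be sketched with Python’s standard `concurrent.futures`. The “models” here are hypothetical keyword-based stand-ins so the example runs anywhere; in practice each would be a trained SLM. CPU-bound inference in pure Python would typically use `ProcessPoolExecutor` or a native inference runtime rather than the thread pool used here for simplicity.

```python
from concurrent.futures import ThreadPoolExecutor


# Hypothetical stand-ins for specialist models: each answers one narrow question.
def detect_topic(text):
    lowered = text.lower()
    if "invoice" in lowered:
        return ("topic", "invoice")
    if "resume" in lowered or "curriculum vitae" in lowered:
        return ("topic", "resume")
    return ("topic", "other")


def detect_credentials(text):
    return ("has_password", "password" in text.lower())


SPECIALISTS = [detect_topic, detect_credentials]


def classify(doc):
    """Run every specialist on one document and merge their answers."""
    return dict(model(doc) for model in SPECIALISTS)


def classify_all(docs, workers=8):
    """Fan documents out across a pool of workers; results keep input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(classify, docs))


docs = ["Invoice #42 for March", "Server password: hunter2", "Team lunch notes"]
for doc, labels in zip(docs, classify_all(docs)):
    print(doc, "->", labels)
```

Because each specialist answers a single narrow question, new detectors can be added to `SPECIALISTS` without retraining or reloading the others.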

What Is an SLM?

An SLM is a smaller, domain-specific version of a language model. Instead of trying to understand and generate any kind of text, an SLM is trained to excel at a particular task, such as identifying the topic of a document (what the document is about or what type of document it is), or detecting sensitive entities within documents, such as passwords, social security numbers, or other forms of PII.

In other words: If an LLM is a generalist, an SLM is a specialist. At Sentra, we use SLMs that are tuned and optimized for security data classification, allowing them to process high volumes of content with remarkable speed, consistency, and precision. These SLMs are based on standard open source models, but trained with data that was curated by Sentra, to achieve the level of accuracy that only Sentra can guarantee.

From LLMs to SLMs: A Strategic Evolution