Cloud Security Strategy: Key Elements, Principles, and Challenges

What is a Cloud Security Strategy?

During the initial phases of digital transformation, organizations may view cloud services as an extension of their traditional data centers. But to fully harness cloud security, there must be progression beyond this view.

A cloud security strategy is an extensive framework that outlines how an organization manages its dynamic, software-defined security ecosystem and protects its cloud-based assets. Security, in its essence, is about managing risk – addressing the probability and impact of attacks instead of eliminating them outright. This reality essentially positions security as a continuous endeavor rather than being a finite problem with a singular solution.

A cloud security strategy advocates for:

- Ensuring the cloud framework’s integrity: Involves implementing security controls as a foundational part of cloud service planning and operational processes. The aim is to ensure that security measures are a seamless part of the cloud environment, guarding every resource.

- Harnessing cloud capabilities for defense: Employing the cloud as a force multiplier to bolster overall security posture. This shift in strategy leverages the cloud's agility and advanced capabilities to enhance security mechanisms, particularly those natively integrated into the cloud infrastructure.

Why is a Cloud Security Strategy Important?

Some organizations misjudge the balance between productivity and security. They often learn the hard way that while innovation drives competitiveness, robust security preserves it. The absence of either can lead to diminished market presence or organizational failure. As such, a balanced focus on both fronts is paramount.

Customers are more likely to do business with organizations they consistently trust to protect proprietary data. When a single data breach or security incident can erode customer trust and damage an organization's reputation, the stakes are naturally high. A cloud security strategy can help organizations address these challenges by providing a framework for managing risk.

A well-crafted cloud security strategy will include the following:

- Risk assessment to identify and prioritize the organization's key security risks.

- Set of security controls to mitigate those risks.

- Process framework for monitoring and improving the security posture of the cloud environment over time.

Key Elements of a Cloud Security Strategy

Tactically, a cloud security strategy empowers organizations to navigate the complexities of shared responsibility models, where the burden of security is divided between the cloud provider and the client.

Key Challenges in Building a Cloud Security Strategy

When organizations shift from on-premises to cloud computing, the biggest stumbling block is their lack of expertise in dealing with a decentralized environment. Some consider agility and performance to be the super-features that led them to adopt the cloud. Anything that impacts the velocity of deployment is met with resistance. As a result, the challenge often lies in finding the sweet spot between achieving efficiency and administering robust security. But in reality, there are several factors that compound the complexity of this challenge.

Lack of Visibility

If your organization lacks insight into its cloud activity, it cannot accurately assess the associated risks. Lack of visibility also introduces multifaceted challenges. Initially, the difficulty is cataloging the active elements in your cloud. Subsequently, it hinders understanding of the data, operations, and interconnections of those systems.

Imagine manually checking each cloud service across different availability zones for each provider. You'd be inventorying virtual machines, surveying databases, and tracking user accounts. It's a complex task that can rapidly become unmanageable.

Most major cloud service providers (CSPs) offer monitoring services that make this work more manageable. But even with these tools, you mostly see the numbers (how many data stores, how many resources), not the substance within them or how they relate to one another. In reality, a production-grade observability stack depends on a mix of CSP tools, third-party services, and architecture blueprints to assess the security landscape.
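As a rough illustration of the cataloging step, the sketch below merges resource listings from several providers into one overview and surfaces assets that nobody owns. The `Resource` fields and the sample data are assumptions made for this example; in a real setup the records would come from each CSP's inventory tooling or a third-party service.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Resource:
    """One cloud asset. Field names are hypothetical, not a provider schema."""
    provider: str
    region: str
    kind: str            # e.g. "vm", "database", "bucket"
    name: str
    owner: Optional[str] = None


def summarize(resources):
    """Count assets per (provider, region, kind) for a single overview."""
    return Counter((r.provider, r.region, r.kind) for r in resources)


def unowned(resources):
    """Return assets nobody has claimed; these are typical blind spots."""
    return [r for r in resources if not r.owner]


# Sample data standing in for the output of real inventory calls.
inventory = [
    Resource("aws", "us-east-1", "vm", "web-1", owner="platform"),
    Resource("aws", "us-east-1", "vm", "web-2", owner="platform"),
    Resource("aws", "eu-west-1", "bucket", "logs-archive"),
    Resource("gcp", "europe-west1", "database", "orders-db", owner="payments"),
]

for key, count in sorted(summarize(inventory).items()):
    print(key, count)
print("unowned:", [r.name for r in unowned(inventory)])
```

Counts like these are only the "numbers" view described above. Understanding what each store contains and how assets relate still requires additional context.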

Human Errors

Surprisingly, the most significant cloud security threat originates from your own IT team's oversights. Gartner predicts that through 2025, 99% of cloud security failures will be the customer's fault, which in practice largely means human error.

One contributing factor is that the shift to the cloud demands specialized skills. Seasoned IT professionals who are well-versed in on-prem security may still mishandle cloud platforms. These lapses usually involve issues like misconfigured storage buckets, exposed network ports, or insecure use of accounts. Such mistakes, if unnoticed, offer attackers easy pathways to infiltrate cloud environments.

An organization will likely use a mix of service models: Infrastructure as a Service (IaaS) for foundational compute resources, Platform as a Service (PaaS) for middleware orchestration, and Software as a Service (SaaS) for on-demand applications. For each tier, manual security controls might entail crafting bespoke policies for every service. This method provides meticulous oversight, albeit with considerable demands on time and the ever-present risk of human error.

Misconfiguration

The OWASP Top 10 (2021) reports an average incidence rate of around 4.51% for security misconfiguration among the applications tested. The dynamism of cloud environments, where assets are constantly deployed and updated, exacerbates this risk.

While human errors are more about the skills gap and oversight, the root of misconfiguration often lies in the complexity of an environment, particularly when a deployment doesn’t follow best practices. Cloud setups are intricate, where each change or a newly deployed service can introduce the potential for error. And as cloud offerings evolve, so do the configuration parameters, subsequently increasing the likelihood of oversight.

Some argue that it's the cloud provider that ensures the security of the cloud. Yet, the shared responsibility model places a significant portion of configuration management on the user. When that division is unclear, it often leads to gaps in security posture.

Automated tools can help but have their own limitations. They require precise tuning to recognize the correct configurations for a given context. Without comprehensive visibility and understanding of the environment, these tools tend to miss critical misconfigurations.
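To make the tuning point concrete, here is a minimal sketch of an automated configuration check. The resource dictionaries, their keys, and the `allowed_public_ports` parameter are all assumptions for illustration; they do not mirror any provider's actual configuration format.

```python
def find_misconfigurations(resource, allowed_public_ports=frozenset({443})):
    """Return a list of human-readable findings for one resource dict."""
    findings = []
    if resource.get("type") == "bucket" and resource.get("public_read"):
        findings.append(f"{resource['name']}: bucket is publicly readable")
    if resource.get("type") == "firewall":
        for rule in resource.get("ingress", []):
            # 0.0.0.0/0 means the port is reachable from the whole internet.
            if rule["cidr"] == "0.0.0.0/0" and rule["port"] not in allowed_public_ports:
                findings.append(
                    f"{resource['name']}: port {rule['port']} open to the internet"
                )
    return findings


# Hypothetical resources, as a scanner might see them after export.
resources = [
    {"type": "bucket", "name": "customer-exports", "public_read": True},
    {"type": "bucket", "name": "static-site", "public_read": False},
    {"type": "firewall", "name": "app-fw", "ingress": [
        {"cidr": "0.0.0.0/0", "port": 443},
        {"cidr": "0.0.0.0/0", "port": 22},
        {"cidr": "10.0.0.0/8", "port": 5432},
    ]},
]

for res in resources:
    for finding in find_misconfigurations(res):
        print(finding)
```

The `allowed_public_ports` argument is where context enters: a bastion host might legitimately expose port 22, while the same rule on an application tier would be a finding. Without that context the check either misses issues or produces noise.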

Compliance with Regulatory Standards

When your cloud environment sprawls across jurisdictions, adherence to regulatory standards is naturally a complex affair. Each region comes with its mandates, and cloud services must align with them. Data protection laws like GDPR or HIPAA additionally demand strict handling and storage of sensitive information.

The key to compliance in the cloud is a thorough understanding of where data resides, how it is protected, and who has access to it. An equally thorough understanding of the shared responsibility model is crucial. While cloud providers ensure their infrastructure meets compliance standards, it's up to organizations to maintain data integrity, secure their applications, and verify third-party services for compliance.
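A data residency rule can be expressed as a simple check, sketched below. The `ALLOWED_REGIONS` policy, the classification labels, and the store records are hypothetical and are not legal guidance; actual residency requirements depend on the regulation and on your organization's interpretation of it.

```python
# Hypothetical policy: which regions each data classification may live in.
ALLOWED_REGIONS = {
    "eu-personal-data": {"eu-west-1", "eu-central-1"},
    "us-health-data": {"us-east-1", "us-west-2"},
}


def residency_violations(data_stores, policy=ALLOWED_REGIONS):
    """Return (store name, region) pairs that sit outside their allowed regions."""
    violations = []
    for store in data_stores:
        allowed = policy.get(store["classification"])
        # Stores with an unknown classification are skipped here; a stricter
        # policy could report them instead.
        if allowed is not None and store["region"] not in allowed:
            violations.append((store["name"], store["region"]))
    return violations


stores = [
    {"name": "crm-db", "classification": "eu-personal-data", "region": "eu-west-1"},
    {"name": "crm-replica", "classification": "eu-personal-data", "region": "us-east-1"},
    {"name": "claims-bucket", "classification": "us-health-data", "region": "us-west-2"},
]

print(residency_violations(stores))
```

A check like this only works if data stores are already classified, which is why discovery and classification come before compliance automation.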

Modern Cloud Security Strategy Principles

Because the cloud-native ecosystem is still an emerging discipline with a high degree of process variations, a successful security strategy calls for a nuanced approach. Implementing security should start with low-friction changes to workflows, the development processes, and the infrastructure that hosts the workload.

Here's how that can look in practice:

Establishing Comprehensive Visibility

Visibility is the foundational starting point. Total, accessible visibility across the cloud environment helps achieve a deeper understanding of your systems' interactions and behaviors by offering a clear mapping of how data moves and is processed.

Establish a model where teams can get up-to-date, easy-to-digest overviews of their cloud assets, understand their configuration, and recognize how data flows between them. Visibility also lays the foundation for traceability and observability. Modern performance analysis stacks build on visibility, which leads first to traceability (the ability to follow actions through your systems) and then to observability (the ability to gain insight from what your systems output).

Enabling Business Agility

The cloud is known for its agile nature that enables organizations to respond swiftly to market changes, demands, and opportunities. Yet, this very flexibility requires a security framework that is both robust and adaptable. Security measures must protect assets without hindering the speed and flexibility that give cloud-based businesses their edge.

To truly scale and enhance efficiency, your security strategy must blend the organization's technology, structure, and processes together. This ensures that the security framework supports fast-paced development cycles, maintains compliance, and fosters innovation without compromising on protection. In practice, this means integrating security into the development lifecycle from its initial stages, automating security processes where possible, and ensuring that security protocols can accommodate the rapid deployment of services.

Cross-Functional Coordination

A future-focused security strategy acknowledges the need for agility in both action and thought. A crucial aspect of a robust cloud security strategy is avoiding the pitfall where accountability for security risks is mistakenly assigned to security teams rather than to the business owners of the assets. This misplacement arises from the misconception that security is a static technical hurdle rather than a dynamic, ongoing risk.

Security cannot be a siloed function; instead, every stakeholder has a part to play in securing cloud assets. The success of your security strategy is largely influenced by distinguishing between healthy and unhealthy friction within DevOps and IT workflows. The strategic approach blends security seamlessly into cloud operations, challenging teams to preemptively consider potential threats during design and to rectify vulnerabilities early in the development process. This constructive friction strengthens systems against attacks, much like stress tests to inspect the resilience of a system.

However, the practicality of security in a dynamic cloud setting demands more than stringent measures; it requires smart, adaptive protocols. Excessive safeguards that result in frequent false positives or overcomplicate risk assessments can impact the rapid development cycles characteristic of cloud environments. To counteract this, maintaining the health of relationships within and across teams is essential.

Ongoing and Continuous Improvement

Adopting agile security practices involves shifting from a perfectionist mindset to embracing a baseline of “minimum viable security.” This baseline evolves through continuous incremental improvements, matching the agility of cloud development. In a production-grade environment, this relies on a data-driven approach where user experiences, system performance, and security incidents shape the evolution of the platform.

The commitment to continuous improvement means that no system is ever "finished." Security is seen as an ongoing process, where DevSecOps practices can ensure that every code commit is evaluated against security benchmarks, allowing for immediate correction and learning from any identified issues.

To truly embody continuous improvement though, organizations must foster a culture that encourages experimentation and learning from failures. Blameless postmortems following security incidents, for example, can uncover root causes without fear of retribution, ensuring that each issue is a learning opportunity.

Preventing Security Vulnerabilities Early

A forward-thinking security strategy focuses on preempting risks. The 'shift left' concept evolved to solve this problem by integrating security practices at the very beginning and throughout the application development lifecycle. Practically, this approach embeds security tools and checks into the pipeline where the code is written, tested, and deployed.
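One common shift-left check is scanning changes for hardcoded secrets before they are merged. The sketch below shows the idea with three illustrative patterns. The rule names and the sample text are made up for this example, and the access key pattern follows the commonly cited "AKIA plus 16 characters" shape rather than any official specification.

```python
import re

# Illustrative patterns only; real scanners ship far larger rule sets.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key": re.compile(r"-----BEGIN (?:RSA |EC )?PRIVATE KEY-----"),
    "hardcoded_password": re.compile(r"(?i)\bpassword\s*=\s*['\"][^'\"]+['\"]"),
}


def scan_text(text):
    """Return (line number, rule name) for every suspected secret."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                hits.append((lineno, rule))
    return hits


# Fake values used purely to exercise the patterns.
sample = '''region = "us-east-1"
password = "hunter2"
key_id = "AKIAABCDEFGHIJKLMNOP"
'''

hits = scan_text(sample)
for lineno, rule in hits:
    print(f"line {lineno}: possible {rule}")
# A CI step could fail the build whenever hits is non-empty.
```

Running a check like this in a pre-commit hook or an early pipeline stage catches the problem while the fix is still a one-line change.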

Start with outlining a concise strategy document that defines your shift-left approach. It needs a clear vision, designated roles, milestones, and clear metrics. For large corporations, this could be a complex yet indispensable task—requiring thorough mapping of software development across different teams and possibly external vendors.

The aim here is to chart out the lifecycle of software from development to deployment, identifying the people involved, the processes followed, and the technologies used. A successful approach to early vulnerability prevention also includes a comprehensive strategy for supply chain risk management. This involves scrutinizing open-source components for vulnerabilities and establishing a robust process for regularly updating dependencies.
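The dependency scrutiny described above can be approximated by comparing pinned versions against a list of known-vulnerable releases. In this sketch the `ADVISORIES` feed and the package names are invented placeholders; a real pipeline would pull advisories from a vulnerability database or a dedicated scanning tool.

```python
# Hypothetical advisory feed: package name -> versions known to be vulnerable.
ADVISORIES = {
    "examplelib": {"1.0.0", "1.0.1"},
    "samplepkg": {"2.3.0"},
}


def parse_requirements(text):
    """Parse 'name==version' lines, ignoring blanks and comments."""
    pins = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if "==" in line:
            name, version = line.split("==", 1)
            pins[name.strip().lower()] = version.strip()
    return pins


def vulnerable_pins(pins, advisories=ADVISORIES):
    """Return sorted (name, version) pairs that match a known advisory."""
    return sorted(
        (name, version)
        for name, version in pins.items()
        if version in advisories.get(name, set())
    )


requirements = """
examplelib==1.0.1   # pinned long ago
samplepkg==2.4.0
otherpkg==0.9.0
"""

print(vulnerable_pins(parse_requirements(requirements)))
```

Exact-version matching keeps the example short. Real advisories usually describe version ranges, so production tooling needs proper version comparison.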

How to Create a Robust Cloud Security Strategy

Before developing a security strategy, assess the inherent risks your organization may be susceptible to. The findings of the risk assessment should be treated as the baseline to develop a security architecture that aligns with your cloud environment's business goals and risk tolerance.

In most cases, a cloud security architecture should include the following combination of technical, administrative and physical controls for comprehensive security:

Access and Authentication Controls

The foundational principle of cloud security is to ensure that only authorized users can access your environment. The emphasis should be on strong, adaptive authentication mechanisms that can respond to varying risk levels.

Build an authentication framework that is non-static. It should scale with risk, assessing context, user behavior, and threat intelligence. This adaptability ensures that security is not a rigid gate but a responsive, intelligent gateway that can be configured to suit the complexity of different cloud environments and sophisticated threat actors.

Actionable Steps

- Enforce passwordless or multi-factor authentication (MFA) mechanisms to support a dynamic security ethos.

- Adjust permissions dynamically based on contextual data.

- Integrate real-time risk assessments that actively shape and direct access control measures.

- Employ AI mechanisms for behavioral analytics and adaptive challenges.

- Develop a trust-based security perimeter centered around user identity.
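The steps above can be sketched as a small risk-scoring function that decides whether to allow a sign-in, step up to MFA, or deny it. The signals in `LoginContext`, the weights, and the thresholds are arbitrary placeholders chosen for illustration, not recommended values.

```python
from dataclasses import dataclass


@dataclass
class LoginContext:
    """Signals available at sign-in. All fields are illustrative."""
    known_device: bool
    usual_country: bool
    failed_attempts: int
    accessing_sensitive_data: bool


def risk_score(ctx):
    """Add up simple weights; the weights are arbitrary placeholders."""
    score = 0
    score += 0 if ctx.known_device else 30
    score += 0 if ctx.usual_country else 30
    score += min(ctx.failed_attempts, 5) * 5
    score += 15 if ctx.accessing_sensitive_data else 0
    return score


def access_decision(ctx):
    """Map the score to an action: allow, step up to MFA, or deny."""
    score = risk_score(ctx)
    if score >= 70:
        return "deny"
    if score >= 30:
        return "require_mfa"
    return "allow"


print(access_decision(LoginContext(True, True, 0, False)))    # low risk
print(access_decision(LoginContext(False, True, 1, True)))    # medium risk
print(access_decision(LoginContext(False, False, 4, True)))   # high risk
```

A production system would likely replace the fixed weights with behavioral models and threat intelligence, but the shape stays the same: context in, graduated decision out.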

Identify and Classify Sensitive Data

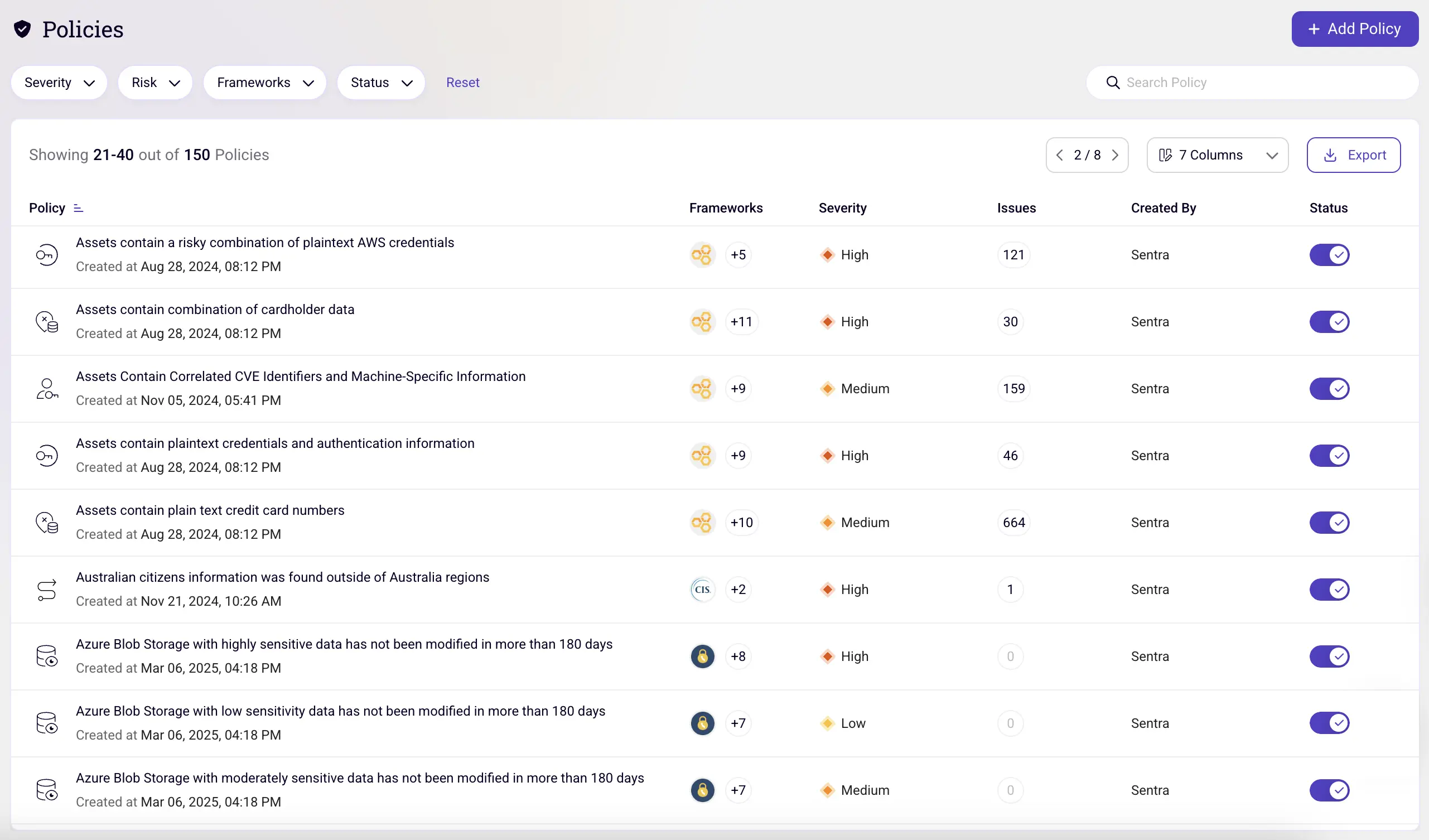

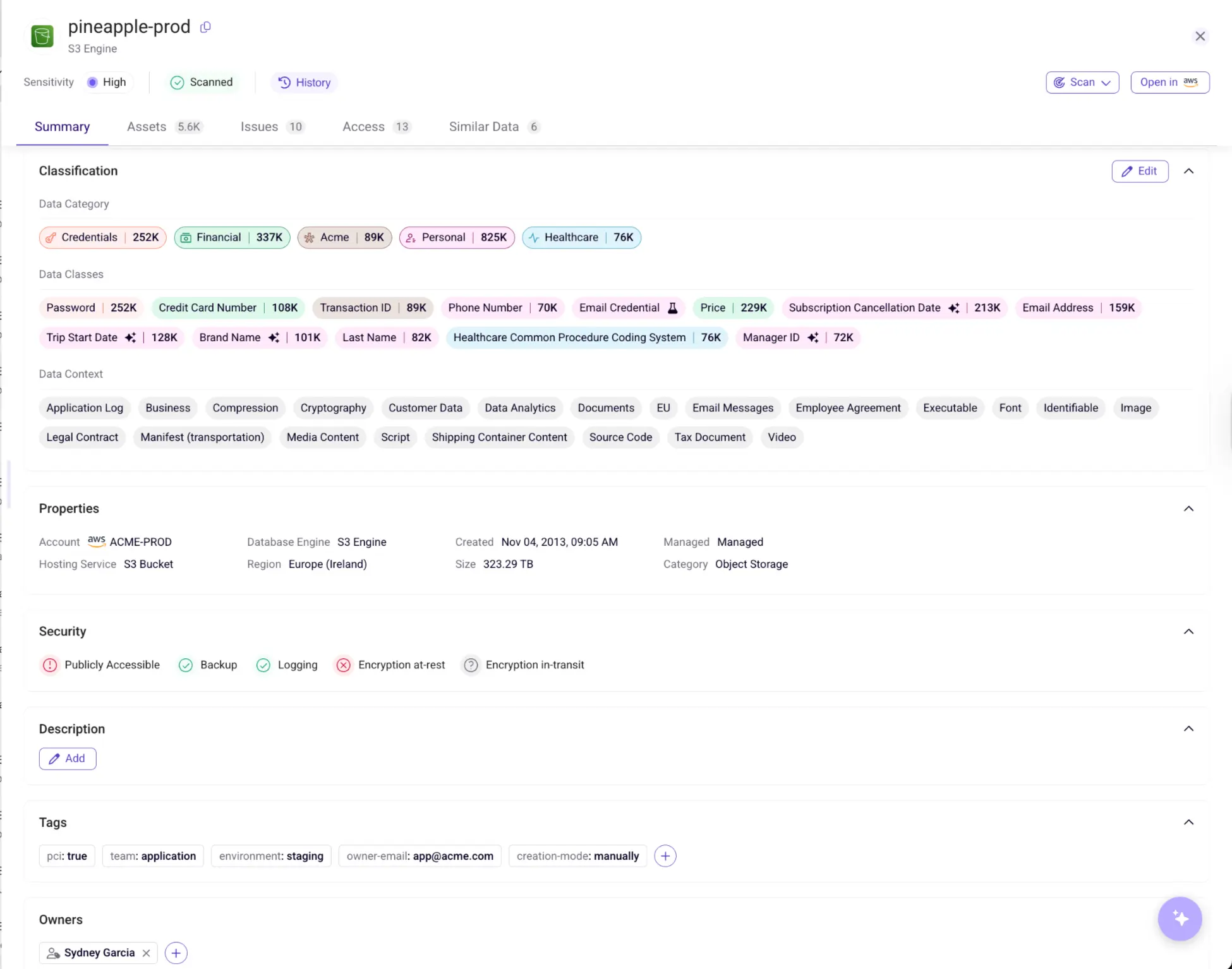

Classification starts with discovery: locate sensitive cloud data first. Implement enterprise-grade data discovery tools and advanced scanning algorithms that integrate with cloud storage services to detect sensitive data points.

Once identified, the data should be tagged with metadata that reflects its sensitivity level, typically by using automated classification frameworks capable of processing large datasets at scale. These systems should be configured to recognize the requirements of data privacy regulations (like GDPR and HIPAA) as well as proprietary sensitivity levels.

Actionable Steps

- Establish a data governance framework agile enough to adapt to the cloud's fluid nature.

- Create an indexed inventory of data assets, which is essential for real-time risk assessment and for implementing fine-grained access controls.

- Ensure the classification system is backed by policies that dynamically adjust controls based on the data’s changing context and content.
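As a simplified picture of discovery followed by tagging, the sketch below detects two kinds of sensitive values with regular expressions and attaches a sensitivity label as metadata. The detectors, the `SENSITIVITY` mapping, and the label names are assumptions for this example; production classifiers rely on validated, much richer rules.

```python
import re

# Illustrative detectors; production tools use validated, locale-aware rules.
DETECTORS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

# Hypothetical mapping from detected data type to a sensitivity label.
SENSITIVITY = {"email": "internal", "us_ssn": "restricted"}
RANK = {"public": 0, "internal": 1, "restricted": 2}


def classify(text):
    """Return detected types and the highest sensitivity label as metadata tags."""
    found = sorted(name for name, rx in DETECTORS.items() if rx.search(text))
    label = max((SENSITIVITY[f] for f in found), key=RANK.get, default="public")
    return {"detected": found, "sensitivity": label}


print(classify("Quarterly roadmap draft"))
print(classify("Contact jane@example.com for access"))
print(classify("SSN 123-45-6789, email jane@example.com"))
```

The returned dictionary is the kind of metadata that can feed an indexed inventory and drive access policies that change as the content changes.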

Monitoring and Auditing

Define a monitoring strategy that delivers service visibility across all layers and dimensions. A recommended practice is to balance in-depth telemetry collection with a broad, end-to-end view and east-west monitoring that encompasses all aspects of service health.

Treat each dimension as crucial—depth ensures you're catching the right data, breadth ensures you're seeing the whole picture, and the east-west focus ensures you're always tuned into availability, performance, security, and continuity. This tri-dimensional strategy also allows for continuous compliance checks against industry standards, while helping with automated remediation actions in cases of deviations.

Actionable Steps

- Implement deep-dive telemetry to gather detailed data on transactions, system performance, and potential security events.

- Utilize specialized monitoring agents that span across the stack, providing insights into the OS, applications, and services.

- Ensure full visibility by correlating events across networks, servers, databases, and application performance.

- Deploy network traffic analysis to track lateral movement within the cloud, which is indicative of potential security threats.
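For the last step, one simple heuristic is to flag hosts that suddenly talk to several internal peers they have never contacted before. The sketch below assumes flow records are already reduced to (source, destination) pairs; the host names and the `threshold` value are hypothetical.

```python
from collections import defaultdict


def new_internal_peers(baseline_flows, current_flows, threshold=3):
    """Flag hosts that contact many internal peers not seen in the baseline.

    Each flow is a (source, destination) pair of internal host names.
    """
    known = defaultdict(set)
    for src, dst in baseline_flows:
        known[src].add(dst)

    unseen = defaultdict(set)
    for src, dst in current_flows:
        if dst not in known[src]:
            unseen[src].add(dst)

    # A burst of never-before-seen destinations can indicate lateral movement.
    return {src: sorted(dsts) for src, dsts in unseen.items() if len(dsts) >= threshold}


baseline = [("web-1", "api-1"), ("api-1", "db-1"), ("batch-1", "db-1")]
current = [
    ("web-1", "api-1"),
    ("web-1", "db-1"), ("web-1", "batch-1"), ("web-1", "cache-1"),
    ("api-1", "db-1"), ("api-1", "cache-1"),
]

print(new_internal_peers(baseline, current))
```

This is a heuristic, not a detection guarantee. Legitimate deployments also create new connections, so findings like these are best correlated with other telemetry before anyone acts on them.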

Data Encryption and Tokenization

Construct a comprehensive approach that embeds security within the data itself. This strategy ensures data remains indecipherable and useless to unauthorized entities, both at rest and in transit.

When encrypting data at rest, algorithms like AES-256 ensure that should the physical security controls fail, the data remains worthless to unauthorized users. For data in transit, TLS secures the channels over which data travels to prevent interception and leaks.

Tokenization takes a different approach by swapping out sensitive data with unique symbols (also known as tokens) to keep the real data secure. Tokens can safely move through systems and networks without revealing what they stand for.

Actionable Steps

- Embrace strong encryption for data at rest to render it inaccessible to intruders. Implement industry-standard algorithms such as AES-256 for storage and database encryption.

- Mandate TLS to safeguard data in transit, reducing exposure during data movement across the cloud ecosystem.

- Adopt tokenization to substitute sensitive data elements with non-sensitive tokens. This renders the data non-exploitable in its tokenized form.

- Isolate the tokenization system, maintaining the token mappings in a highly restricted environment detached from the operational cloud services.
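The tokenization steps above can be sketched with a small in-memory vault. `TokenVault` is a hypothetical stand-in for an isolated tokenization service; a real one would persist its mappings in a hardened, access-controlled store rather than in process memory.

```python
import secrets


class TokenVault:
    """In-memory stand-in for an isolated tokenization service."""

    def __init__(self):
        self._token_to_value = {}
        self._value_to_token = {}

    def tokenize(self, value):
        """Return a random token; the same value always maps to the same token."""
        if value in self._value_to_token:
            return self._value_to_token[value]
        token = "tok_" + secrets.token_hex(16)  # random, not derived from value
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token):
        """Look up the original value; only the vault can do this."""
        return self._token_to_value[token]


vault = TokenVault()
card = "4111 1111 1111 1111"   # well-known test card number, not real data
token = vault.tokenize(card)
print(token)                    # safe to store or pass downstream
print(vault.detokenize(token) == card)
```

Because the token is random rather than computed from the card number, it reveals nothing without the vault's mapping. That is why the mapping store needs to be isolated from operational cloud services.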

Incident Response and Disaster Recovery

Modern disaster recovery (DR) strategies are typically centered around intelligent, automated, and geographically diverse backups. With that in mind, design your infrastructure in a way that anticipates failure, with planning focused on rapid failback.

Planning for the unknown essentially means preparing for all outage permutations. Classify and prepare for the broader impact of outages, which encompass security, connectivity, and access.

Define your recovery time objective (RTO) and recovery point objective (RPO) based on data volatility. For critical, frequently modified data, aim for a low RPO and adjust RTO to the shortest feasible downtime.

Actionable Steps

- Implement smart backups that are automated, redundant, and cross-zone.

- Develop incident response protocols specific to the cloud. Keep these dynamic while testing them frequently.

- Diligently choose between active-active or active-passive configurations to balance expense and complexity.

- Focus on quick isolation and recovery by using the cloud's flexibility to your advantage.
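To tie RPO and RTO to something measurable, the sketch below checks backup timestamps against an RPO and an outage against an RTO. The function names, timestamps, and objectives are illustrative assumptions. The underlying idea is that the gap between consecutive backups bounds the worst-case data loss.

```python
from datetime import datetime, timedelta


def rpo_breaches(backup_times, rpo):
    """Return (earlier, later) backup pairs whose gap exceeds the RPO."""
    ordered = sorted(backup_times)
    return [(a, b) for a, b in zip(ordered, ordered[1:]) if b - a > rpo]


def meets_rto(outage_start, service_restored, rto):
    """True if the service came back within the recovery time objective."""
    return service_restored - outage_start <= rto


backups = [
    datetime(2024, 1, 1, 0, 0),
    datetime(2024, 1, 1, 0, 15),
    datetime(2024, 1, 1, 1, 0),   # 45-minute gap before this backup
    datetime(2024, 1, 1, 1, 15),
]

print(rpo_breaches(backups, rpo=timedelta(minutes=15)))
print(meets_rto(datetime(2024, 1, 1, 2, 0), datetime(2024, 1, 1, 2, 40),
                rto=timedelta(hours=1)))
```

Running checks like these against backup logs and recovery drills is one way to verify that stated objectives match what the infrastructure actually delivers.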

Conclusion

Organizations must discard the misconception that what worked within the confines of traditional data centers will suffice in the cloud. Sticking to traditional on-premises security solutions and focusing solely on perimeter defense is irrelevant in the cloud arena. The traditional model—where data was a static entity within an organization’s stronghold—is now also obsolete.

Like earlier shifts in computing, the modern IT landscape demands fresh approaches and agile thinking to neutralize cloud-centric threats. The challenge is to reimagine cloud data security from the ground up, shifting focus from infrastructure to the data itself.

Sentra's innovative data-centric approach, which focuses on Data Security Posture Management (DSPM), emphasizes the importance of protecting sensitive data in all its forms. This ensures the security of data whether at rest, in motion, or even during transitions across platforms.

Book a demo to explore how Sentra's solutions can transform your approach to your enterprise's cloud security strategy.
