AWS Security Groups: Best Practices, EC2, & More

What are AWS Security Groups?

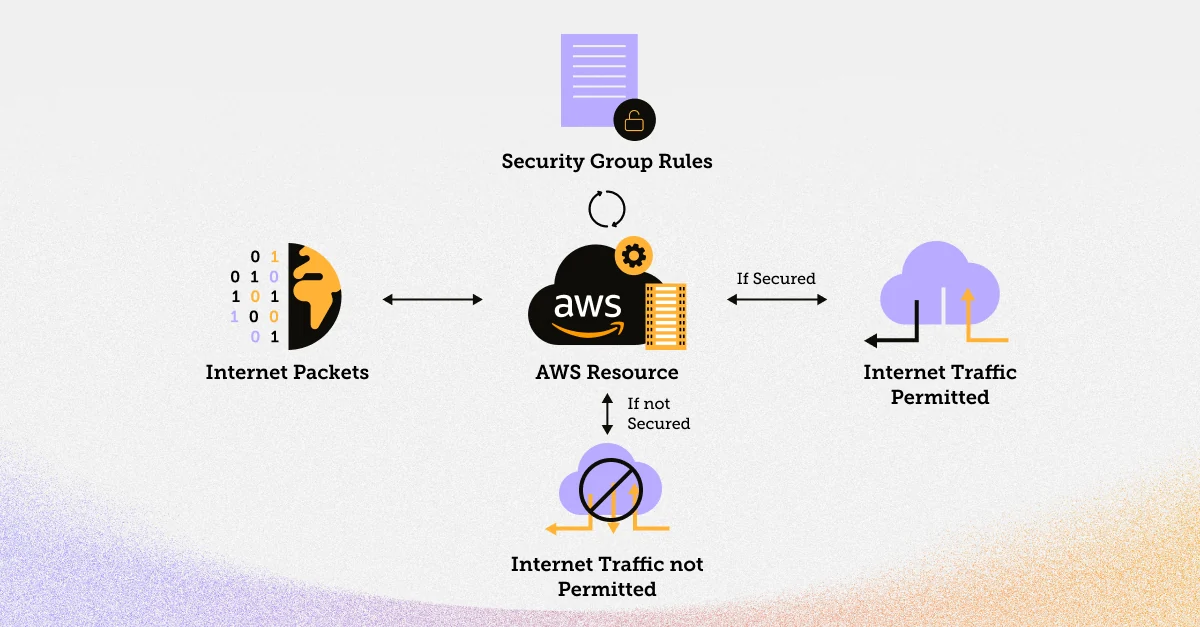

AWS Security Groups are a vital component of AWS's network security and cloud data security. They act as a virtual firewall that controls inbound and outbound traffic to and from AWS resources. Each AWS resource, such as Amazon Elastic Compute Cloud (EC2) instances or Relational Database Service (RDS) instances, can be associated with one or more security groups.

Security groups operate at the instance level rather than the subnet level: their rules specify what traffic is allowed to reach or leave the associated resources. Rules can be defined for both incoming and outgoing traffic, providing a granular way to manage access to your AWS resources.

How Do AWS Security Groups Work?

To understand how AWS Security Groups work, think of them as gatekeepers for inbound and outbound network traffic. These gatekeepers rely on a set of rules that you define to decide whether traffic is permitted; anything the rules do not allow is denied.

Here's a simplified breakdown of the process:

Inbound Traffic: When an incoming packet arrives at an AWS resource, AWS evaluates the rules defined in the associated security group. If the packet matches any of the rules allowing the traffic, it is permitted; otherwise, it is denied.

Outbound Traffic: Outbound traffic from an AWS resource is also controlled by the security group's rules. It follows the same principle: traffic is allowed or denied based on the rules defined for outbound traffic.

Security groups are stateful, which means that if you allow inbound traffic from a specific IP address, the corresponding outbound response traffic is automatically allowed. This simplifies rule management and ensures that related traffic is not blocked.
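To see the inbound and outbound rule sets that this evaluation uses, the rules of a group can be listed with the AWS CLI. The sketch below assumes the CLI is installed and configured; the function name show_sg_rules is only an illustrative helper, and the group ID is passed as an argument.

```shell
# Print the inbound and outbound rule lists of one security group as JSON.
# $1 is a security group ID (for example a placeholder like sg-0123456789abcdef0).
show_sg_rules() {
  aws ec2 describe-security-groups \
    --group-ids "$1" \
    --query 'SecurityGroups[0].{Inbound:IpPermissions,Outbound:IpPermissionsEgress}' \
    --output json
}

# Usage (placeholder ID): show_sg_rules sg-0123456789abcdef0
```

Because the groups are stateful, response traffic will not appear as a separate rule in either list.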

Types of Security Groups in AWS

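There are two types of AWS Security Groups:

- EC2-Classic Security Groups: Used with instances on the legacy EC2-Classic platform, which AWS has since retired.

- VPC Security Groups: Used with resources launched inside a Virtual Private Cloud (VPC).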

For this guide, we will focus on VPC Security Groups as they are more versatile and widely used.

How to Use Multiple Security Groups in AWS

In AWS, you can associate multiple security groups with a single resource. When multiple security groups are associated with an instance, AWS combines their rules into one effective rule set. The rules are evaluated as follows:

- Union: Rules from different security groups are merged. If any security group allows the traffic, it is permitted.

- Allow Rules Only: Security group rules can only allow traffic. There are no deny rules, so one group can never override what another group allows. To block specific traffic explicitly, use a Network ACL.

- Default Deny: If a packet doesn't match any rule, it is denied by default.
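As a rough sketch of attaching several groups to one existing instance, the AWS CLI's modify-instance-attribute command accepts a list of group IDs. The helper name set_instance_security_groups and all IDs are hypothetical; note that this call replaces the instance's current set of groups rather than appending to it.

```shell
# Replace the security groups attached to an instance with the given set.
# $1 is the instance ID; every remaining argument is a security group ID.
set_instance_security_groups() {
  instance_id=$1
  shift
  aws ec2 modify-instance-attribute --instance-id "$instance_id" --groups "$@"
}

# Usage (placeholder IDs): set_instance_security_groups i-0abc sg-web sg-admin
```

After the call, traffic is permitted if any of the listed groups allows it.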

Let's explore how to create, manage, and configure security groups in AWS.

Security Groups and Network ACLs

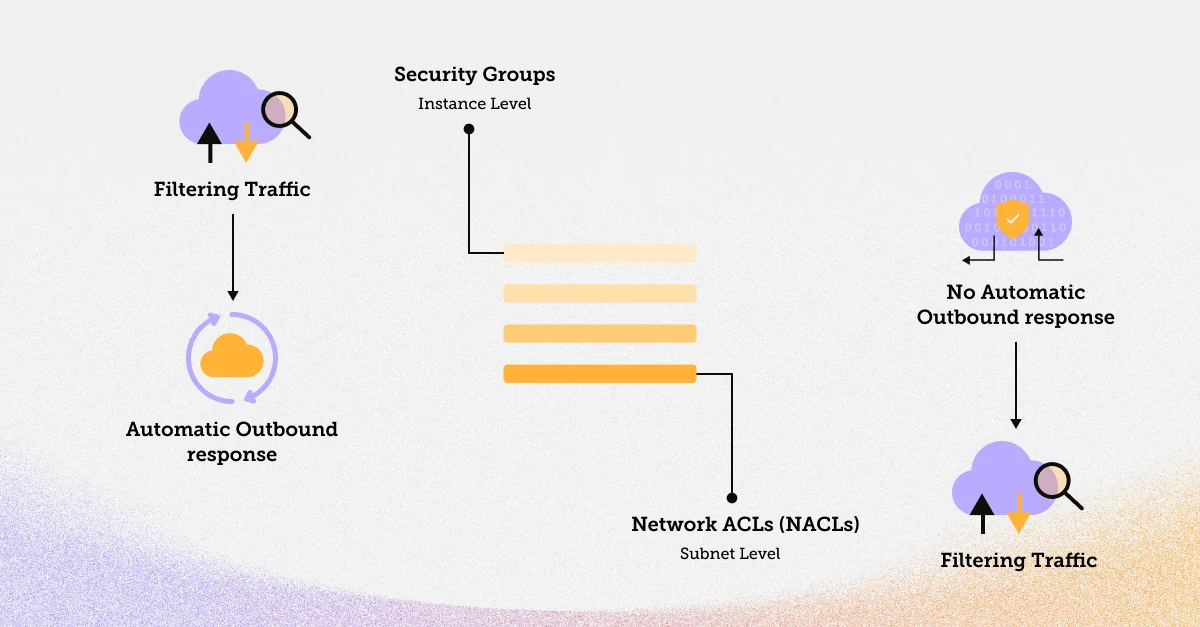

Before diving into security group creation, it's essential to understand the difference between security groups and Network Access Control Lists (NACLs). While both are used to control inbound and outbound traffic, they operate at different levels.

Security Groups: These operate at the instance level, filtering traffic to and from the resources (e.g., EC2 instances). They are stateful, which means that if you allow incoming traffic from a specific IP, outbound response traffic is automatically allowed.

Network ACLs (NACLs): These operate at the subnet level and act as stateless traffic filters. NACLs define rules for all resources within a subnet, support both allow and deny rules, and do not automatically allow response traffic.

For the most granular control over traffic, use security groups for instance-level security and NACLs for subnet-level security.
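The practical effect of a stateless filter is that both directions need a rule. The following is a minimal sketch, assuming an existing NACL and a client CIDR passed as arguments; the helper name, the rule number 100, and the 1024-65535 ephemeral port range are illustrative choices, not values from this article.

```shell
# Allow inbound HTTPS on a network ACL and, because NACLs are stateless,
# also allow the outbound replies on the ephemeral port range.
# $1 is the NACL ID, $2 is the client CIDR block. Protocol 6 is TCP.
allow_https_through_nacl() {
  nacl_id=$1
  cidr=$2
  aws ec2 create-network-acl-entry --network-acl-id "$nacl_id" --ingress \
    --rule-number 100 --protocol 6 --port-range From=443,To=443 \
    --cidr-block "$cidr" --rule-action allow &&
  aws ec2 create-network-acl-entry --network-acl-id "$nacl_id" --egress \
    --rule-number 100 --protocol 6 --port-range From=1024,To=65535 \
    --cidr-block "$cidr" --rule-action allow
}

# Usage (placeholder values): allow_https_through_nacl acl-0abc 203.0.113.0/24
```

With a security group, only the first of these two rules would be needed.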

AWS Security Groups Outbound Rules

AWS Security Groups are defined by a set of rules that specify which traffic is allowed; any traffic that matches no rule is denied. Each rule consists of the following components:

- Type: The protocol type (e.g., TCP, UDP, ICMP) to which the rule applies.

- Port Range: The range of ports to which the rule applies.

- Source/Destination: The IP range or security group that is allowed to access the resource (for inbound rules) or that the resource is allowed to reach (for outbound rules).

- Action: Always allow. Security group rules cannot deny traffic; anything that no rule allows is implicitly denied.
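These components map directly onto the arguments of an outbound rule in the AWS CLI. The sketch below is an assumption-laden example: the helper name allow_outbound_https is hypothetical, and the group ID and destination CIDR are supplied by the caller.

```shell
# Add an outbound rule that allows HTTPS (TCP 443) to one destination range.
# $1 is the security group ID, $2 is the destination CIDR block.
allow_outbound_https() {
  aws ec2 authorize-security-group-egress --group-id "$1" \
    --ip-permissions "IpProtocol=tcp,FromPort=443,ToPort=443,IpRanges=[{CidrIp=$2}]"
}

# Usage (placeholder values): allow_outbound_https sg-0abc 10.0.0.0/16
```

A newly created VPC security group already allows all outbound traffic by default, so a rule like this only narrows access once that default rule has been removed.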

Now, let's look at how to create a security group in AWS.

Creating a Security Group in AWS

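To create a security group in AWS (through the console), follow these steps:

- Navigate to the EC2 Dashboard in the AWS Management Console and choose "Security Groups" in the navigation pane.

- Click the "Create Security Group" button.

- Enter a name and description, and select the VPC the group belongs to.

- Add any inbound and outbound rules you need.

- Click "Create Security Group."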

Your security group is now created and ready to be associated with AWS resources.

Below, we'll demonstrate how to create a security group using the AWS CLI.
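The original command is not reproduced here, so the following is a sketch of what such a call typically looks like. The wrapper function create_security_group, the --vpc-id argument, and the --query/--output options (used to print only the new group's ID) are assumptions added for illustration.

```shell
# Create a security group and print its ID.
# $1 is the group name, $2 is the description, $3 is the VPC ID.
create_security_group() {
  aws ec2 create-security-group \
    --group-name "$1" \
    --description "$2" \
    --vpc-id "$3" \
    --query 'GroupId' --output text
}

# Usage (placeholder values):
#   create_security_group my-web-sg "Web tier security group" vpc-0abc
```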

In the above command:

--group-name specifies the name of your security group.

--description provides a brief description of the security group.

After executing this command, AWS will return the security group's unique identifier, which is used to reference the security group in subsequent commands.

Adding a Rule to a Security Group

Once your security group is created, you can easily add, edit, or remove rules. To add a new rule to an existing security group through the console, follow these steps:

- Select the security group you want to modify in the EC2 Dashboard.

- In the "Inbound Rules" or "Outbound Rules" tab, click the "Edit Inbound Rules" or "Edit Outbound Rules" button.

- Click the "Add Rule" button.

- Define the rule with the appropriate type, port range, and source/destination.

- Click "Save Rules."

To create a Security Group, you can also use the create-security-group command, specifying a name and description. After creating the Security Group, you can add rules to it using the authorize-security-group-ingress and authorize-security-group-egress commands. The code snippet below adds an inbound rule to allow SSH traffic from a specific IP address range.
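A minimal sketch of such an inbound rule follows. The helper name allow_ssh_from is hypothetical, and the group ID and CIDR range are placeholders supplied by the caller (203.0.113.0/24 is a documentation-only range).

```shell
# Allow inbound SSH (TCP port 22) from one IP address range.
# $1 is the security group ID, $2 is the source CIDR block.
allow_ssh_from() {
  aws ec2 authorize-security-group-ingress \
    --group-id "$1" \
    --protocol tcp \
    --port 22 \
    --cidr "$2"
}

# Usage (placeholder values): allow_ssh_from sg-0abc 203.0.113.0/24
```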

Assigning a Security Group to an EC2 Instance

To secure your EC2 instances using security groups through the console, follow these steps:

- Navigate to the EC2 Dashboard in the AWS Management Console.

- Select the EC2 instance to which you want to assign a security group.

- Click the "Actions" button, choose "Networking," and then click "Change Security Groups."

- In the "Assign Security Groups" dialog, select the desired security group(s) and click "Save."

Your EC2 instance is now associated with the selected security group(s), and its inbound and outbound traffic is governed by the rules defined in those groups.
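Security groups can also be set at launch time from the AWS CLI. The sketch below assumes an existing AMI, subnet, and security group; the helper name and every ID are placeholders, not values from this article.

```shell
# Launch one EC2 instance with a security group attached from the start.
# $1 = AMI ID, $2 = instance type, $3 = subnet ID, $4 = security group ID.
launch_with_security_group() {
  aws ec2 run-instances \
    --image-id "$1" \
    --instance-type "$2" \
    --subnet-id "$3" \
    --security-group-ids "$4" \
    --count 1
}

# Usage (placeholder values):
#   launch_with_security_group ami-0abc t3.micro subnet-0abc sg-0abc
```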

When launching an EC2 instance, you can specify the Security Groups to associate with it. In the example above, we associate the instance with a Security Group using the --security-group-ids flag.

Deleting a Security Group

To delete a security group via the AWS Management Console, follow these steps:

- In the EC2 Dashboard, select the security group you wish to delete.

- Check for associated instances and disassociate them, if necessary.

- Click the "Actions" button, and choose "Delete Security Group."

- Confirm the deletion when prompted.

- Receive confirmation of the security group's removal.

To delete a Security Group through the AWS CLI, use the delete-security-group command and specify the Security Group's ID.
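As a short sketch (the wrapper name and the ID are placeholders):

```shell
# Delete a security group by ID.
# $1 is the security group ID.
delete_security_group() {
  aws ec2 delete-security-group --group-id "$1"
}

# Usage (placeholder ID): delete_security_group sg-0abc
```

The call is rejected with a dependency error while the group is still attached to a resource or referenced by a rule in another group, which is why the disassociation step above comes first.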

AWS Security Groups Best Practices

Here are some additional best practices to keep in mind when working with AWS Security Groups:

Enable Tracking and Alerting

One best practice is to enable tracking and alerting for changes made to your Security Groups. AWS provides a service called AWS Config, which records configuration changes to your AWS resources, including Security Groups. By setting up AWS Config, you can receive notifications when changes occur, helping you detect and respond to any unauthorized modifications quickly.
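Once AWS Config is recording security groups, the change history of a single group can be pulled from the CLI. This is a sketch under that assumption; the helper name sg_config_history is hypothetical and the --query expression simply picks two fields of each recorded configuration item.

```shell
# List when AWS Config captured a change to a security group and its status.
# $1 is the security group ID. Requires AWS Config recording to be enabled.
sg_config_history() {
  aws configservice get-resource-config-history \
    --resource-type AWS::EC2::SecurityGroup \
    --resource-id "$1" \
    --query 'configurationItems[].[configurationItemCaptureTime,configurationItemStatus]' \
    --output text
}

# Usage (placeholder ID): sg_config_history sg-0abc
```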

Delete Unused Security Groups

Over time, you may end up with unused or redundant Security Groups in your AWS environment. It's essential to regularly review your Security Groups and delete any that are no longer needed. This reduces the complexity of your security policies and minimizes the risk of accidental misconfigurations.
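One way to find candidates for deletion is to compare all group IDs in a region against the IDs attached to any network interface. The sketch below assumes that "unused" means "attached to no network interface"; it does not check whether a group is referenced by rules in other groups, and the default group of a VPC cannot be deleted even if it appears in the output. The function name is illustrative.

```shell
# Print IDs of security groups that are not attached to any network interface.
list_unused_security_groups() {
  # All group IDs in the current region, one per line.
  all=$(aws ec2 describe-security-groups \
    --query 'SecurityGroups[].GroupId' --output text | tr '\t' '\n' | sort -u)
  # Group IDs attached to at least one network interface, one per line.
  used=$(aws ec2 describe-network-interfaces \
    --query 'NetworkInterfaces[].Groups[].GroupId' --output text | tr '\t' '\n' | sort -u)
  # Tag each line with its origin, then print the "all" IDs never seen as "used".
  {
    printf '%s\n' "$used" | sed 's/^/U /'
    printf '%s\n' "$all" | sed 's/^/A /'
  } | awk '$1 == "U" { u[$2] = 1 } $1 == "A" && $2 != "" && !u[$2] { print $2 }'
}

# Usage: list_unused_security_groups
```

Review the output manually before deleting anything.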

Avoid Incoming Traffic Through 0.0.0.0/0

One common mistake in Security Group configurations is allowing incoming traffic from '0.0.0.0/0,' which essentially opens up your resources to the entire internet. It's best to avoid this practice unless you have a specific use case that requires it. Instead, restrict incoming traffic to only the IP addresses or IP ranges necessary for your applications.
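To audit for this, the CLI can filter groups by the CIDR of their inbound rules. A small sketch follows; the helper name is hypothetical, and the filter matches any inbound rule open to 0.0.0.0/0 regardless of port, so intentionally public ports such as 443 will show up too.

```shell
# List the ID and name of every security group that has at least one
# inbound rule open to 0.0.0.0/0.
find_world_open_groups() {
  aws ec2 describe-security-groups \
    --filters Name=ip-permission.cidr,Values=0.0.0.0/0 \
    --query 'SecurityGroups[].[GroupId,GroupName]' \
    --output text
}

# Usage: find_world_open_groups
```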

Use Descriptive Rule Names

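Security Group rules do not have names of their own, but each rule accepts an optional description. When creating rules, fill in a description that makes it clear why the rule exists, and give the Security Group itself a meaningful name. This simplifies rule management and auditing.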
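From the CLI, a description can be attached to a rule when it is created. The following sketch assumes a description without single quotes or commas, since it is embedded in the CLI's shorthand syntax; the helper name and values are placeholders.

```shell
# Allow SSH from one range and record why the rule exists.
# $1 = security group ID, $2 = source CIDR block, $3 = rule description.
allow_ssh_with_description() {
  aws ec2 authorize-security-group-ingress --group-id "$1" \
    --ip-permissions "IpProtocol=tcp,FromPort=22,ToPort=22,IpRanges=[{CidrIp=$2,Description='$3'}]"
}

# Usage (placeholder values):
#   allow_ssh_with_description sg-0abc 203.0.113.0/24 "SSH from office VPN"
```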

Implement Least Privilege

Follow the principle of least privilege by allowing only the minimum required access to your resources. Avoid overly permissive rules.

Regularly Review and Update Rules

Your security requirements may change over time. Regularly review and update your Security Group rules to adapt to evolving security needs.

Avoid Using Security Group Rules as the Only Layer of Defense

Security Groups are a crucial part of your defense, but they should not be your only layer of security. Combine them with other security measures, such as NACLs and web application firewalls, for a comprehensive security strategy.

Leverage AWS Identity and Access Management (IAM)

Use AWS IAM to control access to AWS services and resources. IAM roles and policies can provide fine-grained control over who can modify Security Groups and other AWS resources.

Implement Network Segmentation

Use different Security Groups for different tiers of your application, such as web servers, application servers, and databases. This helps in implementing network segmentation and ensuring that resources only communicate as necessary.
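Tiers can be chained by using one security group as the source of a rule in another, so that, for example, only application servers can reach the database port. This is a sketch with hypothetical names; the group IDs and the port are supplied by the caller.

```shell
# Allow one tier's security group to reach another tier on a single TCP port.
# $1 = target (receiving) group ID, $2 = source group ID, $3 = TCP port.
allow_tier_to_tier() {
  aws ec2 authorize-security-group-ingress \
    --group-id "$1" \
    --protocol tcp \
    --port "$3" \
    --source-group "$2"
}

# Usage (placeholder IDs): let the app tier reach the database tier on 3306.
#   allow_tier_to_tier sg-db sg-app 3306
```

Referencing a group instead of an IP range keeps the rule valid as instances in the source tier are added or replaced.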

Regularly Audit and Monitor

Set up auditing and monitoring tools to detect and respond to security incidents promptly. AWS provides services like Amazon CloudWatch and AWS CloudTrail for this purpose.
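As one example of such an audit, CloudTrail's event history can be searched for API calls that changed security group rules. The sketch below is an assumption about how you might query it: the helper name is hypothetical, the event name is passed in (for instance AuthorizeSecurityGroupIngress), and the limit of 20 items is arbitrary.

```shell
# Show recent CloudTrail events for one API call name,
# printing the time, user, and event name of each.
# $1 is the event name, e.g. AuthorizeSecurityGroupIngress.
recent_sg_events() {
  aws cloudtrail lookup-events \
    --lookup-attributes "AttributeKey=EventName,AttributeValue=$1" \
    --max-items 20 \
    --query 'Events[].[EventTime,Username,EventName]' \
    --output text
}

# Usage: recent_sg_events AuthorizeSecurityGroupIngress
```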

Conclusion

Securing your cloud environment is paramount when using AWS, and Security Groups play a vital role in achieving this goal. By understanding how Security Groups work, creating and managing rules, and following best practices, you can enhance the security of your AWS resources. Remember to regularly review and update your security group configurations to adapt to changing security requirements and maintain a robust defense against potential threats. With the right approach to AWS Security Groups, you can confidently embrace the benefits of cloud computing while ensuring the safety and integrity of your applications and data.
