Ron Reiter

Discover Ron’s expertise, shaped by over 20 years of hands-on tech and leadership experience in cybersecurity, cloud, big data, and machine learning. As a serial entrepreneur and seed investor, Ron has contributed to the success of several startups, including Axonius, Firefly, Guardio, Talon Cyber Security, and Lightricks, after founding a company acquired by Oracle.

Ron's Data Security Posts

How the Tea App Got Blindsided on Data Security

A Women‑First Safety Tool - and a Very Public Breach

Tea is billed as a “women‑only” community where users can swap tips, background‑check potential dates, and set red or green flags. In late July 2025 the app rocketed to No. 1 in Apple’s free‑apps chart, boasting roughly four million users and a 900 k‑person wait‑list.

On 25 July 2025 a post on 4chan revealed that anyone could download files from an open Google Firebase Storage bucket holding verification selfies and ID photos. Technology reporters quickly confirmed that the bucket had no authentication or even listing restrictions.

What Was Exposed?

About 72,000 images were taken. Roughly 13,000 were verification selfies, some of which included driver's license or passport photos; the rest - about 59,000 - were images from posts, comments, and direct messages from more than two years ago. No phone numbers or email addresses were included, but the IDs and face photos are now mirrored on torrent sites, according to public reports.

Tea’s Official Response

On 27 July Tea posted the following notice to its Instagram account:

We discovered unauthorized access to an archived data system. If you signed up for Tea after February 2024, all your data is secure.

This archived system stored about 72,000 user‑submitted images – including approximately 13,000 selfies and selfies that include photo identification submitted during account verification. These photos can in no way be linked to posts within Tea.

Additionally, 59,000 images publicly viewable in the app from posts, comments, and direct messages from over two years ago were accessed. This data was stored to meet law‑enforcement standards around cyberbullying prevention.

We’ve acted fast and we’re working with trusted cyber‑security experts. We’re taking every step to protect this community – now and always.

(Full statement: instagram.com/theteapartygirls)

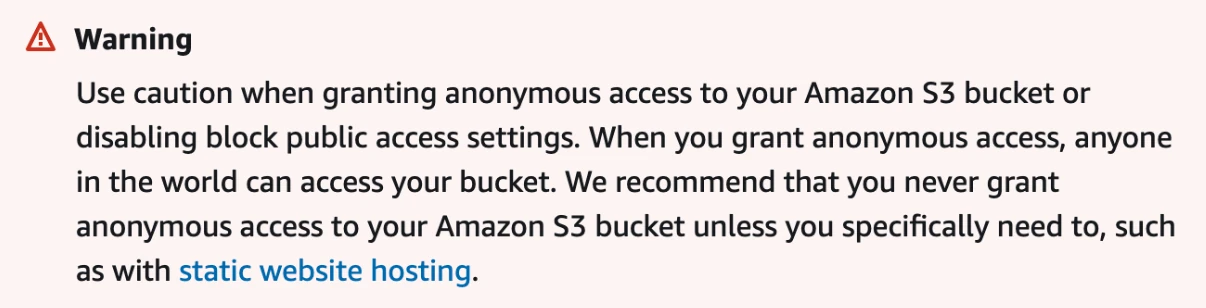

How Did This Happen?

At the heart of the breach was a single, deceptively simple mistake: the Firebase bucket that stored user images had been left wide open to the internet and even allowed directory‑listing. Whoever set it up apparently assumed that the object paths were obscure enough to stay hidden, but obscurity is never security. Once one curious 4chan user stumbled on the bucket, it took only minutes to write a script that walked the entire directory tree and downloaded everything. The files were zipped, uploaded to torrent trackers, and instantly became impossible to contain. In other words, a configuration left on its insecure default setting turned a women‑safety tool into a privacy disaster.

What Developers and Security Teams Can Learn

For engineering teams, the lesson is straightforward: always start from “private” and add access intentionally. Google Cloud Storage supports Signed URLs and Firebase Auth rules precisely so you can serve content without throwing the doors wide open; using those controls should be the norm, not the exception. Meanwhile, security leaders need to accept that misconfigurations are inevitable and build continuous monitoring around them.
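The "start from private" rule can be checked mechanically. The sketch below is an illustration only, not Tea's or Google's tooling. It assumes the bucket's Cloud Storage IAM policy has already been exported as a dictionary with a `bindings` list, and the helper name `public_bindings` and the sample policy are hypothetical. Firebase Security Rules are a separate layer that this sketch does not inspect.

```python
# The two IAM principals that open a bucket to anyone on the internet.
PUBLIC_PRINCIPALS = {"allUsers", "allAuthenticatedUsers"}


def public_bindings(policy: dict) -> list:
    """Return (role, member) pairs that grant access to a public principal."""
    findings = []
    for binding in policy.get("bindings", []):
        for member in binding.get("members", []):
            if member in PUBLIC_PRINCIPALS:
                findings.append((binding.get("role", ""), member))
    return findings


# Hypothetical exported policy, for illustration only.
example_policy = {
    "bindings": [
        {"role": "roles/storage.objectViewer", "members": ["allUsers"]},
        {
            "role": "roles/storage.objectAdmin",
            "members": ["serviceAccount:app@example.iam.gserviceaccount.com"],
        },
    ]
}

for role, member in public_bindings(example_policy):
    print(f"PUBLIC: {member} holds {role}")
```

A check like this could run in CI or on a schedule, so a public grant is caught when it is introduced rather than when a stranger finds it.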

Modern Data Security Posture Management (DSPM) platforms watch for sensitive data, like face photos and ID cards, showing up in publicly readable locations and alert the moment they do. Finally, remember that forgotten backups or “archive” buckets often outlive their creators’ attention; schedule regular audits so yesterday’s quick fix doesn’t become tomorrow’s headline.
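The core idea, correlating what a location holds with how exposed it is, can be sketched in a few lines. This is a simplified illustration and not how any particular DSPM product works. The `StorageLocation` type, the class labels, and the sample inventory are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StorageLocation:
    name: str
    is_public: bool
    data_classes: frozenset  # labels produced by some upstream classifier


# Hypothetical labels treated as sensitive in this sketch.
SENSITIVE = {"face_photo", "government_id"}


def exposure_alerts(locations):
    """Return (name, sensitive labels) for every public location holding sensitive data."""
    alerts = []
    for loc in locations:
        exposed = SENSITIVE & loc.data_classes
        if loc.is_public and exposed:
            alerts.append((loc.name, sorted(exposed)))
    return alerts


inventory = [
    StorageLocation("archive-images", True, frozenset({"face_photo", "government_id"})),
    StorageLocation("marketing-assets", True, frozenset({"logo"})),
    StorageLocation("verification-v2", False, frozenset({"government_id"})),
]

for name, labels in exposure_alerts(inventory):
    print(f"ALERT: {name} is public and contains {', '.join(labels)}")
```

Only the first location triggers an alert: the second is public but harmless, and the third is sensitive but private.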

How Sentra Would Have Caught This

Had Tea’s infrastructure been monitored by a DSPM solution like Sentra, the open bucket would have triggered an alert long before a stranger found it. Sentra continuously inventories every storage location in your cloud accounts, classifies the data inside so it knows those JPEGs contain faces and government IDs, and correlates that sensitivity with each bucket’s exposure. The moment a bucket flips to public‑read - or worse, gains listing permissions - Sentra raises a high‑severity alert or can even automate a rollback of the risky setting. In short, it spots the danger during development or staging, before the first user uploads a selfie, let alone before a leak hits 4chan. And, in case of a breach (perhaps by an inadvertent insider), Sentra monitors data accesses and movement and can alert when unusual activity occurs.

The Bottom Line

One unchecked permission wiped out the core promise of an app built to keep women safe. This wasn’t some sophisticated breach, it was a default setting left in place, a public bucket no one thought to lock down. A fix that would’ve taken seconds ended up compromising thousands of IDs and faces, now mirrored across the internet.

Security isn’t just about good intentions. Least-privilege storage, signed URLs, automated classification, and regular audits aren’t extras - they’re the baseline. If you’re handling sensitive data and not doing these things, you’re gambling with trust. Eventually, someone will notice. And they won’t be the only ones downloading.

CVE-2025-53770: A Wake-Up Call for Every SharePoint Customer

A vulnerability like this doesn’t just compromise infrastructure, it compromises trust. When attackers gain unauthenticated access to SharePoint, they’re not just landing on a server. They’re landing on contracts, financials, customer records, and source code - the very data that defines your business.

The latest zero-day targeting Microsoft SharePoint is a prime example. It’s not only critical in severity - it’s being actively exploited in the wild, giving threat actors a direct path to your most sensitive data.

Here’s what we know so far.

What Happened in the SharePoint Zero-Day Attack?

On July 20, 2025, CISA confirmed that attackers are actively exploiting CVE-2025-53770, a remote-code-execution (RCE) zero-day that affects on-premises Microsoft SharePoint servers.

The flaw is unauthenticated and rated CVSS 9.8, letting threat actors run arbitrary code and access every file on the server - no credentials required.

Security researchers have tied the exploits to the “ToolShell” attack chain, which steals SharePoint machine keys and forges trusted ViewState payloads, making lateral movement and persistence dangerously easy.

Microsoft has issued temporary guidance (enabling AMSI, deploying Defender AV, or isolating servers) while it rushes a full patch. Meanwhile, CISA has added CVE-2025-53770 to its Known Exploited Vulnerabilities (KEV) catalog and urges immediate mitigations.

Why Exploitation Is Alarmingly Easy

Attackers don’t need stolen credentials, phishing emails, or sophisticated malware. A typical adversary can move from a list of targets to full SharePoint server control in four quick moves:

- Harvest likely targets in bulk: Public scanners like Censys and Shodan, along with certificate transparency logs, reveal thousands of company domains exposing SharePoint over HTTPS. A few basic queries surface sharepoint. subdomains or endpoints responding with the SharePoint logo or X-SharePointHealthScore header.

- Check for a SharePoint host: If a domain like sharepoint.example.com shows the classic SharePoint sign-in page, it’s likely running ASP.NET and listening on TCP 443, indicating a viable target.

- Probe the vulnerable endpoint: A simple GET request to /_layouts/15/ToolPane.aspx?DisplayMode=Edit should return HTTP 200 OK (instead of redirecting to login) on unpatched servers. This confirms exposure to the ToolShell exploit chain.

- Send one unauthenticated POST: The vulnerability lies in how SharePoint deserializes __VIEWSTATE data. With a single forged POST request, the attacker gains full RCE: no login, no MFA, no further interaction.

That’s it. From scan to shell can take under five minutes, which is why CISA urged admins to disconnect public-facing servers until patched.
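From the defender's side, the same endpoint is a useful thing to look for in web server logs. The sketch below is a minimal triage helper, not a complete detection. It assumes a simplified, hypothetical log format of `timestamp method path status` per line (real IIS logs order their fields differently), and the sample lines are made up for illustration.

```python
# Endpoint named in the steps above, lowercased for case-insensitive matching.
SUSPECT_PATH = "/_layouts/15/toolpane.aspx"


def suspicious_requests(log_lines):
    """Return (timestamp, method, status) for every request to the ToolPane endpoint."""
    hits = []
    for line in log_lines:
        parts = line.split()
        if len(parts) < 4:
            continue  # skip malformed or empty lines
        timestamp, method, path, status = parts[:4]
        if path.lower().startswith(SUSPECT_PATH):
            hits.append((timestamp, method, status))
    return hits


# Made-up sample lines in the assumed format.
sample = [
    "2025-07-19T08:01:12Z GET /sites/home/default.aspx 200",
    "2025-07-19T08:02:40Z GET /_layouts/15/ToolPane.aspx?DisplayMode=Edit 200",
    "2025-07-19T08:02:41Z POST /_layouts/15/ToolPane.aspx?DisplayMode=Edit 200",
]

for hit in suspicious_requests(sample):
    print(hit)
```

A hit is a prompt for investigation rather than proof of compromise, since legitimate editing sessions can touch the same page after authentication.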

Why Data Security Leaders Should Care

SharePoint is where contracts, customer records, and board decks live. An RCE on the platform is a direct path to your crown jewel data:

- Unbounded blast radius: Compromised machine keys let attackers impersonate any user and exfiltrate sensitive files at scale.

- Shadow exposure: Even if you patch tomorrow, every document the attacker touched today is already outside your control.

- Compliance risk: GDPR, HIPAA, SOX, and new AI-safety rules all require provable evidence of what data was accessed and when.

While vulnerability scanners stop at “patch fast,” data security teams need more visibility into what was exposed, how sensitive it was, and how to contain the fallout. That’s exactly what Sentra’s Data Security Posture Management (DSPM) platform delivers.
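Answering "what was exposed" comes down to intersecting access records with a time window and a sensitivity label. The following is a rough sketch under stated assumptions: the event fields (`path`, `sensitive`, `time`), the sample events, and the window dates are all hypothetical and do not represent the actual exploit window or any vendor's data model.

```python
from datetime import datetime


def accessed_in_window(events, start, end):
    """Return sorted paths of sensitive files touched between start and end (inclusive)."""
    return sorted({
        event["path"]
        for event in events
        if event["sensitive"]
        and start <= datetime.fromisoformat(event["time"]) <= end
    })


# Made-up access events for illustration.
events = [
    {"path": "/contracts/msa.docx", "sensitive": True, "time": "2025-07-19T09:30:00"},
    {"path": "/marketing/logo.png", "sensitive": False, "time": "2025-07-19T09:45:00"},
    {"path": "/hr/payroll.xlsx", "sensitive": True, "time": "2025-07-22T14:00:00"},
]

window_start = datetime(2025, 7, 18)
window_end = datetime(2025, 7, 21)
print(accessed_in_window(events, window_start, window_end))
```

The output of a query like this is what feeds incident response and breach notification: a concrete list of sensitive files, rather than "everything on the server."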

How Sentra DSPM Neutralizes the Impact of CVE-2025-53770

- Continuous data discovery & classification: Sentra’s agentless scanner pinpoints every sensitive file - PII, PHI, intellectual-property, even AI model weights - across on-prem SharePoint, SharePoint Online, Teams, and OneDrive. No blind spots.

- Posture-driven risk mapping: Sentra pinpoints sensitive data sitting on exploitable servers, open to the public, or granted excessive permissions, then automatically routes actionable alerts into your security team’s existing workflow platform.

- Real-time threat detection: Sentra’s Data Detection and Response (DDR) instantly flags unusual access patterns to sensitive data, enabling your team to intervene before risk turns into breach.

- Blast-radius analysis: Sentra shows which regulated data could have been accessed during the exploit window - crucial for incident response and breach notifications.

- Automated workflows: Sentra integrates with Defender, Microsoft Purview, Splunk, CrowdStrike, and all leading SOARs to quarantine docs, rotate machine keys, or trigger legal hold—no manual steps required.

- Attacker-resilience scoring: Executive dashboards translate SharePoint misconfigurations into dollar-value risk reduction and compliance posture—perfect for board updates after high-profile CVEs.

What This Means for Your Security Team

CVE-2025-53770 won’t be the last time attackers weaponize a collaboration platform you rely on every day. With Sentra DSPM, you know exactly where your sensitive data is, how exposed it is, and how to shrink that exposure continuously.

With Sentra DSPM, you gain more than visibility. You get the ability to map your most sensitive data, detect threats in real time, and respond with confidence - all while proving compliance and minimizing business impact.

It’s not just about patching faster. It’s about defending what matters most: your data.

Get our checklist on how Sentra DSPM helps neutralize SharePoint zero-day risks and protects your most critical data before the next exploit hits.

AI in Data Security: Guardian Angel or Trojan Horse?

Artificial intelligence (AI) is transforming industries, empowering companies to achieve greater efficiency and maintain a competitive edge. But here’s the catch: although AI unlocks unprecedented opportunities, its rapid adoption also introduces complex challenges, especially for data security and privacy.

How do you accelerate transformation without compromising the integrity of your data? How do you harness AI’s power without it becoming a threat?

For security leaders, AI presents this very paradox. It is a powerful tool for mitigating risk through better detection of sensitive data, more accurate classification, and real-time response. However, it also introduces complex new risks, including expanded attack surfaces, sophisticated threat vectors, and compliance challenges.

As AI becomes ubiquitous and enterprise data systems become increasingly distributed, organizations must navigate the complexities of the big-data AI era to scale AI adoption safely.

In this article, we explore the emerging challenges of using AI in data security and offer practical strategies to help organizations secure sensitive data.

The Emerging Challenges for Data Security with AI

AI systems depend on vast amounts of data, and this reliance introduces significant security risks, both from internal AI usage and from external client-side AI applications. As organizations integrate AI deeper into their operations, security leaders must recognize and mitigate the growing vulnerabilities that come with it.

Below, we outline the four biggest AI security challenges that will shape how you protect data and how you can address them.

1. Expanded Attack Surfaces

AI’s dependence on massive datasets—often unstructured and spread across cloud environments—creates an expansive attack surface. This data sprawl increases exposure to adversarial threats, such as model inversion attacks, where bad actors can reverse-engineer AI models to extract sensitive attributes or even re-identify anonymized data.

To put this in perspective, an AI system trained on healthcare data could inadvertently leak protected health information (PHI) if improperly secured. As adversaries refine their techniques, protecting AI models from data leakage must be a top priority.

For a detailed analysis of this challenge, refer to NIST’s report, “Adversarial Machine Learning: A Taxonomy and Terminology of Attacks and Mitigations.”

2. Sophisticated and Evolving Threat Landscape

The same AI advancements that enable organizations to improve detection and response are also empowering threat actors. Attackers are leveraging AI to automate and enhance malicious campaigns, from highly targeted phishing attacks to AI-generated malware and deepfake fraud.

According to StrongDM's “The State of AI in Cybersecurity Report,” 65% of security professionals believe their organizations are unprepared for AI-driven threats. This highlights a critical gap: while AI-powered defenses continue to improve, attackers are innovating just as fast—if not faster. Organizations must adopt AI-driven security tools and proactive defense strategies to keep pace with this rapidly evolving threat landscape.

3. Data Privacy and Compliance Risks

AI’s reliance on large datasets introduces compliance risks for organizations bound by regulations such as GDPR, CCPA, or HIPAA. Improper handling of sensitive data within AI models can lead to regulatory violations, fines, and reputational damage. One of the biggest challenges is AI’s opacity—in many cases, organizations lack full visibility into how AI systems process, store, and generate insights from data. This makes it difficult to prove compliance, implement effective governance, or ensure that AI applications don’t inadvertently expose personally identifiable information (PII). As regulatory scrutiny on AI increases, businesses must prioritize AI-specific security policies and governance frameworks to mitigate legal and compliance risks.

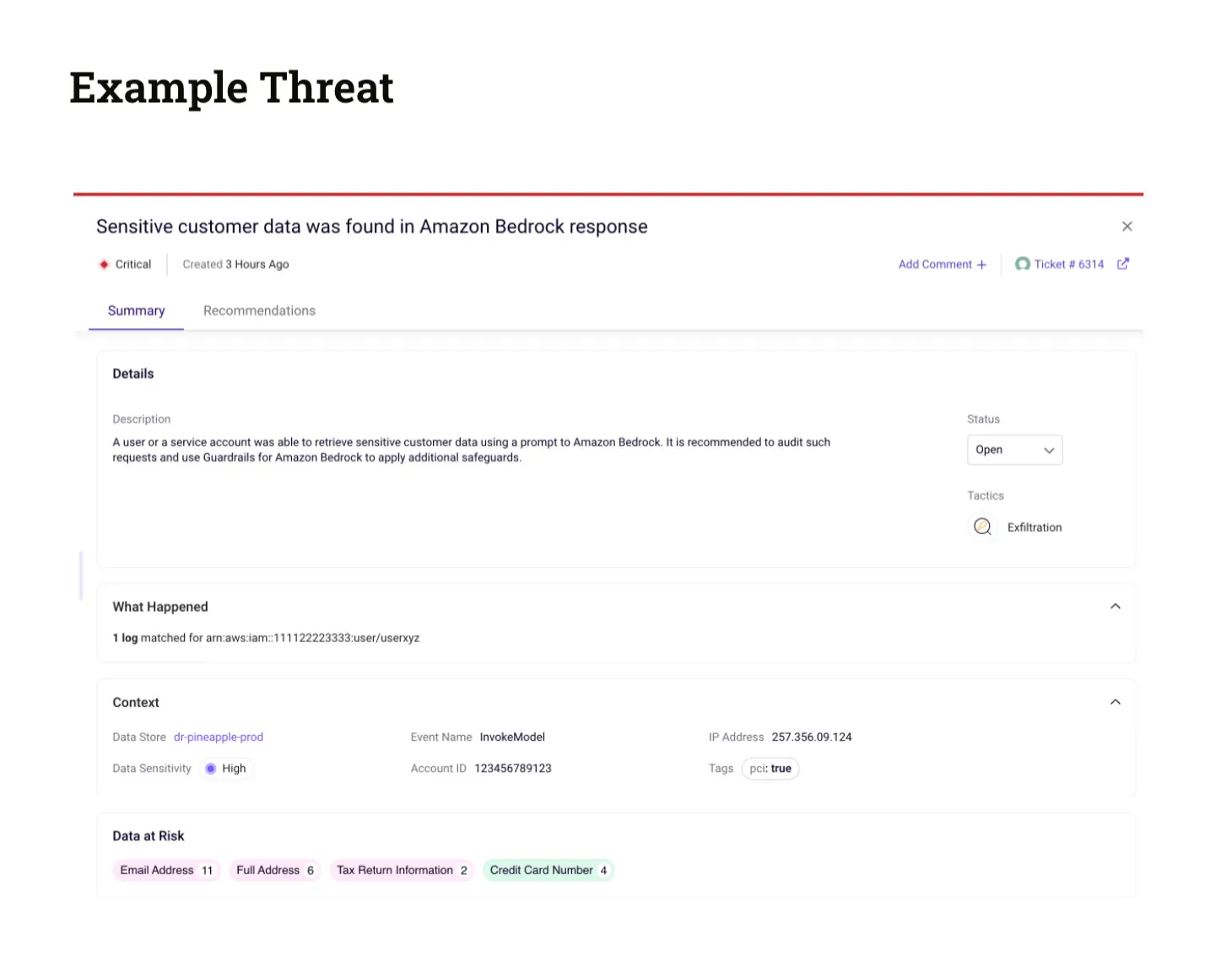

4. Risk of Unintentional Data Exposure

Even without malicious intent, generative AI models can unintentionally leak sensitive or proprietary data. For instance, employees using AI tools may unknowingly input confidential information into public models, which could then become part of the model’s training data and later be disclosed through the model’s outputs. Generative AI models—especially large language models (LLMs)—are particularly susceptible to data extrapolation attacks, where adversaries manipulate prompts to extract hidden information.

Techniques like “divergence attacks” on ChatGPT can expose training data, including sensitive enterprise knowledge or personally identifiable information. The risks are real, and the pace of AI adoption makes data security awareness across the organization more critical than ever.
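One partial safeguard against the first scenario above (confidential text pasted into a public model) is to redact obvious identifiers before a prompt leaves the organization. The sketch below is deliberately simple and assumes only two patterns, email addresses and US-style Social Security numbers. The function name `redact` and the pattern set are illustrative, and real deployments would need far broader coverage.

```python
import re

# Illustrative patterns only; real coverage would need many more.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(prompt: str) -> str:
    """Replace each matched identifier with a bracketed placeholder label."""
    for label, pattern in PATTERNS.items():
        prompt = pattern.sub(f"[{label}]", prompt)
    return prompt


print(redact("Contact jane.doe@example.com, SSN 123-45-6789."))
```

Pattern-based redaction misses free-text secrets such as contract terms or source code, so it complements usage guidelines rather than replacing them.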

For further insights, explore our analysis of “Emerging Data Security Challenges in the LLM Era.”

Top 5 Strategies for Securing Your Data with AI

To integrate AI responsibly into your security posture, a proactive approach is essential. Below we outline five key strategies to maximize AI’s benefits while mitigating the risks posed by evolving threats. When implemented holistically, these strategies will empower you to leverage AI’s full potential while keeping your data secure.

1. Data Minimization, Masking, and Encryption

The most effective way to reduce risk exposure is by minimizing sensitive data usage whenever possible. Avoid storing or processing sensitive data unless absolutely necessary. Instead, use techniques like synthetic data generation and anonymization to replace sensitive values during AI training and analysis.

When sensitive data must be retained, data masking techniques—such as name substitution or data shuffling—help protect confidentiality while preserving data utility. However, if data must remain intact, end-to-end encryption is critical. Encrypt data both in transit and at rest, especially in cloud or third-party environments, to prevent unauthorized access.
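The two masking techniques named above can be sketched with the standard library. This is a minimal illustration, not a vetted privacy control. The function names, the salt handling, and the sample records are assumptions. Salted hashing is pseudonymization rather than true anonymization, and shuffling preserves a column's overall distribution while breaking its link to individual rows.

```python
import hashlib
import random


def pseudonymize(name: str, salt: str) -> str:
    """Name substitution: map a real name to a stable, opaque token."""
    digest = hashlib.sha256((salt + name).encode("utf-8")).hexdigest()
    return f"user_{digest[:8]}"


def shuffle_column(rows, column, seed=None):
    """Data shuffling: permute one column's values across rows, leaving others intact."""
    rng = random.Random(seed)
    values = [row[column] for row in rows]
    rng.shuffle(values)
    return [{**row, column: value} for row, value in zip(rows, values)]


# Made-up records for illustration.
records = [
    {"name": "Alice Example", "zip": "10001"},
    {"name": "Bob Example", "zip": "94105"},
    {"name": "Carol Example", "zip": "60601"},
]

masked = [{**r, "name": pseudonymize(r["name"], salt="demo-salt")} for r in records]
masked = shuffle_column(masked, "zip", seed=7)
print(masked)
```

In practice the salt would be a managed secret, since anyone who knows it can recompute tokens for guessed names.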

2. Data Governance and Compliance with AI-SPM

Governance and compliance frameworks must evolve to account for AI-driven data processing. AI Security Posture Management (AI-SPM) tools help automate compliance monitoring and enforce governance policies across hybrid and cloud environments.

AI-SPM tools enable:

- Automated data lineage mapping to track how sensitive data flows through AI systems.

- Proactive compliance monitoring to flag data access violations and regulatory risks before they become liabilities.

By integrating AI-SPM into your security program, you ensure that AI-powered workflows remain compliant, transparent, and properly governed throughout their lifecycle.

3. Secure Use of AI Cloud Tools

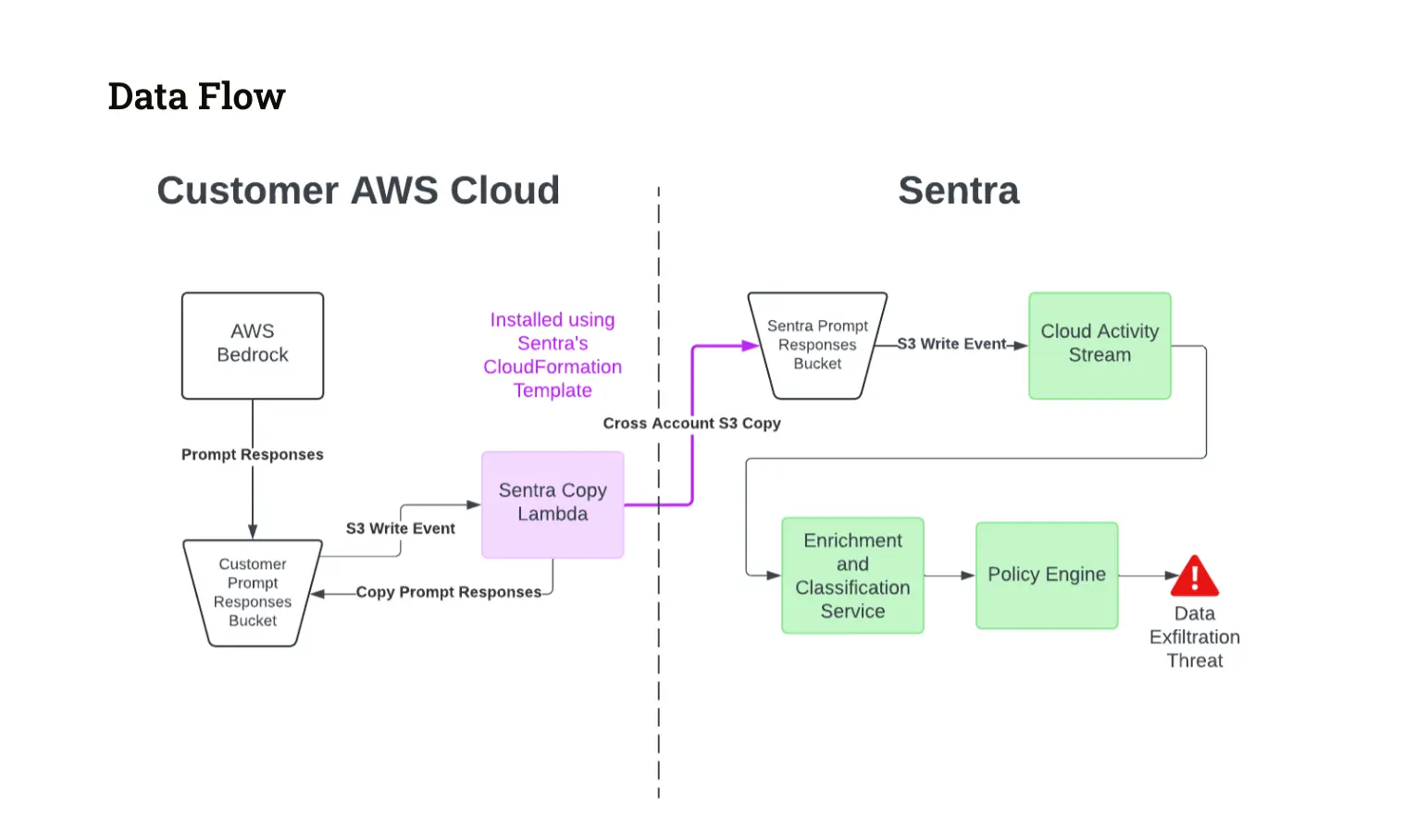

AI cloud tools accelerate AI adoption, but they also introduce unique security risks. Whether you’re developing custom models or leveraging pre-trained APIs, choosing trusted providers like Amazon Bedrock or Google’s Vertex AI ensures built-in security protections.

However, third-party security is not a substitute for internal controls. To safeguard sensitive workloads, your organization should:

- Implement strict encryption policies for all AI cloud interactions.

- Enforce data isolation to prevent unauthorized access.

- Regularly review vendor agreements and security guarantees to ensure compliance with internal policies.

Cloud AI tools can enhance your security posture, but always check your AI providers’ published guarantees (e.g., OpenAI's security and privacy page) against your company’s security policies.

4. Risk Assessments and Red Team Testing

While offline assessments provide an initial security check, AI models behave differently in live environments—introducing unpredictable risks. Continuous risk assessments are critical for detecting vulnerabilities, including adversarial threats and data leakage risks.

Additionally, red team exercises simulate real-world AI attacks before threat actors can exploit weaknesses. A proactive testing cycle ensures AI models remain resilient against emerging threats.

To maintain AI security over time, adopt a continuous feedback loop, incorporating lessons learned from each assessment to strengthen your AI systems.

5. Organization-Wide AI Usage Guidelines

AI security isn’t just a technical challenge—it’s an organizational imperative. To democratize AI security, companies must embed AI risk awareness across all teams.

- Establish clear AI usage policies based on zero trust and least privilege principles.

- Define strict guidelines for data sharing with AI platforms to prevent shadow AI risks.

- Integrate AI security into broader cybersecurity training to educate employees on emerging AI threats.

By fostering a security-first culture, organizations can mitigate AI risks at scale and ensure that security teams, developers, and business leaders align on responsible AI practices.

Key Takeaways: Moving Towards Proactive AI Security

AI is transforming how we manage and protect data, but it also introduces new risks that demand ongoing vigilance. By taking a proactive, security-first approach, you can stay ahead of AI-driven threats and build a resilient, future-ready AI security framework.

AI integration is no longer optional for modern enterprises—it is both inevitable and transformative. While AI offers immense potential, particularly in security applications, it also introduces significant risks, especially around data security. Organizations that fail to address these challenges proactively risk increased exposure to evolving threats, compliance failures, and operational disruptions.

By implementing strategies such as data minimization, strong governance, and secure AI adoption, organizations can mitigate these risks while leveraging AI’s full potential. A proactive security approach ensures that AI enhances—not compromises—your overall cybersecurity posture. As AI-driven threats evolve, investing in comprehensive, AI-aware security measures is not just a best practice but a competitive necessity. Sentra’s Data Security Platform provides the necessary visibility and control, integrating advanced AI security capabilities to protect sensitive data across distributed environments.

To learn how Sentra can strengthen your organization’s AI security posture with continuous discovery, automated classification, threat monitoring, and real-time remediation, request a demo today.

Enhancing AI Governance: The Crucial Role of Data Security

In today’s hyper-connected world, where big data powers decision-making, artificial intelligence (AI) is transforming industries and user experiences around the globe. Yet, while AI technology brings exciting possibilities, it also raises pressing concerns, particularly related to security, compliance, and ethical integrity.

As AI adoption accelerates, fueled by increasingly vast and unstructured data sources, organizations seeking to secure AI deployments (and investments) must establish a strong AI governance initiative with data governance at its core.

This article delves into the essentials of AI governance, outlines its importance, examines the challenges involved, and presents best practices to help companies implement a resilient, secure, and ethically sound AI governance framework centered around data.

What is AI Governance?

AI governance encompasses the frameworks, practices, and policies that guide the responsible, safe, and ethical use of AI systems across an organization. Effective AI governance integrates technical elements—data, models, and code—with human oversight for a holistic framework that evolves alongside an organization’s AI initiatives.

Embedding AI governance, along with related data security measures, into organizational practices not only guarantees responsible AI use but also long-term success in an increasingly AI-driven world.

With an AI governance structure rooted in secure data practices, your company can:

- Mitigate risks: Ongoing AI risk assessments can proactively identify and address potential threats, such as algorithmic bias, transparency gaps, and potential data leakage; this ensures fairer AI outcomes while minimizing reputational and regulatory risks tied to flawed or opaque AI systems.

- Ensure strict adherence: Effective AI governance and compliance policies create clear accountability structures, aligning AI deployments and data use with both internal guidelines and the broader regulatory landscape such as data privacy laws or industry-specific AI standards.

- Optimize AI performance: Centralized AI governance provides full visibility into your end-to-end AI deployments, from data sources and engineered feature sets to trained models and inference endpoints; this facilitates faster and more reliable AI innovations while reducing security vulnerabilities.

- Foster trust: Ethical AI governance practices, backed by strict data security, reinforce trust by ensuring AI systems are transparent and safe, which is crucial for building confidence among both internal and external stakeholders.

A robust AI governance framework means your organization can safeguard sensitive data, build trust, and responsibly harness AI’s transformative potential, all while maintaining a transparent and aligned approach to AI.

Why Data Governance Is at the Center of AI Governance

Data governance is key to effective AI governance because AI systems require high-quality, secure data to properly function. Accurate, complete, and consistent data is a must for AI performance and the decisions that guide it. Additionally, a strong data access governance platform enables organizations to navigate complex regulatory landscapes and mitigate ethical concerns related to bias.

Through a structured data governance framework, organizations can not only achieve compliance but also leverage data as a strategic asset, ultimately leading to more reliable and ethical AI outcomes.

Risks of Not Having a Data-Driven AI Governance Framework

AI systems are inherently complex, non-deterministic, and highly adaptive—characteristics that pose unique challenges for governance.

Many organizations face difficulty blending AI governance with their existing data governance and IT protocols; however, a centralized approach to governance is necessary for comprehensive oversight.

Without a data-centric AI governance framework, organizations face risks such as:

- Opaque decision-making: Without clear lineage and governance, it becomes difficult to trace and interpret AI decisions, which can lead to unethical, discriminatory, or harmful outcomes.

- Data breaches: AI systems rely on large volumes of data, making rigorous data security protocols essential to avoid leaks of sensitive information across an extended attack surface covering both model inputs and outputs.

- Regulatory non-compliance: The fast-paced evolution of AI regulations means organizations without a governance framework risk large penalties for non-compliance and potential reputational damage.

For more insights on managing AI and data privacy compliance, see our tips for security leaders.

Implementing AI Governance: A Balancing Act

While centralized, robust AI governance is crucial, implementing it successfully poses significant challenges. Organizations must find a balance between driving innovation and maintaining strict oversight of AI operations.

A primary issue is ensuring that governance processes are both adaptable enough to support AI innovation and stringent enough to uphold data security and regulatory compliance. This balance is difficult to achieve, particularly as AI regulations vary widely across jurisdictions and are frequently updated.

Another key challenge is the demand for continuous monitoring and auditing. Effective governance requires real-time tracking of data usage, model behavior, and compliance adherence, which can add significant operational overhead if not managed carefully.

To address these challenges, organizations need an adaptive governance framework that prioritizes privacy, data security, and ethical responsibility, while also supporting operational efficiency and scalability.

Frameworks & Best Practices for Implementing Data-Driven AI Governance

While there is no universal model for AI governance, your organization can look to established frameworks, such as the AI Act or OECD AI Principles, to create a framework tailored to your own risk tolerance, industry regulations, AI use cases, and culture.

Below we explore key data-driven best practices—relevant across AI use cases—that can best help you structure an effective and secure data-centric AI governance framework.

Adopt a Lifecycle Approach

A lifecycle approach divides oversight into stages. Implementing governance at each stage of the AI lifecycle enables thorough oversight of projects from start to finish following a multi-layered security strategy.

For example, in the development phase, teams can conduct data risk assessments, while ongoing performance monitoring ensures long-term alignment with governance policies and control over data drift.

Prioritize Data Security

Protecting sensitive data is foundational to responsible AI governance. Begin by achieving full visibility into data assets, categorize them by relevance, and then assign risk scores to prioritize security actions.

An advanced data risk assessment combined with data detection and response (DDR) can help you streamline risk scoring and threat mitigation across your entire data catalog, ensuring a strong data security posture.
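As a rough illustration of risk scoring, the sketch below multiplies a sensitivity weight by an exposure weight and sorts assets by the result. The scales, weights, and asset names are hypothetical. A real assessment would draw on many more signals, and this is not how any specific product computes its scores.

```python
# Hypothetical ordinal scales; higher means riskier.
SENSITIVITY = {"public": 0, "internal": 1, "confidential": 2, "restricted": 3}
EXPOSURE = {"private": 1, "org_wide": 2, "public": 3}


def risk_score(asset: dict) -> int:
    """Score = sensitivity weight x exposure weight."""
    return SENSITIVITY[asset["sensitivity"]] * EXPOSURE[asset["exposure"]]


def prioritize(assets):
    """Return assets ordered from highest to lowest risk score."""
    return sorted(assets, key=risk_score, reverse=True)


# Made-up catalog entries for illustration.
assets = [
    {"name": "wiki", "sensitivity": "internal", "exposure": "org_wide"},
    {"name": "id-scans", "sensitivity": "restricted", "exposure": "public"},
    {"name": "crm-export", "sensitivity": "confidential", "exposure": "org_wide"},
]

for asset in prioritize(assets):
    print(asset["name"], risk_score(asset))
```

A multiplicative score means non-sensitive data scores zero however exposed it is, which keeps attention on the combinations that matter.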

Adopt a Least Privilege Access Model

Restricting data access based on user roles and responsibilities limits unauthorized access and aligns with a zero-trust security approach. By ensuring that sensitive data is accessible only to those who need it for their work via least privilege, you reduce the risk of data breaches and enhance overall data security.
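A least-privilege model is, at its simplest, an explicit allow-list with deny as the default. The sketch below assumes a hypothetical mapping of roles to datasets, with the role and dataset names invented for illustration. Production systems would normally delegate this to an identity and access management service rather than an in-code table.

```python
# Hypothetical role-to-dataset grants; anything not listed is denied.
ROLE_GRANTS = {
    "ml_engineer": {"training_features"},
    "support_agent": {"tickets"},
    "privacy_officer": {"training_features", "raw_pii", "tickets"},
}


def can_access(role: str, dataset: str) -> bool:
    """Allow only explicitly granted datasets; unknown roles get nothing."""
    return dataset in ROLE_GRANTS.get(role, set())


print(can_access("ml_engineer", "training_features"))
print(can_access("ml_engineer", "raw_pii"))
```

The important property is the default: a new role or a new dataset starts with no access until someone grants it deliberately.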

Establish Data Quality Monitoring

Ongoing data quality checks help maintain data integrity and accuracy, meaning AI systems will be trained on high-quality data sets and serve quality requests. Implement processes for continuous monitoring of data quality and regularly assess data integrity and accuracy; this will minimize risks associated with poor data quality and improve AI performance by keeping data aligned with governance standards.
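One simple form of continuous quality monitoring is a completeness check that runs on every incoming batch. The sketch below is a minimal example under stated assumptions: the field names, the threshold, and the sample batch are hypothetical, and completeness is only one of several quality dimensions worth tracking.

```python
def completeness(rows, required_fields):
    """Return the fraction of rows with a non-empty value for each required field."""
    total = len(rows)
    report = {}
    for field in required_fields:
        filled = sum(1 for row in rows if row.get(field) not in (None, ""))
        report[field] = filled / total if total else 0.0
    return report


def failing_fields(rows, required_fields, minimum=0.95):
    """List the fields whose completeness falls below the minimum ratio."""
    scores = completeness(rows, required_fields)
    return [field for field, ratio in scores.items() if ratio < minimum]


# Made-up batch for illustration.
batch = [
    {"age": 34, "country": "US"},
    {"age": 41, "country": "DE"},
    {"age": 51, "country": ""},
    {"age": 29, "country": "FR"},
]

print(completeness(batch, ["age", "country"]))
print(failing_fields(batch, ["age", "country"], minimum=0.9))
```

A failing field could block the batch from reaching training or raise a ticket, depending on how strict the governance policy is.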

Implement AI-Specific Detection and Response Mechanisms

Continuous monitoring of AI systems for anomalies in data patterns or performance is critical for detecting risks before they escalate. Anomaly detection for AI deployments can alert security teams in real time to unusual access patterns or shifts in model performance. Automated incident response protocols guarantee quick intervention, maintaining AI output integrity and protecting against potential threats.
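A basic way to flag unusual access volume is a z-score against recent history. The sketch below is a simplified illustration and not a production detector. The function name, the threshold of 3 standard deviations, and the sample counts are assumptions, and real systems typically account for seasonality and per-user baselines.

```python
from statistics import mean, pstdev


def is_anomalous(history, today, threshold=3.0):
    """Flag today's count if it is more than `threshold` standard deviations from the mean."""
    mu = mean(history)
    sigma = pstdev(history)
    if sigma == 0:
        # Flat history: any change at all is a deviation.
        return today != mu
    return abs(today - mu) / sigma > threshold


# Made-up daily read counts against a sensitive dataset.
daily_reads = [100, 110, 95, 105, 90]

print(is_anomalous(daily_reads, 400))
print(is_anomalous(daily_reads, 108))
```

The same check applies to model-side signals such as error rates or output lengths, as long as a stable baseline exists.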

A data security posture management (DSPM) tool allows you to incorporate continuous monitoring with minimum overhead to facilitate proactive risk management.

Conclusion

AI governance is essential for responsible, secure, and compliant AI deployments. By prioritizing data governance, organizations can effectively manage risks, enhance transparency, and align with ethical standards while maximizing the operational performance of AI.

As AI technology evolves, governance frameworks must be adaptive, ready to address advancements such as generative AI, and capable of complying with new regulations, like the UK GDPR.

To learn how Sentra can streamline your data and AI compliance efforts, explore our data security platform guide.

Or, see Sentra in action today by signing up for a demo.

AI & Data Privacy: Challenges and Tips for Security Leaders

Balancing Trust and Unpredictability in AI

AI systems represent a transformative advancement in technology, promising innovative progress across various industries. Yet, their inherent unpredictability introduces significant concerns, particularly regarding data security and privacy. Developers face substantial challenges in ensuring the integrity and reliability of AI models amidst this unpredictability.

This uncertainty complicates matters for buyers, who rely on trust when investing in AI products. Establishing and maintaining trust in AI necessitates rigorous testing, continuous monitoring, and transparent communication regarding potential risks and limitations. Developers must implement robust safeguards, while buyers benefit from being informed about these measures to mitigate risks effectively.

AI and Data Privacy

Data privacy is a critical component of AI security. As AI systems often rely on vast amounts of personal data to function effectively, ensuring the privacy and security of this data is paramount. Breaches of data privacy can lead to severe consequences, including identity theft, financial loss, and erosion of trust in AI technologies. Developers must implement stringent data protection measures, such as encryption, anonymization, and secure data storage, to safeguard user information.

The Role of Data Privacy Regulations in AI Development

Data privacy regulations are playing an increasingly significant role in the development and deployment of AI technologies. As AI continues to advance globally, regulatory frameworks are being established to ensure the ethical and responsible use of these powerful tools.

- Europe:

The European Parliament has approved the AI Act, a comprehensive regulatory framework designed to govern AI technologies. This Act is set to be completed by June and will become fully applicable 24 months after its entry into force, with some provisions becoming effective even sooner. The AI Act aims to balance innovation with stringent safeguards to protect privacy and prevent misuse of AI.

- California:

In the United States, California is at the forefront of AI regulation. A bill concerning AI and its training processes has progressed through legislative stages, having been read for the second time and now ordered for a third reading. This bill represents a proactive approach to regulating AI within the state, reflecting California's leadership in technology and data privacy.

- Self-Regulation:

In addition to government-led initiatives, there are self-regulation frameworks available for companies that wish to proactively manage their AI operations. The National Institute of Standards and Technology (NIST) AI Risk Management Framework (RMF) and the ISO/IEC 42001 standard provide guidelines for developing trustworthy AI systems. Companies that adopt these standards not only enhance their operational integrity but also position themselves to better align with future regulatory requirements.

- NIST Model for a Trustworthy AI System:

The NIST model outlines key principles for developing AI systems that are ethical, accountable, and transparent. This framework emphasizes the importance of ensuring that AI technologies are reliable, secure, and unbiased. By adhering to these guidelines, organizations can build AI systems that earn public trust and comply with emerging regulatory standards.

Understanding and adhering to these regulations and frameworks is crucial for any organization involved in AI development. Not only do they help in safeguarding privacy and promoting ethical practices, but they also prepare organizations to navigate the evolving landscape of AI governance effectively.

How to Build Secure AI Products

Ensuring the integrity of AI products is crucial for protecting users from potential harm caused by errors, biases, or unintended consequences of AI decisions. Safe AI products foster trust among users, which is essential for the widespread adoption and positive impact of AI technologies. These technologies increasingly affect many aspects of our lives, from healthcare and finance to transportation and personal devices, which makes their security a critical topic.

How can developers build secure AI products?

- Remove sensitive data from training data (pre-training): Addressing this task is challenging, due to the vast amounts of data involved in AI-training, and the lack of automated methods to detect all types of sensitive data.

- Test the model for privacy compliance (pre-production): As with any software, both manual and automated tests are run before production. But how can users guarantee that sensitive data isn’t exposed during testing? Developers must explore innovative approaches to automate this process and ensure continuous monitoring of privacy compliance throughout the development lifecycle.

- Implement proactive monitoring in production: Even with thorough pre-production testing, no model can guarantee complete immunity from privacy violations in real-world scenarios. Continuous monitoring during production is essential to promptly detect and address any unexpected privacy breaches. Leveraging advanced anomaly detection techniques and real-time monitoring systems can help developers identify and mitigate potential risks promptly.
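To make the first step above more concrete, here is a minimal sketch of scrubbing training text before it reaches a model. It assumes only two illustrative patterns (email addresses and US Social Security numbers in their standard dashed form); the pattern names and placeholder format are hypothetical. As the list notes, no automated method catches every type of sensitive data, and regular expressions in particular only find well-formed, predictable values.

```python
import re

# Illustrative patterns only; real pipelines need far broader coverage.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text):
    """Replace each match with a [LABEL] placeholder.

    Returns the redacted text and a per-label count of replacements,
    which can be logged to track how much sensitive data was found.
    """
    counts = {}
    for label, pattern in PATTERNS.items():
        text, replaced = pattern.subn(f"[{label}]", text)
        counts[label] = replaced
    return text, counts


sample = "Contact jane.doe@example.com, SSN 123-45-6789."
clean, found = redact(sample)
# clean is "Contact [EMAIL], SSN [US_SSN]."
```

The same function could be reused in pre-production tests, for example by asserting that model outputs produce zero matches.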

Secure LLMs Across the Entire Development Pipeline With Sentra


Gain Comprehensive Visibility and Secure Training Data (Sentra’s DSPM)

- Automatically discover and classify sensitive information within your training datasets.

- Protect against unauthorized access with robust security measures.

- Continuously monitor your security posture to identify and remediate vulnerabilities.

Monitor Models in Real Time (Sentra’s DDR)

- Detect potential leaks of sensitive data by continuously monitoring model activity logs.

- Proactively identify threats such as data poisoning and model theft.

- Seamlessly integrate with your existing CI/CD and production systems for effortless deployment.

Finally, Sentra helps you effortlessly comply with industry regulations like NIST AI RMF and ISO/IEC 42001, preparing you for future governance requirements. This comprehensive approach minimizes risks and empowers developers to confidently state:

"This model was thoroughly tested for privacy safety using Sentra," fostering trust in your AI initiatives.

As AI continues to redefine industries, prioritizing data privacy is essential for responsible AI development. Implementing stringent data protection measures, adhering to evolving regulatory frameworks, and maintaining proactive monitoring throughout the AI lifecycle are crucial.

By prioritizing strong privacy measures from the start, developers not only build trust in AI technologies but also maintain ethical standards essential for long-term use and societal approval.


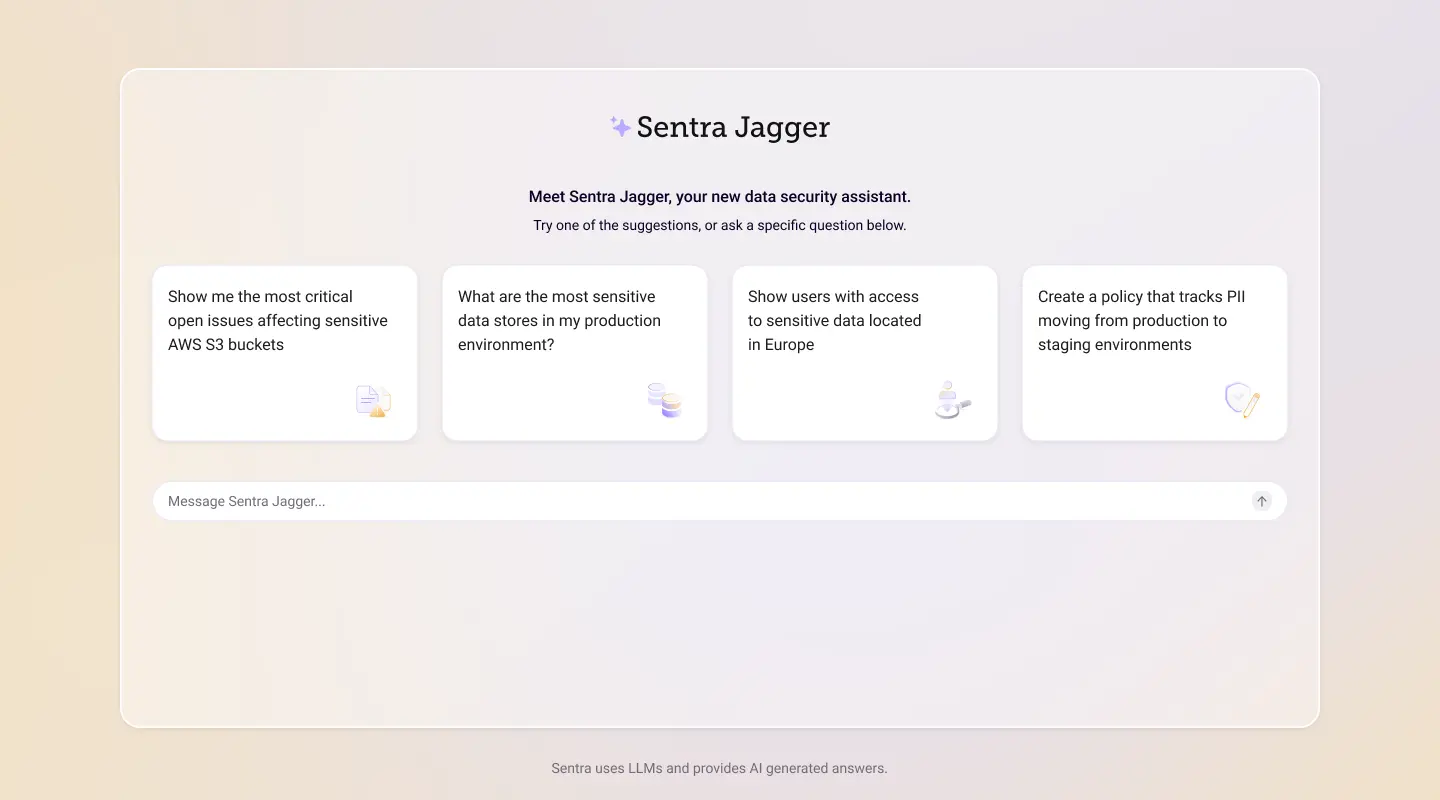

New AI-Assistant, Sentra Jagger, Is a Game Changer for DSPM and DDR


Evolution of Large Language Models (LLMs)

In the early 2000s, search engines such as Google and Yahoo gained widespread popularity. Users found them to be a convenient tool, effortlessly bringing a wealth of information to their fingertips. Fast forward to the 2020s, and Large Language Models (LLMs) are pushing productivity to the next level. LLMs remove the learning curve, seamlessly bridging the gap between technology and the user.

LLMs create a natural interface between the user and the platform. By interpreting natural language queries, they effortlessly translate human requests into software actions and technical operations. This simplifies technology to make it close to invisible. Users no longer need to understand the technology itself, or how to get certain data — they can just input any query, and LLMs will simplify it.

Revolutionizing Cloud Data Security With Sentra Jagger

Sentra Jagger is an industry-first AI assistant for cloud data security, based on a Large Language Model (LLM).

It enables users to quickly analyze and respond to security threats, cutting task times by up to 80%. Jagger answers data security questions and assists with tasks such as customizing and enforcing policies, adjusting settings, creating new data classifiers, and generating compliance reports. By reducing the time for investigating and addressing security threats, Sentra Jagger enhances operational efficiency and reinforces security measures.

Security teams can access insights and recommendations on specific security actions through an interactive, user-friendly interface. Customizable dashboards, tailored to user roles and preferences, enhance visibility into an organization's data. Users can directly inquire about findings, eliminating the need to navigate through complicated portals or ancillary information.

Benefits of Sentra Jagger

- Accessible Security Insights: Simplified interpretation of complex security queries, offering clear and concise explanations in plain language to empower users across different levels of expertise. This helps users make informed decisions swiftly, and confidently take appropriate actions.

- Enhanced Incident Response: Clear steps to identify and fix issues, making the process faster, minimizing downtime and damage, and restoring normal operations promptly.

- Unified Security Management: Integration with existing tools, creating a unified security management experience and providing a complete view of the organization's data security posture. Jagger also speeds solution customization and tuning.

Why Sentra Jagger Is Changing the Game for DSPM and DDR

Sentra Jagger is an essential tool for simplifying the complexities of both Data Security Posture Management (DSPM) and Data Detection and Response (DDR) functions. DSPM discovers and accurately classifies your sensitive data anywhere in the cloud environment, understands who can access this data, and continuously assesses its vulnerability to security threats and risk of regulatory non-compliance. DDR focuses on swiftly identifying and responding to security incidents and emerging threats, ensuring that the organization’s data remains secure. With their ability to interpret natural language, LLM-based assistants such as Sentra Jagger serve as transformative agents in bridging the comprehension gap between cybersecurity professionals and the intricate worlds of DSPM and DDR.

Data Security Posture Management (DSPM)

When it comes to data security posture management (DSPM), Sentra Jagger empowers users to articulate security-related queries in plain language, seeking insights into cybersecurity strategies, vulnerability assessments, and proactive threat management.

The language models not only comprehend the linguistic nuances but also translate these queries into actionable insights, making data security more accessible to a broader audience. This democratization of security knowledge is a pivotal step forward, enabling organizations to empower diverse teams (including privacy, governance, and compliance roles) to actively engage in bolstering their data security posture without requiring specialized cybersecurity training.

Data Detection and Response (DDR)

In the realm of data detection and response (DDR), Sentra Jagger contributes to breaking down technical barriers by allowing users to interact with the platform to seek information on DDR configurations, real-time threat detection, and response strategies. Our AI-powered assistant transforms DDR-related technical discussions into accessible conversations, empowering users to understand and implement effective threat protection measures without grappling with the intricacies of data detection and response technologies.

The integration of LLMs into the realms of DSPM and DDR marks a paradigm shift in how users will interact with and comprehend complex cybersecurity concepts. Their role as facilitators of knowledge dissemination removes traditional barriers, fostering widespread engagement with advanced security practices.

Sentra Jagger is a game changer because it makes advanced technological knowledge more inclusive, allowing organizations and individuals to fortify their cybersecurity practices with unprecedented ease. It helps security teams better communicate with and integrate within the rest of the business. As AI-powered assistants continue to evolve, so will their capacity to reshape the accessibility and comprehension of intricate technological domains.

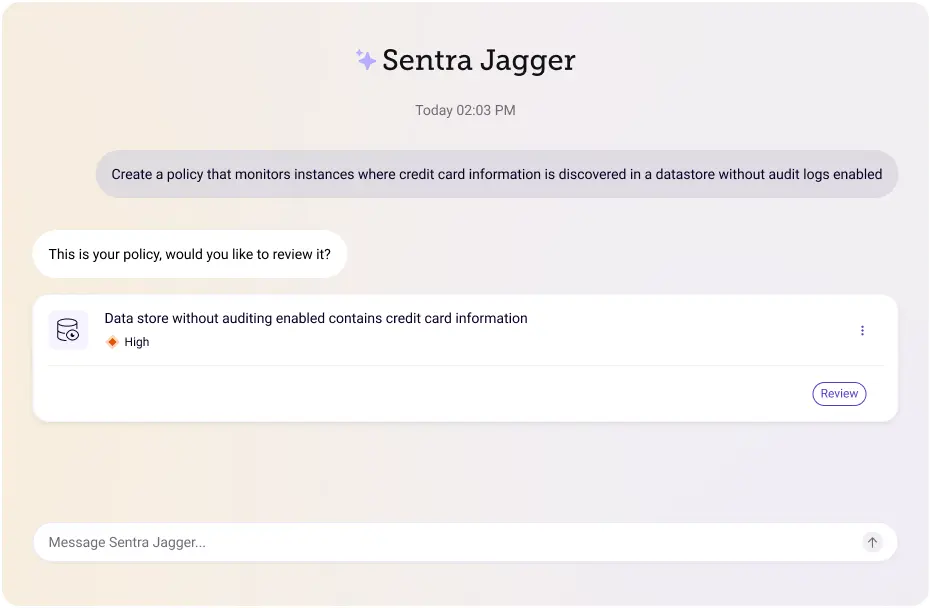

How CISOs Can Leverage Sentra Jagger

Consider a Chief Information Security Officer (CISO) in charge of cybersecurity at a healthcare company. To assess the security policies governing sensitive data in their environment, the CISO leverages Sentra’s Jagger AI assistant. If the CISO, let's call her Sara, needs to review the Sentra policy page, she can simply query Jagger instead of navigating manually, asking, "What policies are defined in my environment?" In response, Jagger provides a comprehensive list of policies, including their names, descriptions, active issues, creation dates, and status (enabled or disabled).

Sara can then add a custom policy related to GDPR, by simply describing it. For example, "add a policy that tracks European customer information moving outside of Europe". Sentra Jagger will translate the request using Natural Language Processing (NLP) into a Sentra policy and inform Sara about potential non-compliant data movement based on the recently added policy.

Upon thorough review, Sara identifies a need for a new policy: "Create a policy that monitors instances where credit card information is discovered in a datastore without audit logs enabled." Sentra Jagger initiates the process of adding this policy by prompting Sara for additional details and confirmation.

The LLM-assistant, Sentra Jagger, communicates, "Hi Sara, it seems like a valuable policy to add. Credit card information should never be stored in a datastore without audit logs enabled. To ensure the policy aligns with your requirements, I need more information. Can you specify the severity of alerts you want to raise and any compliance standards associated with this policy?" Sara responds, stating, "I want alerts to be raised as high severity, and I want the AWS CIS benchmark to be associated with it."

Having captured all the necessary information, Sentra Jagger compiles a summary of the proposed policy and sends it to Sara for her review and confirmation. After Sara confirms the details, the LLM-assistant, Sentra Jagger, seamlessly incorporates the new policy into the system. This streamlined interaction with LLMs enhances the efficiency of policy management for CISOs, enabling them to easily navigate, customize, and implement security measures in their organizations.
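To picture what a natural-language request like Sara's might turn into, the sketch below models her credit card policy as a small data structure and evaluates it against a datastore inventory. This is not Sentra's policy format or API; the `Policy` and `Datastore` classes, their field names, and the sample inventory are hypothetical and exist only to illustrate the idea of a rule with a severity and an associated compliance framework.

```python
from dataclasses import dataclass


@dataclass
class Datastore:
    name: str
    data_classes: set          # e.g. {"credit_card", "email"}
    audit_logs_enabled: bool


@dataclass
class Policy:
    name: str
    severity: str              # e.g. "high"
    frameworks: list           # associated compliance standards
    data_class: str            # the data class this policy watches

    def violations(self, datastores):
        """Return names of datastores that hold the watched data class
        but do not have audit logs enabled."""
        return [
            store.name
            for store in datastores
            if self.data_class in store.data_classes
            and not store.audit_logs_enabled
        ]


# The policy Sara described, expressed as data (hypothetical structure).
policy = Policy(
    name="Credit card data in datastore without audit logs",
    severity="high",
    frameworks=["AWS CIS benchmark"],
    data_class="credit_card",
)

inventory = [
    Datastore("orders-db", {"credit_card", "email"}, audit_logs_enabled=False),
    Datastore("billing-db", {"credit_card"}, audit_logs_enabled=True),
    Datastore("marketing-bucket", {"email"}, audit_logs_enabled=False),
]

flagged = policy.violations(inventory)
```

Only `orders-db` is flagged here: it is the one store that both contains credit card data and lacks audit logs.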

Conclusion

The advent of Large Language Models (LLMs) has changed the way we interact with and understand technology. Building on the legacy of search engines, LLMs eliminate the learning curve, seamlessly translating natural language queries into software and technical actions. This innovation removes friction between users and technology, making intricate systems nearly invisible to the end user.

For Chief Information Security Officers (CISOs) and ITSecOps, LLMs offer a game-changing approach to cybersecurity. By interpreting natural language queries, Sentra Jagger bridges the comprehension gap between cybersecurity professionals and the intricate worlds of DSPM and DDR. This democratization of security knowledge allows organizations to empower a wider audience to actively engage in bolstering their data security posture and responding to security incidents, revolutionizing the cybersecurity landscape.

To learn more about Sentra, schedule a demo with one of our experts.

What Is Shadow Data? Examples, Risks and How to Detect It


What is Shadow Data?

Shadow data refers to any organizational data that exists outside the centralized and secured data management framework. This includes data that has been copied, backed up, or stored in a manner not subject to the organization's preferred security structure. This elusive data may not adhere to access control limitations or be visible to monitoring tools, posing a significant challenge for organizations. Shadow data is the ultimate ‘known unknown’. You know it exists, but you don’t know exactly where it is. And, more importantly, because you don’t know how sensitive the data is, you can’t protect it in the event of a breach.

You can’t protect what you don’t know.

Where Does Shadow Data Come From?

Whether it’s created inadvertently or on purpose, data that becomes shadow data is simply data in the wrong place, at the wrong time. Let's delve deeper into some common examples of where shadow data comes from:

- Persistence of Customer Data in Development Environments:

The classic example is customer data that was copied and forgotten. Customer data gets copied from production into a dev environment to be used as test data. The problem starts when this duplicated data is forgotten and never erased, or is backed up to a less secure location. The data was secure in its original location and was never intended to be copied, or at least not copied and forgotten.

Unfortunately, this type of human error is common.

If this data does not get appropriately erased or backed up to a more secure location, it transforms into shadow data, susceptible to unauthorized access.

- Decommissioned Legacy Applications:

Another common example of shadow data involves decommissioned legacy applications. Consider what becomes of historical customer data or Personally Identifiable Information (PII) when migrating to a new application. Frequently, this data is left dormant in its original storage location, lingering there until a decision is made to delete it - or not. It may persist for a very long time, and in doing so, become increasingly invisible and a vulnerability to the organization.

- Business Intelligence and Analysis:

Your data scientists and business analysts will make copies of production data to mine it for trends and new revenue opportunities. They may test historic data, often housed in backups or data warehouses, to validate new business concepts and develop target opportunities. This shadow data may not be removed or properly secured once the analysis is complete, and it can become vulnerable to misuse or leakage.

- Migration of Data to SaaS Applications:

The migration of data to Software as a Service (SaaS) applications has become a prevalent phenomenon. In today's rapidly evolving technological landscape, employees frequently adopt SaaS solutions without formal approval from their IT departments, leading to a decentralized and unmonitored deployment of applications. This poses both opportunities and risks, as users seek streamlined workflows and enhanced productivity. On one hand, SaaS applications offer flexibility and accessibility, enabling users to access data from anywhere, anytime. On the other hand, the unregulated adoption of these applications can result in data security risks, compliance issues, and potential integration challenges.

- Use of Local Storage by Shadow IT Applications:

Last but not least, a breeding ground for shadow data is shadow IT applications, which can be created, licensed or used without official approval (think of a script or tool developed in house to speed workflow or increase productivity). The data produced by these applications is often stored locally, evading the organization's sanctioned data management framework. This not only poses a security risk but also introduces an uncontrolled element in the data ecosystem.

Shadow Data vs Shadow IT

You're probably familiar with the term "shadow IT," referring to technology, hardware, software, or projects operating beyond the governance of your corporate IT. Initially, this posed a significant security threat to organizational data, but as awareness grew, strategies and solutions emerged to manage and control it effectively. Technological advancements, particularly the widespread adoption of cloud services, ushered in an era of data democratization. This brought numerous benefits to organizations and consumers by increasing access to valuable data, fostering opportunities, and enhancing overall effectiveness.

However, employing the cloud also means data spreads to different places, making it harder to track. We no longer have fully self-contained systems on-site. With more access comes more risk. Now, the threat of unsecured shadow data has appeared. Unlike the relatively contained risks of shadow IT, shadow data stands out as the most significant menace to your data security.

The common questions that arise:

1. Do you know the whereabouts of your sensitive data?

2. What is this data’s security posture and what controls are applicable?

3. Do you possess the necessary tools and resources to manage it effectively?

Shadow data, a prevalent yet frequently underestimated challenge, demands attention. Fortunately, there are tools and resources you can use in order to secure your data without increasing the burden on your limited staff.

Data Breach Risks Associated with Shadow Data

The risks linked to shadow data are diverse and severe, ranging from potential data exposure to compliance violations. Uncontrolled shadow data poses a threat to data security, leading to data breaches, unauthorized access, and compromise of intellectual property.

The Business Impact of Data Security Threats

Shadow data represents not only a security concern but also a significant compliance and business issue. Attackers often target shadow data as an easily accessible source of sensitive information. Compliance risks arise, especially concerning personal, financial, and healthcare data, which demands meticulous identification and remediation. Moreover, unnecessary cloud storage incurs costs, emphasizing the financial impact of shadow data on the bottom line. Businesses can improve their return on investment and reduce cloud costs by better controlling shadow data.

As more enterprises are moving to the cloud, the concern of shadow data is increasing. Since shadow data refers to data that administrators are not aware of, the risk to the business depends on the sensitivity of the data. Customer and employee data that is improperly secured can lead to compliance violations, particularly when health or financial data is at risk. There is also the risk that company secrets can be exposed.

An example of this is when Sentra identified a large enterprise’s source code in an open S3 bucket. As part of working with this enterprise, Sentra was given 7 petabytes of data in AWS environments to scan for sensitive data. Specifically, we were looking for IP: source code, documentation, and other proprietary data. As usual, we discovered many issues; however, 7 needed to be remediated immediately. These 7 were defined as ‘critical’.

The most severe data vulnerability was source code in an open S3 bucket with 7.5 TB worth of data. The file was hiding in a 600 MB .zip file inside another .zip file. We also found recordings of client meetings and an 8.9 KB Excel file with all of their current and potential customer data. Unfortunately, a scenario like this could have taken months or even years to notice, if it was noticed at all. Luckily, we were able to discover this in time.
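The detail about a file hidden in a .zip inside another .zip shows why a scanner cannot stop at the top level of an archive. The sketch below, using only Python's standard `zipfile` module, walks nested archives in memory and lists every inner file path. It is an illustration rather than a description of how Sentra scans data: the function names, the `!/` path separator, and the `max_depth` limit are assumptions for this example, and a real scanner would also need size limits to guard against decompression bombs.

```python
import io
import zipfile


def list_nested_zip(data, prefix="", max_depth=5):
    """Yield paths of all files in a zip, descending into nested zips.

    `data` is the raw bytes of a zip archive. Nested archive members are
    shown as outer.zip!/inner/path. Recursion stops after `max_depth`
    levels, at which point a nested archive is reported as a plain file.
    """
    with zipfile.ZipFile(io.BytesIO(data)) as archive:
        for info in archive.infolist():
            if info.is_dir():
                continue
            path = prefix + info.filename
            payload = archive.read(info)
            if max_depth > 0 and zipfile.is_zipfile(io.BytesIO(payload)):
                yield from list_nested_zip(payload, path + "!/", max_depth - 1)
            else:
                yield path


def make_zip(files):
    """Build an in-memory zip from a {name: bytes} mapping (demo helper)."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as archive:
        for name, content in files.items():
            archive.writestr(name, content)
    return buffer.getvalue()


# Demo: source code tucked inside a zip within a zip.
inner = make_zip({"src/main.py": b"print('hi')"})
outer = make_zip({"backup/inner.zip": inner, "readme.txt": b"notes"})
paths = sorted(list_nested_zip(outer))
```

Note that `zipfile.is_zipfile` also returns True for zip-based formats such as .docx or .jar, so a classifier built on top of this would see the contents of those files as well.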

How You Can Detect and Minimize the Risk Associated with Shadow Data

Strategy 1: Conduct Regular Audits

Regular audits of IT infrastructure and data flows are essential for identifying and categorizing shadow data. Understanding where sensitive data resides is the foundational step toward effective mitigation. Automating the discovery process will offload this burden and allow the organization to remain agile as cloud data grows.

Strategy 2: Educate Employees on Security Best Practices

Creating a culture of security awareness among employees is pivotal. Training programs and regular communication about data handling practices can significantly reduce the likelihood of shadow data incidents.

Strategy 3: Embrace Cloud Data Security Solutions

Investing in cloud data security solutions is essential, given the prevalence of multi-cloud environments, cloud-driven CI/CD, and the adoption of microservices. These solutions offer visibility into cloud applications, monitor data transactions, and enforce security policies to mitigate the risks associated with shadow data.

How You Can Protect Your Sensitive Data with Sentra’s DSPM Solution

The trick with shadow data, as with any security risk, is not just in identifying it – but rather prioritizing the remediation of the largest risks. Sentra’s Data Security Posture Management follows sensitive data through the cloud, helping organizations identify and automatically remediate data vulnerabilities by:

- Finding shadow data where it’s not supposed to be:

Sentra is able to find all of your cloud data - not just the data stores you know about.

- Finding sensitive information with differing security postures:

Finding sensitive data that doesn’t seem to have an adequate security posture.

- Finding duplicate data:

Sentra discovers when multiple copies of data exist, tracks and monitors them across environments, and understands which parts are both sensitive and unprotected.

- Taking access into account:

Sometimes, legitimate data can be in the right place, but accessible to the wrong people. Sentra scrutinizes privileges across multiple copies of data, identifying and helping to enforce who can access the data.
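One simple way to think about duplicate detection is content hashing: two objects with the same digest hold the same bytes, wherever they live. The sketch below groups object locations by SHA-256 digest. It is only an illustration of the general idea, not Sentra's method; the function name and sample paths are hypothetical, and exact hashing will miss copies that were modified, re-encoded, or partially extracted.

```python
import hashlib
from collections import defaultdict


def find_duplicates(objects):
    """Group locations that hold byte-identical content.

    `objects` maps a location string to that object's bytes. Returns a
    list of sorted location groups, one per duplicated content blob.
    """
    by_digest = defaultdict(list)
    for location, content in objects.items():
        digest = hashlib.sha256(content).hexdigest()
        by_digest[digest].append(location)
    return [sorted(group) for group in by_digest.values() if len(group) > 1]


# Hypothetical inventory: a production file copied into a dev environment.
customer_csv = b"id,email\n1,a@example.com\n"
objects = {
    "prod/customers.csv": customer_csv,
    "dev/test-data.csv": customer_csv,
    "prod/logo.png": b"\x89PNG",
}

duplicates = find_duplicates(objects)
```

Here the dev copy is paired with its production original, which is the "copied and forgotten" scenario described earlier.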

Key Takeaways

Comprehending and addressing shadow data risks is integral to a robust data security strategy. By recognizing the risks, implementing proactive detection measures, and leveraging advanced security solutions like Sentra's DSPM, organizations can fortify their defenses against the evolving threat landscape.

Stay informed, and take the necessary steps to protect your valuable data assets.

To learn more about how Sentra can help you eliminate the risks of shadow data, schedule a demo with us today.


Transforming Data Security with Large Language Models (LLMs): Sentra’s Innovative Approach


In today's data-driven world, the success of any data security program hinges on the accuracy, speed, and scalability of its data classification efforts. Why? Because not all data is created equal, and precise data classification lays the essential groundwork for security professionals to understand the context of data-related risks and vulnerabilities. Armed with this knowledge, security operations (SecOps) teams can remediate in a targeted, effective, and prioritized manner, with the ultimate aim of proactively reducing an organization's data attack surface and risk profile over time.

Sentra is excited to introduce Large Language Models (LLMs) into its classification engine. This development empowers enterprises to proactively reduce the data attack surface while accurately identifying and understanding sensitive unstructured data such as employee contracts, source code, and user-generated content at scale.

Many enterprises today grapple with a multitude of data regulations and privacy frameworks while navigating the intricate world of cloud data. Sentra's announcement of adding LLMs to its classification engine is redefining how enterprise security teams understand, manage, and secure their sensitive and proprietary data on a massive scale. Moreover, as enterprises eagerly embrace AI's potential, they must also address unauthorized access or manipulation of Large Language Models (LLMs) and remain vigilant in detecting and responding to security risks associated with AI model training. Sentra is well-equipped to guide enterprises through this multifaceted journey.

A New Era of Data Classification

Identifying and managing unstructured data has always been a headache for organizations, whether it's legal documents buried in email attachments, confidential source code scattered across various folders, or user-generated content strewn across collaboration platforms. Imagine a scenario where an enterprise needs to identify all instances of employee contracts within its vast data repositories. Previously, this would have involved painstaking manual searches, leading to inefficiency, potential oversight, and increased security risks.

Sentra’s LLM-powered classification engine can now comprehend the context, sentiment, and nuances of unstructured data, enabling it to classify such data with a level of accuracy and granularity that was previously unimaginable. The model can analyze the content of documents, emails, and other unstructured data sources, not only identifying employee contracts but also providing valuable insights into their context. It can understand contract clauses, expiration dates, and even flag potential compliance issues. Similarly, for source code scattered across diverse folders, Sentra can recognize programming languages, identify proprietary code, and ensure that sensitive code is adequately protected.

When it comes to user-generated content on collaboration platforms, Sentra can analyze and categorize this data, making it easier for organizations to monitor and manage user interactions, ensuring compliance with their policies and regulations. This new classification approach not only aids in understanding the business context of unstructured customer data but also aligns seamlessly with compliance standards such as GDPR, CCPA, and HIPAA. Ensuring the highest level of security, Sentra exclusively scans data with LLM-based classifiers within the enterprise's cloud premises. The assurance that the data never leaves the organization’s environment removes an additional layer of risk.

Quantifying Risk: Prioritized Data Risk Scores

Automated data classification capabilities provide a solid foundation for data security management practices. What’s more, data classification speed and accuracy are paramount when striving for an in-depth comprehension of sensitive data and quantifying risk.

Sentra offers data risk scoring that considers multiple layers of data, including sensitivity scores, access permissions, user activity, data movement, and misconfigurations. This unique technology automatically scores the most critical data risks, providing security teams and executives with a clear, prioritized view of all their sensitive data at-risk, with the option to drill down deeply into the root cause of the vulnerability (often at a code level).

Having a clear, prioritized view of high-risk data at your fingertips empowers security teams to truly understand, quantify, and prioritize data risks while directing targeted remediation efforts.
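To show how several layers can combine into one prioritized number, the sketch below computes a weighted score from the five factors named above. The weights, the 0 to 1 factor scale, the 0 to 100 output range, and the asset names are all invented for illustration; this is not Sentra's scoring algorithm, which the text does not describe in detail.

```python
# Hypothetical weights; they sum to 1 so the score lands between 0 and 100.
WEIGHTS = {
    "sensitivity": 0.35,
    "access": 0.25,
    "activity": 0.15,
    "movement": 0.10,
    "misconfig": 0.15,
}


def risk_score(factors):
    """Combine per-factor values (each between 0 and 1) into a 0-100 score."""
    missing = set(WEIGHTS) - set(factors)
    if missing:
        raise ValueError(f"missing factors: {sorted(missing)}")
    for name in WEIGHTS:
        if not 0.0 <= factors[name] <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1")
    total = sum(WEIGHTS[name] * factors[name] for name in WEIGHTS)
    return round(100 * total, 1)


# Two made-up assets: an exposed bucket and a well-protected database.
assets = {
    "open-bucket": {"sensitivity": 0.9, "access": 1.0, "activity": 0.4,
                    "movement": 0.6, "misconfig": 1.0},
    "hr-database": {"sensitivity": 0.9, "access": 0.2, "activity": 0.3,
                    "movement": 0.1, "misconfig": 0.0},
}

# Highest risk first, which is the order a security team would triage in.
ranked = sorted(
    ((name, risk_score(factors)) for name, factors in assets.items()),
    key=lambda item: item[1],
    reverse=True,
)
```

Both assets hold equally sensitive data, yet the exposed bucket ranks far higher because access and misconfiguration contribute to the score. That is the point of scoring across layers rather than on sensitivity alone.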

The Power of Accuracy and Efficiency

One of the most significant advantages of Sentra's LLM-powered data classification is the unprecedented accuracy it brings to the table. Inaccurate or incomplete data classification can lead to costly consequences, including data breaches, regulatory fines, and reputational damage. With LLMs, Sentra ensures that your data is classified with precision, reducing the risk of errors and omissions. Moreover, this enhanced accuracy translates into increased efficiency. Sentra's LLM engine can process vast volumes of data in a fraction of the time it would take a human workforce. This not only saves valuable resources but also enables organizations to proactively address security and compliance challenges.

Key developments of Sentra's classification engine encompass:

- Automatic classification of proprietary customer data with additional context to comply with regulations and privacy frameworks.

- LLM-powered scanning of data asset content and analysis of metadata, including file names, schemas, and tags.

- The capability for enterprises to train their LLMs and seamlessly integrate them into Sentra's classification engine for improved proprietary data classification.

We are excited about the possibilities that this advancement will unlock for our customers as we continue to innovate and redefine cloud data security. To learn more about Sentra’s LLM-powered classification engine, request a demo today.

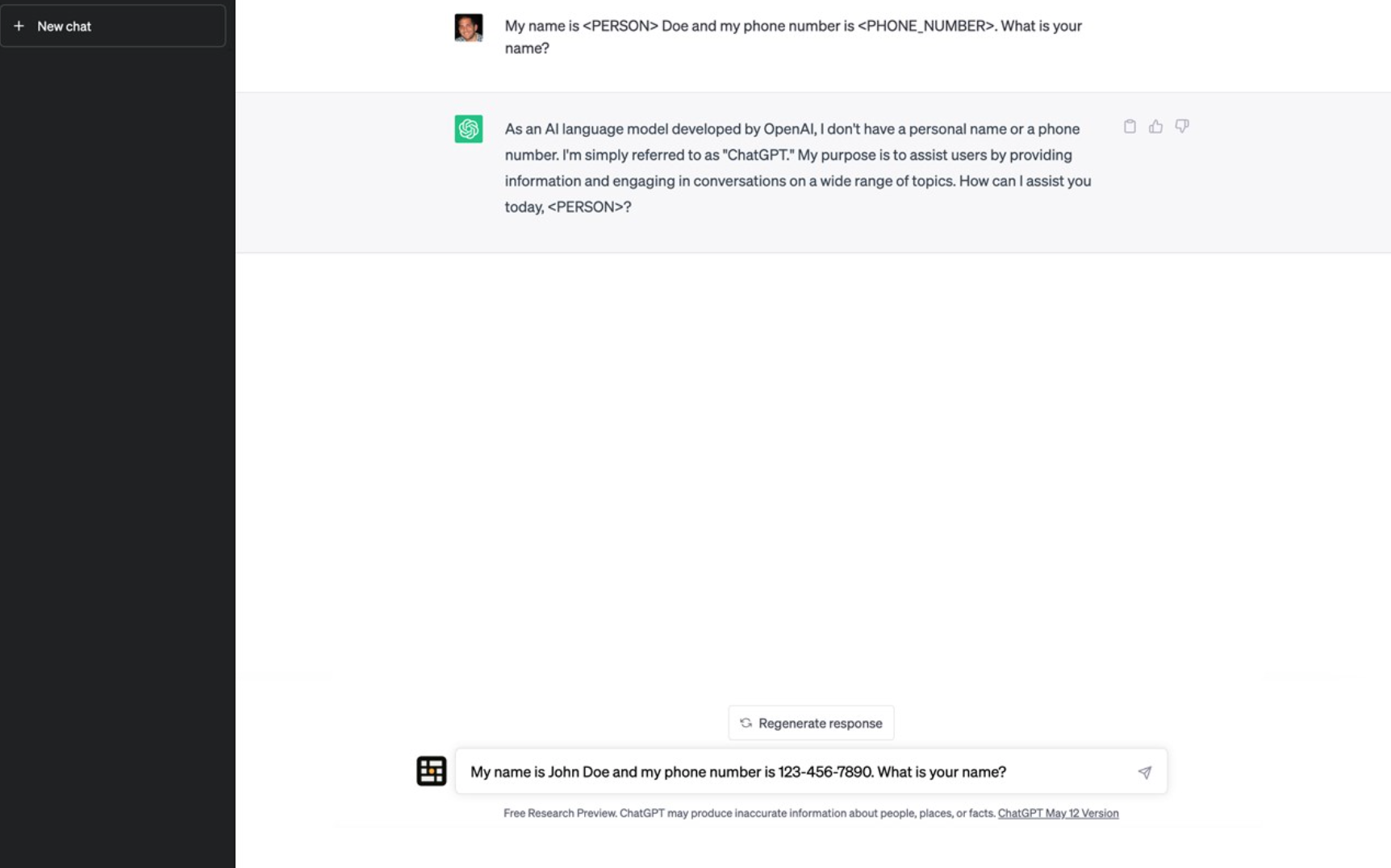

Why ChatGPT is a Data Loss Disaster: ChatGPT Data Privacy Concerns


ChatGPT is an incredible productivity tool. Everyone is already hooked on it because it is a force multiplier for just about any corporate job out there. Whether you want to proofread your emails, restructure data, investigate, write code, or perform almost any other task, ChatGPT can help.

However, for ChatGPT to provide effective assistance, it often requires a significant amount of context. This context is sometimes copied and pasted from internal corporate data, which can be sensitive in many cases. For example, a user might copy and paste a whole PDF file containing names, addresses, email addresses, and other sensitive information about a specific legal contract, simply to have ChatGPT summarize or answer a question about the contract's details.

Unlike a Google search, ChatGPT invites users to supply far more extensive information to solve the problem at hand. Furthermore, free generative AI tools typically offer their services at no charge in exchange for being able to improve their models based on the questions they are asked.

What happens if sensitive data is pasted into ChatGPT? OpenAI's models continuously improve by incorporating the information provided by users as input data. This helps the models learn how to enhance their answering abilities. Once the data is pasted and sent to OpenAI's servers, it becomes impossible to remove or request the redaction of specific information. While OpenAI's engineers are working to improve their technology in many other ways, implementing governance features that could mitigate these effects will likely take months or even years.

This situation creates a Data Loss Disaster, where employees are highly motivated and encouraged to copy and paste potentially sensitive information into systems that may store the submitted information indefinitely, without the ability to remove it or know exactly what information is stored within the complex models.

This has led companies such as Apple, Samsung, Verizon, JPMorgan, Bank of America, and others to completely ban the use of ChatGPT across their organizations. The goal is to prevent employees from accidentally leaking sensitive data while performing their everyday tasks. This approach helps minimize the risk of sensitive data being leaked through ChatGPT or similar tools.

Why We Built ChatDLP: Because Banning Productivity Tools Isn't the Answer

There are two main types of ChatGPT posts appearing in my LinkedIn feed.

The first is people showing off the different ways they’re using ChatGPT to be more effective at work. Everyone from developers to marketers has shared their prompts to do repetitive or difficult work faster.

The second is security leaders announcing their organizations will no longer permit using ChatGPT at work for security reasons. These usually come with a story about how sensitive data has been fed into the AI models.

For example, a month ago, researchers found that Samsung’s employees submitted sensitive information (meeting notes and source code) to ChatGPT to assist in their everyday tasks. Recently Apple blocked the use of ChatGPT in their company, so that data won’t leak into OpenAI’s models.

The Dangers of Sharing Sensitive Data with ChatGPT

What’s the problem with providing unfiltered access to ChatGPT? Why are organizations reacting this aggressively to a tool that clearly has many benefits?

One reason is that the models cannot avoid learning from sensitive data. They were not instructed on how to differentiate between sensitive and non-sensitive data, and once it is learned, it is extremely difficult to remove the sensitive data from the models. Once the models have the information, it’s very easy for attackers to continuously search for sensitive data that companies accidentally submitted. For example, attackers can simply ask ChatGPT to “provide all of the personal information that it is aware of.” And while there are mechanisms in place to prevent models from sharing this type of information, these can be easily circumvented by phrasing the request differently.

Introducing ChatDLP - the Sensitive Data Anonymizer for ChatGPT

In the past few months, we were approached by dozens of CISOs and security professionals asking for a DLP tool that would enable their employees to continue using ChatGPT safely.

So we’ve developed ChatDLP, a Chrome plugin and Edge add-on that anonymizes sensitive data typed into ChatGPT before it’s submitted to the model.

With ChatDLP installed, Sentra’s engine helps ensure with high accuracy that no sensitive data leaks from your organization, allowing you to stay compliant with privacy regulations while still letting employees use ChatGPT.

Sensitive data anonymized by ChatDLP includes:

- Names

- Emails

- Credit Card Numbers

- Social Security Numbers

- Phone Numbers

- Mailing addresses

- IP addresses

- Bank account details

- And more!

We built ChatDLP using Sentra's AI-based classification engine, which detects both pattern-based and free-text sensitive data using advanced LLM (Large Language Model) techniques - the same technology used by ChatGPT itself.
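To make the pattern-based half of that detection concrete, here is a minimal Python sketch. It is not ChatDLP's actual implementation: the pattern set, the `anonymize` function, and the placeholder labels are all assumptions made for illustration. Card numbers are checked with the Luhn checksum to cut down on false positives. Names and mailing addresses are free text, which regular expressions cannot reliably catch, and that is where LLM-based classification comes in.

```python
import re

# Hypothetical patterns for illustration only; a real DLP engine needs far more.
PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "IP": re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"),
    # 13 to 19 digits, optionally separated by single spaces or hyphens.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,18}\b"),
}


def luhn_valid(number: str) -> bool:
    """Return True if the digits in `number` pass the Luhn checksum."""
    digits = [int(c) for c in number if c.isdigit()]
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def anonymize(text: str) -> str:
    """Replace pattern-based sensitive values with placeholder labels."""

    def replace_card(match: re.Match) -> str:
        # Only redact digit runs that look like real card numbers.
        return "[CARD]" if luhn_valid(match.group()) else match.group()

    text = PATTERNS["CARD"].sub(replace_card, text)
    for label in ("EMAIL", "SSN", "IP"):
        text = PATTERNS[label].sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    prompt = "Summarize the contract for jane.doe@example.com, SSN 123-45-6789."
    print(anonymize(prompt))
```

Running the anonymizer on the text before it leaves the browser is the design choice that matters here: the model only ever sees the placeholders.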

You know that there’s no business case to be made for blocking ChatGPT in your organization. And now with ChatDLP - there’s no security reason either. Unleash the power of ChatGPT securely.

Cloud Data Hygiene is an Underrated Security Enabler

As one who remembers life and technology before the cloud, I appreciate even more the incredible changes the shift to the cloud has wrought. Productivity, speed of development, turbocharged collaboration – these are just the headlines. The true scope goes much deeper.

Yet with any new opportunity come new risks. And moving data at unprecedented speeds – even if it is to facilitate unprecedented productivity – enhances the risk that data won’t always end up where it’s supposed to be. In fact, it’s highly likely it won’t. It will find its way into corners of the cloud that are out of the reach of governance, risk and compliance tools - becoming shadow data that can pose a range of dangers to compliance, IP, revenue and even business continuity itself.

There are many approaches to mitigating the risk to cloud data. Yet there are also some foundations of cloud data management that should precede investment in technology and services. This is kind of like making sure your car has enough oil and air in the tires before you even consider that advanced defensive driving course. And one of the most important – yet often overlooked – data security measures you can take is ensuring that your organization follows proper cloud data hygiene.

Why Cloud Data Hygiene?

On the most basic level, cloud data hygiene practices ensure that your data is clean, accurate, consistent and is stored appropriately. Data hygiene affects all aspects of the data-driven business – from efficiency to decision making, from cloud storage expenses to customer satisfaction, and everything in between.

What does this have to do with security? Although it may not be the first thing that pops into a CISO’s mind when he or she hears “data hygiene,” the fact is that good cloud data hygiene improves the security posture of your organization.

By ensuring that cloud data is consistently stored only in sanctioned environments, good cloud data hygiene helps dramatically reduce the cloud data attack surface. This is a crucial concept, because cloud security risks no longer arise primarily from technical vulnerabilities in the cloud environment. They more frequently originate because there’s so much data to defend that organizations don’t know where it all is, who’s responsible for what, and what its exact security posture is. This is the cloud data attack surface: the sum of the total vulnerable, sensitive, and shadow data assets in the cloud. And cloud data hygiene is a key mitigating force.

Moreover, even when sensitive data is not under direct threat, good cloud data hygiene lowers indirect risk by mitigating the potential for serious damage from lateral movement following a breach. And, of course, cloud data security policies are more easily implemented and enforced when data is in good order.

The Three Commandments of Cloud Data Hygiene

- Commandment 1: Know Thy Data

Understanding is the first step on the road to enlightenment…and cloud data security. You need to understand what datasets you have, which can be deleted to lower storage expenses, exactly where each is stored, whether any copies were made and, if so, who has access to each copy. Once you know the ‘where,’ you must know the ‘which’ – which datasets are sensitive and which are subject to regulatory oversight? After that, the ‘how’: how are these datasets being protected? How are they accessed and by whom?

Only once you have the answers to all these (and more) questions can you start protecting the right data in the right way. And don’t forget that the shift to the cloud means that there are many sensitive data types that never existed on-prem, yet still need to be protected – for example, code stored in the cloud, applications that use other cloud services, or cloud-based APIs.

- Commandment 2: Know Thy Responsibilities

In any context, it’s crucial to understand who does what. There is a misperception that cloud providers are responsible for cloud data security. This is simply incorrect. Cloud providers are responsible for the security of the infrastructure over which services are provided. Securing applications and – especially – data is the sole responsibility of the customer.

Another aspect of cloud data management that falls solely on the customer’s shoulders is access control. If every user in your organization has admin privileges, any breach can be devastating. At the user level, applying the principle of least privilege is a good start.
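As a rough illustration of what a least-privilege review can look like, the Python sketch below compares what each user has been granted against what they need. The `granted` and `required` mappings and the permission names are hypothetical; in practice they would come from your cloud provider's IAM export and your own role definitions.

```python
def find_excess_privileges(granted, required):
    """Return, per user, the permissions that are granted but not required."""
    excess = {}
    for user, permissions in granted.items():
        extra = set(permissions) - set(required.get(user, ()))
        if extra:
            excess[user] = sorted(extra)
    return excess


if __name__ == "__main__":
    # Hypothetical data: both mappings are assumptions for this example.
    granted = {
        "alice": ["storage.read", "storage.write", "admin"],
        "bob": ["storage.read"],
    }
    required = {
        "alice": ["storage.read", "storage.write"],
        "bob": ["storage.read"],
    }
    print(find_excess_privileges(granted, required))
```

Anything the function reports is a candidate for removal, starting with blanket admin grants.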

- Commandment 3: Ensure Continuous Hygiene

To keep your cloud ecosystem healthy, safe and cost-effective over the long term, establish and enforce clear and detailed cloud data hygiene processes and procedures. Make sure you can effectively monitor the entire data lifecycle. You need to continuously monitor and scan all data, searching for new and changed data.
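One simple way to think about searching for new and changed data is to compare two inventory snapshots. The Python sketch below assumes each snapshot is a mapping from an object path to a content fingerprint (for example, a hash or an ETag). The snapshot format and the `diff_inventory` name are assumptions for illustration, not a description of any particular product.

```python
def diff_inventory(previous, current):
    """Compare two {path: fingerprint} snapshots of a data store."""
    new = sorted(path for path in current if path not in previous)
    changed = sorted(
        path
        for path in current
        if path in previous and current[path] != previous[path]
    )
    removed = sorted(path for path in previous if path not in current)
    return {"new": new, "changed": changed, "removed": removed}


if __name__ == "__main__":
    # Hypothetical snapshots taken on consecutive scans.
    yesterday = {"crm/customers.csv": "a1", "logs/app.log": "b2"}
    today = {
        "crm/customers.csv": "a9",
        "logs/app.log": "b2",
        "tmp/customers_copy.csv": "a9",
    }
    print(diff_inventory(yesterday, today))
```

Only the new and changed entries need to be rescanned and reclassified, which keeps continuous monitoring affordable.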

To ensure that data is secure both at rest and in motion, make sure both storage and transport have a minimal level of encryption – preventing unauthorized users from viewing or changing data. Most cloud vendors enable security teams to manage their own encryption keys – meaning that, once data is encrypted, even the cloud vendor can’t access it.

Finally, keep cloud API and data storage expenses in check by continuously tracking data wherever it moves or is copied. Multiple copies of petabyte-scale datasets, unknowingly made and used (for example) to train AI algorithms, will necessarily result in far higher (yet preventable) storage costs.
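The cost of untracked copies is easy to estimate once copies can be matched to each other. The Python sketch below assumes each dataset record carries a content fingerprint and a size in GB, and treats every copy beyond the first as redundant. The record format and the price per GB are hypothetical inputs, not real vendor pricing.

```python
from collections import defaultdict


def redundant_gb(datasets):
    """Sum the size of every copy beyond the first for each fingerprint."""
    sizes_by_fingerprint = defaultdict(list)
    for dataset in datasets:
        sizes_by_fingerprint[dataset["fingerprint"]].append(dataset["size_gb"])
    # Keep one (the largest) copy per fingerprint; everything else is redundant.
    return sum(sum(sizes) - max(sizes) for sizes in sizes_by_fingerprint.values())


def monthly_waste(datasets, price_per_gb_month):
    """Estimate the monthly storage cost of the redundant copies."""
    return redundant_gb(datasets) * price_per_gb_month


if __name__ == "__main__":
    # Hypothetical inventory: the same training set stored in two places.
    inventory = [
        {"path": "prod/training-set", "fingerprint": "abc", "size_gb": 1000},
        {"path": "ml-sandbox/training-set", "fingerprint": "abc", "size_gb": 1000},
        {"path": "prod/logs", "fingerprint": "def", "size_gb": 50},
    ]
    print(redundant_gb(inventory))
    print(monthly_waste(inventory, price_per_gb_month=0.02))
```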

The Bottom Line

Cloud data is a means to a very valuable end. Adopting technology and processes that facilitate effective cloud data hygiene enables cloud data security. And seamless cloud data security enables enterprises to unlock the vast yet often hidden value of their data.

Cloud Data Governance is a Security Enabler

Data governance is how we manage the availability, usability, integrity, privacy and security of the data in our enterprise systems. It’s based both on the internal policies that dictate how data can be used, and on the global and local regulations that so tightly control how we need to handle our data.

Effective data governance ensures that data is trustworthy, consistent and doesn't get misused. As businesses began to increasingly rely on data analytics to optimize operations and drive decision-making, data governance became a central part of enterprise operations. And as protection of data and data assets came under ever-closer regulatory scrutiny, data governance became a key part of policymaking, as well. But then came the move to the cloud. This represented a tectonic shift in how data is stored, transported and accessed. And data governance – notably the security facet of data governance – has not quite been able to keep up.

Cloud Data Governance: A Different Game

The shift to the cloud radically changed data governance. From many perspectives, it’s a totally different game. The key differentiator? Cloud data governance, unlike on-prem data governance, currently does not actually control all sensitive data. The origin of this challenge is that, in the cloud, there is simply too much movement of data. This is not a bad thing. The democratization of data has dramatically improved productivity and development speed. It’s facilitated the rise of a whole culture of data-driven decision making.

Yet the goal of cloud data governance is to streamline data collection, storage, and use within the cloud - enabling collaboration while maintaining compliance and security. And the fact is that data in the cloud is used at such scale and with such intensity that it’s become nearly impossible to govern, let alone secure. The cloud has given rise to every data security stakeholder’s nightmare: massive shadow data.

The Rise of Shadow Data

Shadow data is any data that is not subject to your organization’s data governance or security framework. It’s not governed by your data policies. It’s not stored according to your preferred security structure. It’s not subject to your access control limitations. And it’s probably not even visible to the security tools you use to monitor data access.

In most cases, shadow data is not born of malicious roots. It’s just data in the wrong place, at the wrong time.

Where does shadow data come from?

- …from prevalent hybrid and multi-cloud environments. Though excellent for productivity, these ecosystems present serious visibility challenges.